plotPartialDependence

Create partial dependence plot (PDP) and individual conditional expectation (ICE) plots

Syntax

Description

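plotPartialDependence(RegressionMdl,Vars) computes and plots the partial dependence between the predictor variables listed in Vars and the model predictions. In this syntax, the model predictions are the responses predicted by the regression model RegressionMdl, which contains the predictor data.

If you specify one variable in Vars, the function creates a line plot of the partial dependence on that variable. If you specify two variables in Vars, the function creates a surface plot of the partial dependence on the two variables.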
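Under the usual definition (see, for example, Hastie, Tibshirani, and Friedman, The Elements of Statistical Learning), the partial dependence on a predictor is the model prediction averaged over the observed values of the other predictors while that predictor is held fixed at each grid value. The following is a minimal Python sketch of that brute-force computation, assuming a model exposed as a function that maps a list of rows to a list of predictions. It is not MATLAB code and not the exact algorithm plotPartialDependence uses; partial_dependence_1d, toy_predict, and the grid values are hypothetical.

```python
from typing import Callable, List, Sequence

Row = List[float]


def partial_dependence_1d(
    predict: Callable[[Sequence[Row]], List[float]],
    X: Sequence[Row],
    var: int,
    grid: Sequence[float],
) -> List[float]:
    """Brute-force partial dependence of the predictions on predictor column `var`."""
    pd = []
    for value in grid:
        # Fix predictor `var` at `value` in every observation; other columns keep their observed values.
        X_mod = [list(row[:var]) + [value] + list(row[var + 1:]) for row in X]
        preds = predict(X_mod)
        # The partial dependence at `value` is the average prediction over all observations.
        pd.append(sum(preds) / len(preds))
    return pd


# Usage with a toy model y = 2*x0 + x1^2 (a stand-in for a fitted regression model).
toy_predict = lambda rows: [2 * r[0] + r[1] ** 2 for r in rows]
X = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
print(partial_dependence_1d(toy_predict, X, var=0, grid=[0.0, 1.0, 2.0]))
```

Each grid value costs one prediction call over the whole data set, so the cost grows with the number of grid points times the number of observations.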

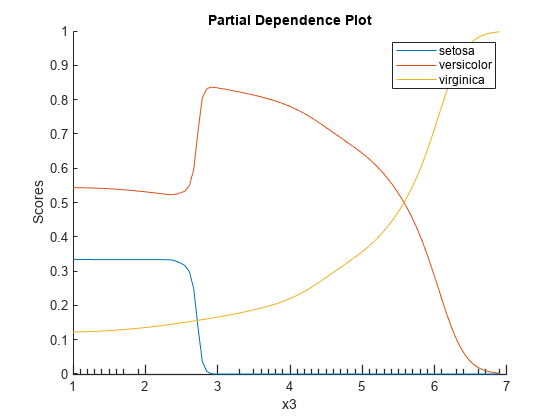

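plotPartialDependence(ClassificationMdl,Vars,Labels) computes and plots the partial dependence between the predictor variables listed in Vars and the scores for the classes specified in Labels, by using the classification model ClassificationMdl, which contains the predictor data.

If you specify one variable in Vars, the function creates a line plot of the partial dependence on that variable for each class in Labels. If you specify two variables in Vars, the function creates a surface plot of the partial dependence on the two variables; in this case, you must specify exactly one class in Labels.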
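For a classification model, the quantity being averaged is the predicted score of a class rather than a response. The hypothetical Python sketch below (not MATLAB code, and not the plotPartialDependence implementation) returns one partial dependence curve per class in a list of labels, assuming the model returns one dictionary of class scores per observation; class_partial_dependence, predict_scores, and toy_scores are illustrative names.

```python
from typing import Callable, Dict, List, Sequence

Row = List[float]


def class_partial_dependence(
    predict_scores: Callable[[Sequence[Row]], List[Dict[str, float]]],
    X: Sequence[Row],
    var: int,
    grid: Sequence[float],
    labels: Sequence[str],
) -> Dict[str, List[float]]:
    """Partial dependence of each requested class score on predictor column `var`."""
    result: Dict[str, List[float]] = {label: [] for label in labels}
    for value in grid:
        # Fix predictor `var` at `value` in every observation; keep the other columns.
        X_mod = [list(row[:var]) + [value] + list(row[var + 1:]) for row in X]
        scores = predict_scores(X_mod)  # one {class label: score} dict per observation
        for label in labels:
            # Average the score of this class over all modified observations.
            result[label].append(sum(s[label] for s in scores) / len(scores))
    return result


# Toy two-class scorer (hypothetical): score of "a" is (x0 + x1) / 2, score of "b" is the rest.
def toy_scores(rows):
    return [{"a": (r[0] + r[1]) / 2, "b": 1 - (r[0] + r[1]) / 2} for r in rows]


pd = class_partial_dependence(toy_scores, [[0.0, 0.2], [0.0, 0.6]], var=0, grid=[0.0, 1.0], labels=["a", "b"])
print(pd)  # one curve per class in labels
```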

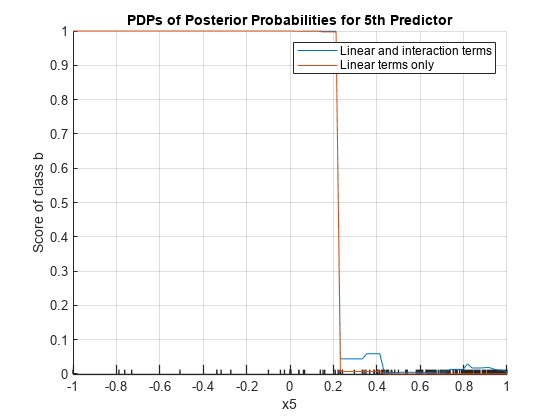

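plotPartialDependence(fun,Vars,Data) computes and plots the partial dependence between the predictor variables listed in Vars and the outputs returned by the custom model fun, by using the predictor data Data.

If you specify one variable in Vars, the function creates a line plot of the partial dependence on that variable for each column of the output returned by fun. If you specify two variables in Vars, the function creates a surface plot of the partial dependence on the two variables. When you specify two variables, fun must return a column vector, or you must specify the output column to use by setting the OutputColumns name-value argument.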
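With two variables, each point of the surface fixes both predictors at a pair of grid values and averages a single model output. The hypothetical Python sketch below mimics a custom model that returns several output columns and keeps only one of them, in the spirit of the OutputColumns argument; partial_dependence_2d, output_column, and toy_fun are illustrative assumptions, not the MATLAB signature.

```python
from typing import Callable, List, Sequence, Tuple

Row = List[float]


def partial_dependence_2d(
    fun: Callable[[Sequence[Row]], List[List[float]]],
    data: Sequence[Row],
    vars_: Tuple[int, int],
    grid1: Sequence[float],
    grid2: Sequence[float],
    output_column: int = 0,
) -> List[List[float]]:
    """Surface of averaged outputs: entry [a][b] fixes vars_ at (grid1[a], grid2[b])."""
    i, j = vars_
    surface = []
    for v1 in grid1:
        surface_row = []
        for v2 in grid2:
            modified = []
            for row in data:
                new_row = list(row)
                new_row[i] = v1  # fix the first selected predictor
                new_row[j] = v2  # fix the second selected predictor
                modified.append(new_row)
            outputs = fun(modified)  # one list of output columns per observation
            # A surface needs one value per grid point, so average a single output column.
            surface_row.append(sum(o[output_column] for o in outputs) / len(outputs))
        surface.append(surface_row)
    return surface


# Hypothetical custom model with two output columns: [x0 * x1 + x2, -x2].
def toy_fun(rows):
    return [[r[0] * r[1] + r[2], -r[2]] for r in rows]


print(partial_dependence_2d(toy_fun, [[0, 0, 1], [0, 0, 3]], (0, 1), [1, 2], [3, 4]))
# [[5.0, 6.0], [8.0, 10.0]]
```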

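plotPartialDependence(___,Name,Value) uses additional options specified by one or more name-value arguments. For example, if you specify "Conditional","absolute", the plotPartialDependence function creates a figure that contains a PDP, a scatter plot of the selected predictor variable against the predicted responses or scores, and an ICE plot for each observation.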
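ICE curves show the same computation before averaging: each observation gets its own curve as one predictor sweeps a grid, and the PDP is the pointwise mean of those curves. The hypothetical Python sketch below computes both; it does not reproduce the scatter plot or figure layout that "Conditional","absolute" produces, and ice_curves, pdp_from_ice, and toy_predict are illustrative names.

```python
from typing import Callable, List, Sequence

Row = List[float]


def ice_curves(
    predict: Callable[[Sequence[Row]], List[float]],
    X: Sequence[Row],
    var: int,
    grid: Sequence[float],
) -> List[List[float]]:
    """One ICE curve per observation: its predictions as predictor `var` sweeps `grid`."""
    curves = []
    for row in X:
        # Copies of a single observation that differ only in column `var`.
        sweep = [list(row[:var]) + [v] + list(row[var + 1:]) for v in grid]
        curves.append(predict(sweep))
    return curves


def pdp_from_ice(curves: List[List[float]]) -> List[float]:
    """The PDP is the pointwise average of the ICE curves."""
    n = len(curves)
    return [sum(curve[k] for curve in curves) / n for k in range(len(curves[0]))]


# Toy model with an interaction, y = x0 * x1, so the ICE curves have different slopes.
toy_predict = lambda rows: [r[0] * r[1] for r in rows]
curves = ice_curves(toy_predict, [[0.0, 1.0], [0.0, 3.0]], var=0, grid=[0.0, 1.0, 2.0])
print(curves)                # [[0.0, 1.0, 2.0], [0.0, 3.0, 6.0]]
print(pdp_from_ice(curves))  # [0.0, 2.0, 4.0]
```

Because the toy model contains an interaction, the two ICE curves have slopes 1 and 3 while the PDP shows only their average slope of 2; this kind of heterogeneity is what the per-observation ICE curves make visible.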

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Algorithms

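For both regression models (RegressionMdl) and classification models (ClassificationMdl), plotPartialDependence uses a predict function to predict responses or scores. plotPartialDependence chooses the appropriate predict function for the model and runs predict with its default settings. For details about each predict function, see the predict functions listed in the following two tables. If the specified model is a tree-based model (excluding boosted ensembles of trees) and Conditional is "none", then plotPartialDependence uses the weighted traversal algorithm instead of the predict function. For details, see Weighted Traversal Algorithm.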
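The following is a hypothetical Python sketch of the weighted traversal idea for a single regression tree, assuming it follows Friedman's classic recursion (also presented in Hastie, Tibshirani, and Friedman, The Elements of Statistical Learning): at a split on a selected predictor, follow only the branch its fixed value selects; at a split on any other predictor, follow both branches, weighted by the fraction of training observations in each. The Node class, weighted_traversal, and the "go left if x < cut" rule are illustrative assumptions; the sketch does not show how MATLAB handles observation weights, surrogate splits, or ensembles.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Node:
    """A node of a hypothetical binary regression tree."""
    n: int                        # number of training observations that reached this node
    value: float = 0.0            # prediction at a leaf
    var: Optional[int] = None     # split predictor index; None marks a leaf
    cut: float = 0.0              # observations with x[var] < cut go left
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def weighted_traversal(node: Node, fixed: Dict[int, float], weight: float = 1.0) -> float:
    """Partial dependence of one tree at the point `fixed` (predictor index -> value)."""
    if node.var is None:
        # Leaf: contribute its prediction, scaled by the weight accumulated on the way down.
        return weight * node.value
    assert node.left is not None and node.right is not None
    if node.var in fixed:
        # Split on a selected predictor: follow only the branch that its fixed value selects.
        child = node.left if fixed[node.var] < node.cut else node.right
        return weighted_traversal(child, fixed, weight)
    # Split on any other predictor: follow both branches, weighted by the
    # fraction of training observations that went each way.
    total = node.left.n + node.right.n
    return (weighted_traversal(node.left, fixed, weight * node.left.n / total)
            + weighted_traversal(node.right, fixed, weight * node.right.n / total))


# Hypothetical tree: split on x0 at 0.5; the left branch splits again on x1 at 1.0.
tree = Node(
    n=10, var=0, cut=0.5,
    left=Node(n=4, var=1, cut=1.0, left=Node(n=1, value=1.0), right=Node(n=3, value=5.0)),
    right=Node(n=6, value=10.0),
)
print(weighted_traversal(tree, {0: 0.2}))  # 0.25*1 + 0.75*5 = 4.0
print(weighted_traversal(tree, {0: 0.8}))  # 10.0
```

The traversal visits each node at most once per evaluation point and never calls the model on modified copies of the data, which is the usual motivation for using it with tree models instead of repeated predict calls.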

Regression Model Object

| Model Type | Full or Compact Regression Model Object | Function to Predict Responses |
|---|---|---|
| Bagged ensemble of decision trees | CompactTreeBagger | predict |
| Bagged ensemble of decision trees | TreeBagger | predict |
| Ensemble of regression models | RegressionEnsemble, RegressionBaggedEnsemble, CompactRegressionEnsemble | predict |
| Gaussian kernel regression model using random feature expansion | RegressionKernel | predict |
| Gaussian process regression | RegressionGP, CompactRegressionGP | predict |
| Generalized additive model | RegressionGAM, CompactRegressionGAM | predict |
| Generalized linear mixed-effects model | GeneralizedLinearMixedModel | predict |
| Generalized linear model | GeneralizedLinearModel, CompactGeneralizedLinearModel | predict |
| Linear mixed-effects model | LinearMixedModel | predict |
| Linear regression | LinearModel, CompactLinearModel | predict |
| Linear regression for high-dimensional data | RegressionLinear | predict |
| Neural network regression model | RegressionNeuralNetwork, CompactRegressionNeuralNetwork | predict |
| Nonlinear regression | NonLinearModel | predict |
| Censored linear regression | CensoredLinearModel, CompactCensoredLinearModel | predict |
| Regression tree | RegressionTree, CompactRegressionTree | predict |
| Support vector machine | RegressionSVM, CompactRegressionSVM | predict |

Classification Model Object

| Model Type | Full or Compact Classification Model Object | Function to Predict Labels and Scores |
|---|---|---|
| Discriminant analysis classifier | ClassificationDiscriminant, CompactClassificationDiscriminant | predict |
| Multiclass model for support vector machines or other classifiers | ClassificationECOC, CompactClassificationECOC | predict |
| Ensemble of learners for classification | ClassificationEnsemble, CompactClassificationEnsemble, ClassificationBaggedEnsemble | predict |
| Gaussian kernel classification model using random feature expansion | ClassificationKernel | predict |
| Generalized additive model | ClassificationGAM, CompactClassificationGAM | predict |
| k-nearest neighbor model | ClassificationKNN | predict |
| Linear classification model | ClassificationLinear | predict |
| Naive Bayes model | ClassificationNaiveBayes, CompactClassificationNaiveBayes | predict |
| Neural network classifier | ClassificationNeuralNetwork, CompactClassificationNeuralNetwork | predict |
| Support vector machine for one-class and binary classification | ClassificationSVM, CompactClassificationSVM | predict |
| Binary decision tree for multiclass classification | ClassificationTree, CompactClassificationTree | predict |
| Bagged ensemble of decision trees | TreeBagger, CompactTreeBagger | predict |

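Alternative Functionality

partialDependence computes partial dependence without visualization. It can compute the partial dependence for two variables and multiple classes in a single function call.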
