boxLabelDatastore

Datastore for bounding box label data

Description

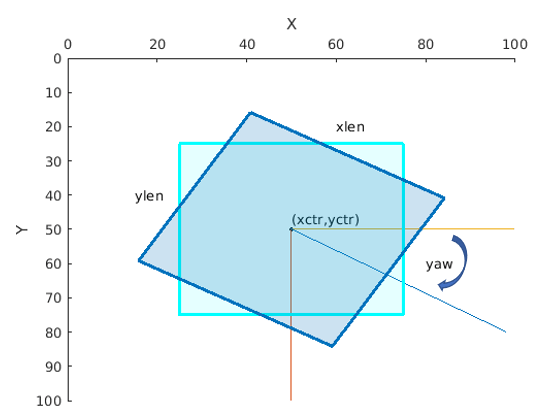

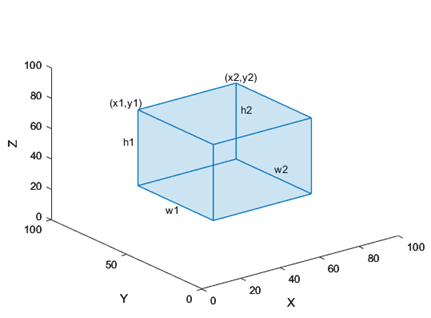

The boxLabelDatastore object creates a datastore for bounding box label data. Use this object to read labeled bounding box data for object detection.

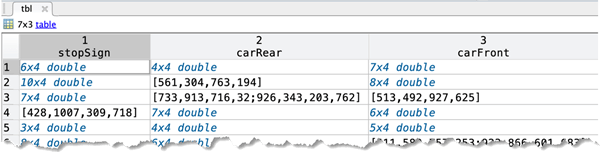

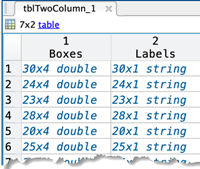

To read bounding box label data from a boxLabelDatastore object, use the read function. This object function returns a cell array with either two or three columns.
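A minimal sketch of one read call follows. The label table is hypothetical (class names and box coordinates are made up), and the sketch assumes the common two-column result, with bounding boxes in the first column and labels in the second.

```matlab
% Hypothetical labels for two images and two classes.
vehicle = {[10 20 50 40]; [30 40 60 50; 100 120 40 30]};
person  = {[200 50 20 60]; [15 25 20 55]};
blds = boxLabelDatastore(table(vehicle, person));

% Read the label data for the next image.
data = read(blds);

% Assumed layout: column 1 holds the boxes, column 2 holds the labels.
bboxes = data{1,1};
labels = data{1,2};
```

Indexing the cell array by column keeps the boxes and their labels aligned row for row.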

You can create a datastore that combines the boxLabelDatastore object with an ImageDatastore object using the combine object function. Use the combined datastore to train object detectors with training functions such as trainYOLOv4ObjectDetector and trainSSDObjectDetector. To modify the ReadSize property, you can use dot notation.
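The following sketch illustrates both points: combining with an image datastore, and setting ReadSize with dot notation. The blank images written to a temporary folder and the label values are placeholders invented for this example, not real training data.

```matlab
% Write two placeholder images to a temporary folder (illustration only).
imgDir = tempname;
mkdir(imgDir);
files = cell(2,1);
for k = 1:2
    files{k} = fullfile(imgDir, sprintf('img%d.png', k));
    imwrite(zeros(240, 320, 3, 'uint8'), files{k});
end
imds = imageDatastore(files);

% Hypothetical box labels, one table row per image.
vehicle = {[10 20 50 40]; [30 40 60 50; 100 120 40 30]};
tbl = table(vehicle);
blds = boxLabelDatastore(tbl);

% Combine images and labels; each read is expected to return
% {image, boxes, labels}.
cds = combine(imds, blds);
sample = read(cds);

% Set ReadSize with dot notation on a separate label datastore so that
% each read call returns up to two rows of label data.
blds2 = boxLabelDatastore(tbl);
blds2.ReadSize = 2;
batch = read(blds2);
```

A combined datastore like cds is the kind of input the training functions named above expect; the ReadSize change is shown on a separate object so it does not interact with the combined datastore.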

Creation

Description

blds = boxLabelDatastore(tbl1,...,tbln) creates a boxLabelDatastore object from one or more tables containing labeled bounding box data.
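As a minimal sketch of this syntax, the snippet below builds a small table by hand and passes it to boxLabelDatastore. The class names (vehicle, person) and box coordinates are made up for illustration. It assumes each table variable is a cell column of M-by-4 [x y width height] matrices, one row per image, and that Computer Vision Toolbox is installed.

```matlab
% Hypothetical labels for two images and two classes.
% Each cell holds an M-by-4 matrix of [x y width height] boxes.
vehicle = {[10 20 50 40]; [30 40 60 50; 100 120 40 30]};
person  = {[200 50 20 60]; [15 25 20 55]};
tbl = table(vehicle, person);

% Create the datastore from the table of box labels.
blds = boxLabelDatastore(tbl);
```

In practice the table usually comes from a labeling workflow rather than being typed by hand.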

Input Arguments

Properties

Object Functions

| Function | Description |
| --- | --- |
| combine | Combine data from multiple datastores |
| countEachLabel | Count occurrence of pixel or box labels |
| hasdata | Determine if data is available to read from label datastore |
| numpartitions | Number of partitions for label datastore |
| partition | Partition label datastore |
| preview | Read first row of data in datastore |
| progress | Percentage of data read from a datastore |
| read | Read data from label datastore |
| readall | Read all data in label datastore |
| reset | Reset label datastore to initial state |
| shuffle | Return shuffled version of label datastore |
| subset | Create subset of datastore or FileSet |
| transform | Transform datastore |
| isPartitionable | Determine whether datastore is partitionable |
| isShuffleable | Determine whether datastore is shuffleable |
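A few of these object functions can be exercised together, as in the sketch below. The label table is hypothetical, and the comments describe the expected behavior with the default ReadSize of 1 rather than guaranteed output.

```matlab
% Hypothetical labels for two images and two classes.
vehicle = {[10 20 50 40]; [30 40 60 50; 100 120 40 30]};
person  = {[200 50 20 60]; [15 25 20 55]};
blds = boxLabelDatastore(table(vehicle, person));

% Summarize how often each label occurs (returned as a table).
counts = countEachLabel(blds);

% Read everything at once, one row per image.
allData = readall(blds);

% Iterate with hasdata/read, then rewind with reset.
reset(blds);
numReads = 0;
while hasdata(blds)
    read(blds);
    numReads = numReads + 1;
end
reset(blds);

% Get a shuffled copy of the datastore.
shuffled = shuffle(blds);
```

The hasdata/read loop is the usual pattern when the label data should be processed one image at a time instead of loaded in full with readall.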

Examples

Version History

Introduced in R2019b

See Also

Apps

Functions

balanceBoxLabels | blockLocationsWithROI | analyzeNetwork (Deep Learning Toolbox) | estimateAnchorBoxes

Objects

Topics

- Datastores for Deep Learning (Deep Learning Toolbox)

- Deep Learning in MATLAB (Deep Learning Toolbox)

- Training Data for Object Detection and Semantic Segmentation