Localization and Pose Estimation

Inertial navigation, pose estimation, scan matching, Monte Carlo localization

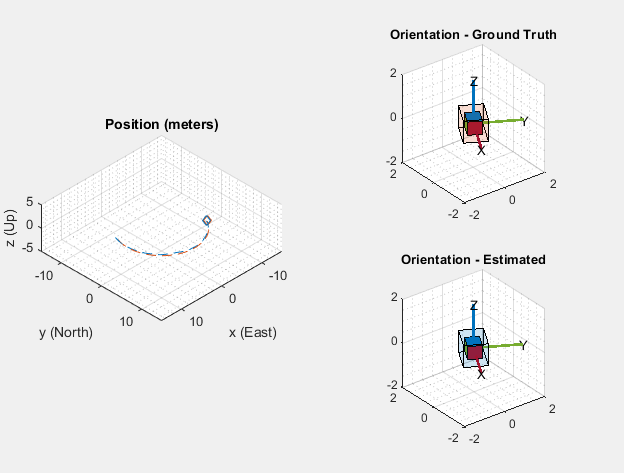

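Use localization and pose estimation algorithms to determine the pose (position and orientation) of your vehicle in its environment. Inertial sensor fusion uses filters to improve and combine readings from sensors such as IMU and GPS. Localization algorithms, such as Monte Carlo localization and scan matching, estimate your pose in a known map using range sensor or lidar readings. Pose graphs track your estimated poses and can be optimized based on edge constraints and loop closures.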
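As a rough illustration of the Monte Carlo localization idea, the following Python sketch runs a one-dimensional particle filter. It is not the toolbox implementation: the function names `mcl_step` and `run_demo`, the single landmark at a known position, the signed range model, and all noise values are assumptions chosen only for this example.

```python
import math
import random


def mcl_step(particles, control, measurement, landmark,
             motion_noise, sensor_noise, rng):
    """One predict / weight / resample cycle of a 1-D particle filter."""
    # Predict: shift every particle by the commanded displacement plus noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]

    # Weight: Gaussian likelihood of the measured range to the landmark.
    # Assumption: the sensor reports the signed distance (landmark - position).
    weights = []
    for p in moved:
        error = measurement - (landmark - p)
        weights.append(math.exp(-0.5 * (error / sensor_noise) ** 2))

    # If every particle is implausible, fall back to uniform weights.
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)

    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=0, steps=20, num_particles=500):
    """Simulate a vehicle driving toward a landmark and localize it."""
    rng = random.Random(seed)
    landmark = 10.0          # hypothetical known map feature
    true_pos = 2.0           # ground truth, unknown to the filter
    control = 0.2            # commanded displacement per step
    # Start with no prior knowledge: particles spread over the whole map.
    particles = [rng.uniform(0.0, 10.0) for _ in range(num_particles)]

    for _ in range(steps):
        true_pos += control
        measurement = (landmark - true_pos) + rng.gauss(0.0, 0.1)
        particles = mcl_step(particles, control, measurement, landmark,
                             motion_noise=0.05, sensor_noise=0.1, rng=rng)

    estimate = sum(particles) / len(particles)
    return estimate, true_pos


if __name__ == "__main__":
    estimate, true_pos = run_demo()
    print(f"estimated position: {estimate:.3f}, true position: {true_pos:.3f}")
```

The particle cloud starts spread across the map and collapses around the true position as range readings arrive. A real system would replace the single-landmark range model with a scan likelihood computed against an occupancy map.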

To model specific sensors, see Sensor Models.

For simultaneous localization and mapping, see SLAM.

Categories

- Inertial Sensor Fusion

Inertial navigation with IMU and GPS, sensor fusion, custom filter tuning

- Localization Algorithms

Particle filters, scan matching, Monte Carlo localization, pose graphs, odometry
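To give a feel for what the inertial sensor fusion category covers, here is a minimal Python sketch of a complementary filter that blends an integrated gyroscope rate with an accelerometer-derived tilt angle. The complementary filter is a stand-in chosen for brevity, not necessarily one of the filters the toolbox provides. The function name `complementary_filter`, the blending factor `alpha`, and the simulated bias are assumptions made for this illustration.

```python
def complementary_filter(gyro_rates, accel_angles, dt,
                         alpha=0.98, initial_angle=0.0):
    """Fuse gyro rates (rad/s) and accelerometer tilt angles (rad).

    The gyro term tracks fast changes but drifts with bias; the
    accelerometer term is noisy but drift-free. `alpha` sets how much
    the estimate trusts the integrated gyro at each step.
    """
    angle = initial_angle
    history = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        predicted = angle + rate * dt                      # integrate gyro
        angle = alpha * predicted + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history


if __name__ == "__main__":
    steps, dt = 1000, 0.01
    true_angle = 0.3                     # vehicle holds a constant tilt
    gyro_rates = [0.01] * steps          # true rate is zero; 0.01 rad/s is bias
    accel_angles = [true_angle] * steps  # idealized, noise-free tilt readings

    fused = complementary_filter(gyro_rates, accel_angles, dt)
    gyro_only = sum(rate * dt for rate in gyro_rates)
    print(f"fused: {fused[-1]:.4f} rad, gyro only: {gyro_only:.4f} rad")
```

Integrating the biased gyro alone drifts away from the true tilt, while the fused estimate settles close to it. Tuning `alpha` trades responsiveness against sensitivity to accelerometer noise, which is the kind of trade-off that filter tuning addresses.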