複数のビューからの structure from motion

structure from motion (SfM) は、一連の 2 次元ビューからシーンの 3 次元構造を推定するプロセスです。これは、ロボット操縦、自動運転、拡張現実など、数多くのアプリケーションで使用されます。この例では、ビューのシーケンスからキャリブレートされたカメラの姿勢を推定し、シーンの 3 次元構造を未知の倍率で再構成する方法を説明します。

概要

この例では、カメラ キャリブレーター アプリを使ってキャリブレートされたカメラで撮影した 2 次元ビューのシーケンスから、3 次元シーンを再構成する方法を説明します。例では imageviewset オブジェクトを使用して、カメラの姿勢、イメージ ポイント、ビューのペアにおける点間のマッチなど、各ビューに関連するデータの保存と管理を行います。

また、ペアワイズの点のマッチを使用して、前のビューに対する現在のビューでのカメラの姿勢を推定します。その後、imageviewset オブジェクトの findTracks メソッドを使用して、ペアワイズのマッチをより長い、複数のビューにわたる点のトラックにリンクします。これらのトラックは、後で関数triangulateMultiviewを使用したマルチビューの三角形分割と、関数bundleAdjustmentを使用したカメラの姿勢と 3 次元シーンの点を調整するための入力として役立ちます。

この例は、カメラの動き推定と稠密なシーンの再構成という 2 つの主要部分から構成されています。最初の部分では、複数のビューにわたってマッチした点の疎集合を使用して、各ビューでのカメラの姿勢を推定します。2 番目の部分では、ビューのシーケンスをもう一度反復し、vision.PointTrackerを使用して複数のビューにわたって点の稠密集合を追跡することで、シーンの緻密な 3 次元再構成を計算します。

カメラの動き推定アルゴリズムは以下のステップで構成されています。

連続するイメージの各ペアについて、点の一連の対応関係を求める。この例では、関数

detectSURFFeaturesを使って関心点を検出し、関数extractFeaturesを使って特徴記述子を抽出したうえで、関数matchFeaturesを使ってマッチを探します。あるいはvision.PointTrackerを使用して、複数のビューにわたって点を追跡することもできます。現在のビューの相対的な姿勢を推定する。これは前のビューを基準としたカメラの向きと位置です。この例では補助関数

helperEstimateRelativePoseを使用しますが、これはestimateEssentialMatrixとestrelposeを呼び出します。現在のビューの相対的な姿勢を、シーケンスの最初のビューの座標系に変換する。

現在のビューの属性であるカメラの姿勢とイメージ ポイントを保存する。

前のビューと現在のビューの間にあるインライアのマッチを保存する。

これまでに処理されたすべてのビューにわたって点のトラックを探す。

関数

triangulateMultiviewを使用して、トラックに対応する初期の 3 次元位置を計算する。関数

bundleAdjustmentを使用して、カメラの姿勢と 3 次元の点を調整する。調整されたカメラの姿勢をimageviewsetオブジェクトに保存する。

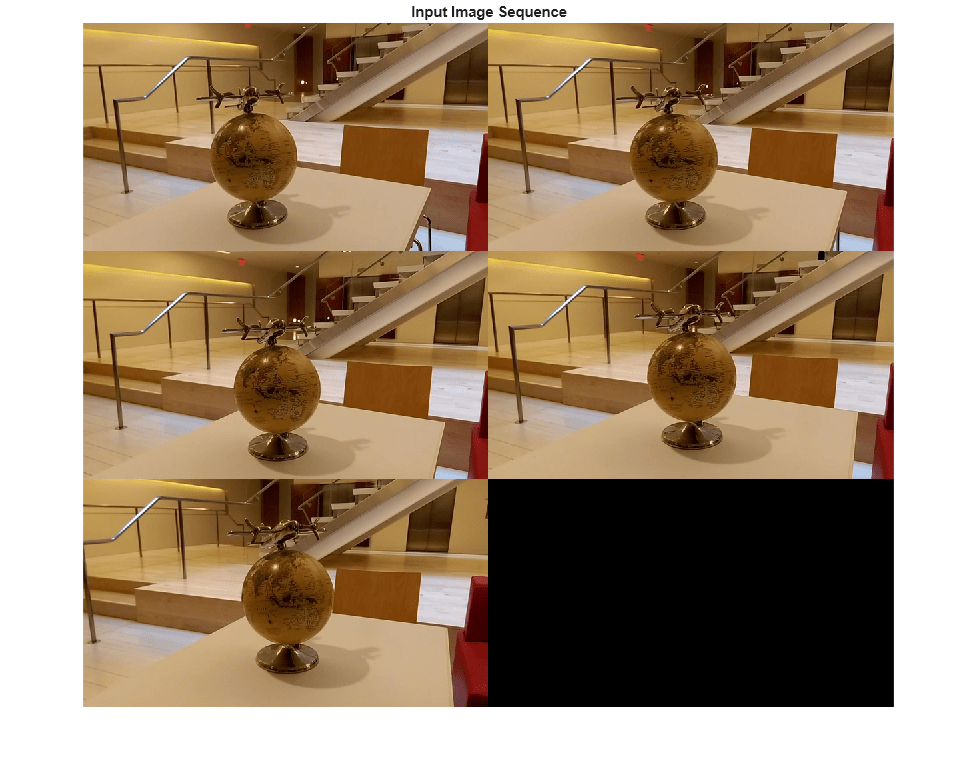

入力イメージ シーケンスの読み取り

イメージ シーケンスを読み取って表示します。

% Use |imageDatastore| to get a list of all image file names in a directory. imageDir = fullfile(toolboxdir('vision'), 'visiondata', ... 'structureFromMotion'); imds = imageDatastore(imageDir); % Display the images. figure montage(imds.Files, 'Size', [3, 2]); % Convert the images to grayscale. images = cell(1, numel(imds.Files)); for i = 1:numel(imds.Files) I = readimage(imds, i); images{i} = im2gray(I); end title('Input Image Sequence');

カメラ パラメーターの読み込み

カメラ キャリブレーター を使用して作成された cameraParameters オブジェクトを読み込みます。

data = load(fullfile(imageDir, "cameraParams.mat"));

cameraParams = data.cameraParams;最初のビューを含むビュー セットの作成

imageviewset オブジェクトを使用して、各ビューに関連付けられたイメージ ポイントとカメラの姿勢を、ビューのペア間における点のマッチと共に保存し、管理します。imageviewsetオブジェクトに値を入れた後、これを使用して複数のビューにわたる点のトラックを見つけ、関数triangulateMultiviewおよびbundleAdjustmentで使用されるカメラの姿勢を取得できます。

% Get intrinsic parameters of the camera intrinsics = cameraParams.Intrinsics; % Undistort the first image. I = undistortImage(images{1}, intrinsics); % Detect features. Increasing 'NumOctaves' helps detect large-scale % features in high-resolution images. Use an ROI to eliminate spurious % features around the edges of the image. border = 50; roi = [border, border, size(I, 2)- 2*border, size(I, 1)- 2*border]; prevPoints = detectSURFFeatures(I, NumOctaves=8, ROI=roi); % Extract features. Using 'Upright' features improves matching, as long as % the camera motion involves little or no in-plane rotation. prevFeatures = extractFeatures(I, prevPoints, Upright=true); % Create an empty imageviewset object to manage the data associated with each % view. vSet = imageviewset; % Add the first view. Place the camera associated with the first view % and the origin, oriented along the Z-axis. viewId = 1; vSet = addView(vSet, viewId, rigidtform3d, Points=prevPoints);

残りのビューの追加

残りのイメージを処理します。各イメージについて以下を行います。

前のイメージと現在のイメージ間で点をマッチングする。

前のビューに対して、現在のビューのカメラの姿勢を推定する。

現在のビューでのカメラの姿勢を、最初のビューに対するグローバル座標で計算する。

最初の 3 次元ワールド ポイントを三角形分割する。

バンドル調整を使用して、カメラの姿勢と 3 次元のワールド ポイントをすべて調整する。

for i = 2:numel(images) % Undistort the current image. I = undistortImage(images{i}, intrinsics); % Detect, extract and match features. currPoints = detectSURFFeatures(I, NumOctaves=8, ROI=roi); currFeatures = extractFeatures(I, currPoints, Upright=true); indexPairs = matchFeatures(prevFeatures, currFeatures, ... MaxRatio=0.7, Unique=true); % Select matched points. matchedPoints1 = prevPoints(indexPairs(:, 1)); matchedPoints2 = currPoints(indexPairs(:, 2)); % Estimate the camera pose of current view relative to the previous view. % The pose is computed up to scale, meaning that the distance between % the cameras in the previous view and the current view is set to 1. % This will be corrected by the bundle adjustment. [relPose, inlierIdx] = helperEstimateRelativePose(... matchedPoints1, matchedPoints2, intrinsics); % Get the table containing the previous camera pose. prevPose = poses(vSet, i-1).AbsolutePose; % Compute the current camera pose in the global coordinate system % relative to the first view. currPose = rigidtform3d(prevPose.A*relPose.A); % Add the current view to the view set. vSet = addView(vSet, i, currPose, Points=currPoints); % Store the point matches between the previous and the current views. vSet = addConnection(vSet, i-1, i, relPose, Matches=indexPairs(inlierIdx,:)); % Find point tracks across all views. tracks = findTracks(vSet); % Get the table containing camera poses for all views. camPoses = poses(vSet); % Triangulate initial locations for the 3-D world points. xyzPoints = triangulateMultiview(tracks, camPoses, intrinsics); % Refine the 3-D world points and camera poses. [xyzPoints, camPoses, reprojectionErrors] = bundleAdjustment(xyzPoints, ... tracks, camPoses, intrinsics, FixedViewId=1, ... PointsUndistorted=true); % Store the refined camera poses. vSet = updateView(vSet, camPoses); prevFeatures = currFeatures; prevPoints = currPoints; end

カメラの姿勢の表示

調整されたカメラの姿勢と 3 次元のワールド ポイントを表示します。

% Display camera poses. camPoses = poses(vSet); figure; plotCamera(camPoses, Size=0.2); hold on % Exclude noisy 3-D points. goodIdx = (reprojectionErrors < 5); xyzPoints = xyzPoints(goodIdx, :); % Display the 3-D points. pcshow(xyzPoints, VerticalAxis="y", VerticalAxisDir="down", MarkerSize= 45); grid on hold off % Specify the viewing volume. loc1 = camPoses.AbsolutePose(1).Translation; xlim([loc1(1)-5, loc1(1)+4]); ylim([loc1(2)-5, loc1(2)+4]); zlim([loc1(3)-1, loc1(3)+20]); camorbit(0, -30); title('Refined Camera Poses');

緻密な再構成の計算

イメージをもう一度処理します。今回はコーナーの稠密集合を検出し、vision.PointTrackerを使用してすべてのビューにわたりそれを追跡します。

% Read and undistort the first image I = undistortImage(images{1}, intrinsics); % Detect corners in the first image. prevPoints = detectMinEigenFeatures(I, MinQuality=0.001); % Create the point tracker object to track the points across views. tracker = vision.PointTracker(MaxBidirectionalError=1, NumPyramidLevels=6); % Initialize the point tracker. prevPoints = prevPoints.Location; initialize(tracker, prevPoints, I); % Store the dense points in the view set. vSet = updateConnection(vSet, 1, 2, Matches=zeros(0, 2)); vSet = updateView(vSet, 1, Points=prevPoints); % Track the points across all views. for i = 2:numel(images) % Read and undistort the current image. I = undistortImage(images{i}, intrinsics); % Track the points. [currPoints, validIdx] = step(tracker, I); % Clear the old matches between the points. if i < numel(images) vSet = updateConnection(vSet, i, i+1, Matches=zeros(0, 2)); end vSet = updateView(vSet, i, Points=currPoints); % Store the point matches in the view set. matches = repmat((1:size(prevPoints, 1))', [1, 2]); matches = matches(validIdx, :); vSet = updateConnection(vSet, i-1, i, Matches=matches); end % Find point tracks across all views. tracks = findTracks(vSet); % Find point tracks across all views. camPoses = poses(vSet); % Triangulate initial locations for the 3-D world points. xyzPoints = triangulateMultiview(tracks, camPoses,... intrinsics); % Refine the 3-D world points and camera poses. [xyzPoints, camPoses, reprojectionErrors] = bundleAdjustment(... xyzPoints, tracks, camPoses, intrinsics, FixedViewId=1, ... PointsUndistorted=true);

緻密な再構成の表示

カメラの姿勢と稠密な点群を表示します。

% Display the refined camera poses. figure; plotCamera(camPoses, Size=0.2); hold on % Exclude noisy 3-D world points. goodIdx = (reprojectionErrors < 5); % Display the dense 3-D world points. pcshow(xyzPoints(goodIdx, :), VerticalAxis="y", VerticalAxisDir="down", MarkerSize=45); grid on hold off % Specify the viewing volume. loc1 = camPoses.AbsolutePose(1).Translation; xlim([loc1(1)-5, loc1(1)+4]); ylim([loc1(2)-5, loc1(2)+4]); zlim([loc1(3)-1, loc1(3)+20]); camorbit(0, -30); title("Dense Reconstruction");

参考文献

[1] M.I.A. Lourakis and A.A. Argyros (2009). "SBA: A Software Package for Generic Sparse Bundle Adjustment". ACM Transactions on Mathematical Software (ACM) 36 (1): 1-30.

[2] R. Hartley, A. Zisserman, "Multiple View Geometry in Computer Vision," Cambridge University Press, 2003.

[3] B. Triggs; P. McLauchlan; R. Hartley; A. Fitzgibbon (1999). "Bundle Adjustment: A Modern Synthesis". Proceedings of the International Workshop on Vision Algorithms.Springer-Verlag. pp. 298-372.