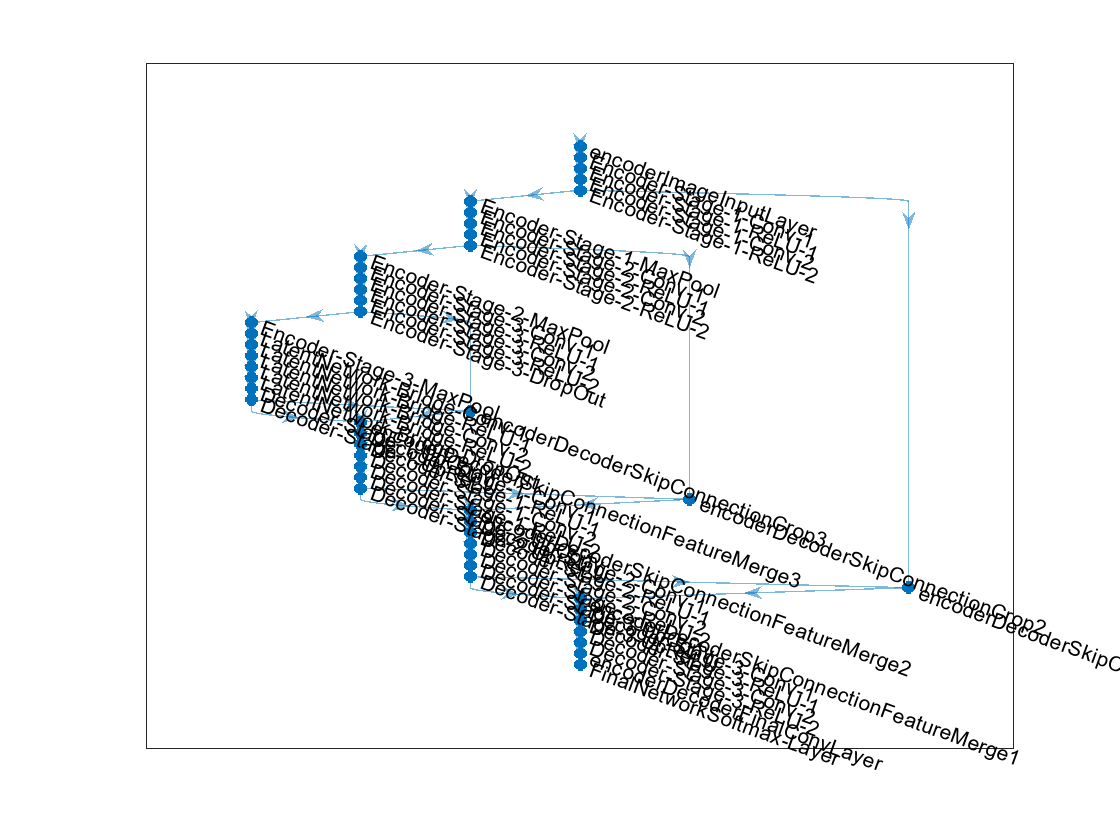

unet

Syntax

Description

unetNetwork = unet(imageSize,numClasses)

Use unet to create the U-Net network architecture. You must train the network using the Deep Learning Toolbox™ function trainnet (Deep Learning Toolbox).
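As a minimal sketch of this syntax, the following creates an untrained U-Net. The image size and class count are illustrative values, not taken from this page, and the code assumes Computer Vision Toolbox and Deep Learning Toolbox are installed.

```matlab
% Requires Computer Vision Toolbox and Deep Learning Toolbox.
imageSize = [480 640 3];   % illustrative [height width channels]
numClasses = 5;            % illustrative number of segmentation classes

% Create the (untrained) U-Net architecture.
unetNetwork = unet(imageSize,numClasses);

% Inspect the result before passing it to trainnet.
disp(class(unetNetwork))           % network object type
disp(numel(unetNetwork.Layers))    % number of layers in the network
```

The returned network still has to be trained with trainnet before it can be used for segmentation.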

[unetNetwork,outputSize] = unet(imageSize,numClasses) also returns the size of the output from the U-Net network.
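A short sketch of the two-output syntax follows. It assumes that outputSize is a three-element vector of the form [height width channels], with the channel count equal to numClasses. The input values are illustrative.

```matlab
% Requires Computer Vision Toolbox and Deep Learning Toolbox.
imageSize = [480 640 3];   % illustrative input size
numClasses = 5;            % illustrative class count

% Request the network output size as a second output.
[unetNetwork,outputSize] = unet(imageSize,numClasses);

% Assumed layout: [height width channels], channels equal to numClasses.
disp(outputSize)
```

Knowing the output size is mainly useful when the output is smaller than the input, because the ground truth labels must then be cropped to match.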

___ = unet(imageSize,numClasses,Name=Value) specifies options using one or more name-value arguments. For example, unet(imageSize,numClasses,NumFirstEncoderFilters=64) specifies the number of output channels for the first encoder stage as 64.
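The name-value syntax can be sketched as follows, using the NumFirstEncoderFilters option named above. The check at the end assumes that the layers of the returned network are listed in topological order, so that the first 2-D convolution layer belongs to the first encoder stage. The input values are illustrative.

```matlab
% Requires Computer Vision Toolbox and Deep Learning Toolbox.
imageSize = [256 256 3];   % illustrative input size
numClasses = 2;            % illustrative class count

% Set the number of output channels of the first encoder stage to 64.
net = unet(imageSize,numClasses,NumFirstEncoderFilters=64);

% Locate the first 2-D convolution layer (assumed to be in the first
% encoder stage) and read its filter count.
isConv = arrayfun(@(l) isa(l,"nnet.cnn.layer.Convolution2DLayer"),net.Layers);
firstConv = net.Layers(find(isConv,1));
disp(firstConv.NumFilters)
```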

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Tips

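- Use 'same' padding in convolution layers to maintain the same data size from input to output and to enable the use of a broad set of input image sizes.

- Use patch-based approaches for seamless segmentation of large images. You can extract image patches by using the randomPatchExtractionDatastore function.

- Use 'valid' padding to prevent border artifacts when you use patch-based approaches for segmentation.

- You can use a network created with the unet function for GPU code generation after training it with trainnet (Deep Learning Toolbox). For details and examples, see Code Generation and Deep Neural Network Deployment (Deep Learning Toolbox).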
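The padding and patch-extraction tips can be sketched as follows. This is a sketch under stated assumptions, not a recommended workflow: it assumes that unet accepts a ConvolutionPadding name-value argument with the values "same" and "valid", the scratch folder name unetPatchDemo and the random images are hypothetical stand-ins for real data, and the 572-by-572 input is the size used in the original U-Net paper [1], chosen here because it keeps the inputs to the max-pooling layers even.

```matlab
% Requires Computer Vision Toolbox, Image Processing Toolbox, and
% Deep Learning Toolbox.
numClasses = 2;

% 'same' padding: output height and width match the input.
[~,sameSize] = unet([256 256 3],numClasses,ConvolutionPadding="same");

% 'valid' padding (assumed argument value): each convolution trims the
% border, so the output is smaller than the input.
[~,validSize] = unet([572 572 1],numClasses,ConvolutionPadding="valid");
disp(sameSize)
disp(validSize)

% Write two synthetic image/label pairs to a hypothetical scratch folder.
demoDir = fullfile(tempdir,"unetPatchDemo");
imgDir = fullfile(demoDir,"images");
lblDir = fullfile(demoDir,"labels");
if ~isfolder(imgDir), mkdir(imgDir); end
if ~isfolder(lblDir), mkdir(lblDir); end
for k = 1:2
    imwrite(uint8(randi([0 255],256,256,3)),fullfile(imgDir,"img" + k + ".png"));
    imwrite(uint8(randi([0 1],256,256)),fullfile(lblDir,"lbl" + k + ".png"));
end

% Pair the images with their pixel labels and extract random 64-by-64 patches.
imds = imageDatastore(imgDir);
pxds = pixelLabelDatastore(lblDir,["background" "object"],[0 1]);
patchds = randomPatchExtractionDatastore(imds,pxds,[64 64],PatchesPerImage=4);
patches = preview(patchds);   % table of image patches and label patches
disp(patches)
```

With 'valid' padding, the label patches generally need to be cropped to the network output size before training, which is why the second output of unet is requested here.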

References

[1] Ronneberger, O., P. Fischer, and T. Brox. "U-Net: Convolutional Networks for Biomedical Image Segmentation." Medical Image Computing and Computer-Assisted Intervention (MICCAI). Vol. 9351, 2015, pp. 234–241.

[2] He, K., X. Zhang, S. Ren, and J. Sun. "Delving Deep Into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification." Proceedings of the IEEE International Conference on Computer Vision. 2015, pp. 1026–1034.

Extended Capabilities

Version History

Introduced in R2024a

See Also

Objects

dlnetwork (Deep Learning Toolbox)

Functions

trainnet (Deep Learning Toolbox) | deeplabv3plus | unet3d | pretrainedEncoderNetwork | semanticseg | evaluateSemanticSegmentation

Topics

- Semantic Segmentation of Multispectral Images Using Deep Learning

- Get Started with Semantic Segmentation Using Deep Learning

- Deep Learning in MATLAB (Deep Learning Toolbox)