detect

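Syntax

keypoints = detect(detector,I,bboxes)

[keypoints,scores] = detect(detector,I,bboxes)

[keypoints,scores,valid] = detect(detector,I,bboxes)

detectionResults = detect(detector,ds)

[___] = detect(___,Name=Value)

Description

keypoints = detect(detector,I,bboxes) detects keypoints for the objects located by the bounding boxes bboxes in the input image or images, I, using an HRNet object keypoint detector, detector. The detect function automatically resizes and rescales the input to match the size of the images used to train the detector. The function returns the locations of the keypoints detected in each input image as a set of object keypoints.

[keypoints,scores] = detect(detector,I,bboxes) also returns the detection confidence score for each keypoint. The confidence score reflects the location accuracy of the detected keypoint. Each score is in the range [0, 1]. A higher score indicates greater confidence in the detection.

[keypoints,scores,valid] = detect(detector,I,bboxes) also returns the validity of each detected keypoint.

detectionResults = detect(detector,ds) detects object keypoints in the images returned by the read function of the datastore ds, and returns the results as a table.

[___] = detect(___,Name=Value) specifies options using one or more name-value arguments, in addition to any combination of arguments from previous syntaxes. For example, MiniBatchSize=16 processes the bounding boxes in batches of 16.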

Examples


Read a sample image into the workspace.

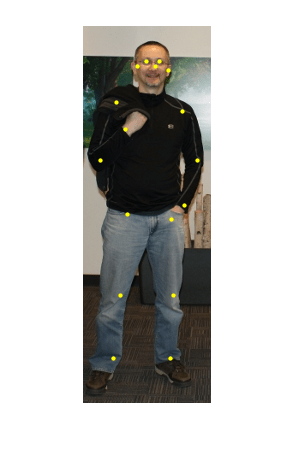

I = imread("visionteam.jpg");

Crop and display one person from the image.

personBox = [262.5 19.51 127.98 376.98];

personImg = imcrop(I,personBox);

figure

imshow(personImg)

Specify a bounding box for the person in the image. You can use the bounding box region as input to the object keypoint detector to detect the person.

bbox = [3.87,21.845,118.97,345.91];

Load a pretrained HRNet object keypoint detector.

keypointDetector = hrnetObjectKeypointDetector("human-full-body-w32");

Detect the keypoints of the person in the image by using the pretrained HRNet object keypoint detector.

[keypoints,keypointScores,valid] = detect(keypointDetector,personImg,bbox)

keypoints = 17×2 single matrix

72.8781 40.7078

77.1927 36.2037

63.9647 36.2037

86.2008 45.2118

55.2407 40.7078

100.2811 85.7481

33.9517 76.7401

114.5508 135.2925

17.5455 135.2925

102.2699 180.3329

43.3386 103.7643

89.3260 193.8450

45.1380 189.3410

90.9360 270.4136

38.0240 270.4136

⋮

keypointScores = 17×1 single column vector

0.9616

0.9819

0.9797

0.9824

0.9627

0.9540

0.8812

0.9696

0.9909

0.9675

0.9327

0.8247

0.8356

0.9527

0.9111

⋮

valid = 17×1 logical array

1

1

1

1

1

1

1

1

1

1

1

1

1

1

1

⋮

Insert the person keypoints into the image and display the results.

detectedKeypoints = insertObjectKeypoints(personImg,keypoints,KeypointColor="yellow",KeypointSize=2);

imshow(detectedKeypoints)

Input Arguments

detector: HRNet object keypoint detector, specified as an hrnetObjectKeypointDetector object.

I: Test images, specified as a numeric array of size H-by-W-by-C-by-T. Images must be real, nonsparse, and RGB.

H: Height of the image, in pixels.

W: Width of the image, in pixels.

C: Number of channels in each image, which must equal the input channel size of the detector. Because this function requires RGB images, the channel size must be 3.

T: Number of test images in the array. The function computes the object keypoint detection results for each test image in the array.

The intensity range of the test image must be similar to the intensity range of the images used to train the detector. For example, if you train the detector on uint8 images, rescale the test image to the range [0, 255] by using the im2uint8 or rescale function. The size of the test image must be comparable to the sizes of the images used in training. If these sizes are very different, the detector has difficulty detecting object keypoints because the scale of the objects in the test image differs from the scale of the objects the detector is trained to identify.

Data Types: uint8 | uint16 | int16 | double | single
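The intensity guidance above can be sketched as follows. This is a minimal illustration, not part of the original example: it assumes a detector trained on uint8 images, and the variable Idouble is a hypothetical double-precision test image whose values fall outside [0, 255]. It uses the rescale function to map the data linearly onto that range before casting.

```matlab
% Hypothetical test image: 4-by-4 RGB, double precision, values from 1 to 48.
Idouble = cat(3,magic(4),2*magic(4),3*magic(4));

% Map the full data range linearly onto [0, 255], then cast to uint8.
% Assumption: the detector was trained on uint8 images.
Iuint8 = uint8(rescale(Idouble,0,255));

% Inspect the class and the intensity range of the result.
disp(class(Iuint8))
disp([min(Iuint8(:)) max(Iuint8(:))])
```

Note that rescale stretches whatever range the data happens to have, so it changes contrast. If the image is already a valid double image in [0, 1], im2uint8 is likely the better fit because it applies a fixed scaling.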

bboxes: Locations of the objects detected in the input image or images, specified as one of these options:

M-by-4 matrix— The input is a single test image. M is the number of object bounding boxes in the image.

T-by-1 cell array— The input is an array of test images. T is the number of test images in the array. Each cell contains an M-by-4 matrix of object bounding boxes for the corresponding image, where M is the number of bounding boxes in the image.

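The table describes the possible formats of bounding boxes.

| Bounding Box | Description |
|---|---|
| rectangle | Defined in spatial coordinates as an M-by-4 numeric matrix with rows of the form [x y w h], where M is the number of rectangles, x and y specify the upper-left corner of the rectangle, and w and h specify its width and height. |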

Data Types: uint8 | uint16 | int16 | double | single
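As a rough sketch of the two bboxes layouts described above, the following snippet builds an M-by-4 matrix for a single image and a T-by-1 cell array for an array of images. All box values and variable names here are hypothetical and chosen only for illustration.

```matlab
% Single image: M-by-4 matrix, one [x y w h] row per object (M = 2 here).
bboxesSingle = [10 20 50 120; 80 25 45 110];

% Array of T images: T-by-1 cell array with one M-by-4 matrix per image.
% Here T = 3, and the images contain 2, 1, and 1 objects respectively.
bboxesBatch = cell(3,1);
bboxesBatch{1} = [10 20 50 120; 80 25 45 110];
bboxesBatch{2} = [30 15 60 130];
bboxesBatch{3} = [5 10 40 100];

% Because [x y] is the upper-left corner, adding [w h] gives the
% opposite (lower-right) corner of each box.
lowerRight = bboxesSingle(:,1:2) + bboxesSingle(:,3:4);
```

The number of rows can differ from cell to cell, which is why the multi-image case uses a cell array rather than a 3-D numeric array.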

ds: Test images, specified as an ImageDatastore, CombinedDatastore, or TransformedDatastore object containing the full filenames of the test images. The images in the datastore must be RGB images.

When you call the read function, the datastore ds must return a table or a cell array with these columns:

| image data | bboxes | box labels |
|---|---|---|
| T-by-1 cell array, in which each cell contains an input image. The images in the datastore must be RGB images. | T-by-1 cell array, in which each cell contains an M-by-4 matrix of 2-D bounding boxes specifying object locations within the corresponding input image. Each row of the matrix specifies the location of a bounding box in the format [x y w h]. | T-by-1 cell array, in which each cell contains an M-by-1 categorical vector of object class names. All the categorical data returned by the datastore must use the same categories. |

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: detect(detector,I,bboxes,MiniBatchSize=16) detects object keypoints in each image using 16-element batches of bounding boxes.

MiniBatchSize: Minimum batch size, specified as a positive integer. Use the MiniBatchSize argument when processing an image with a large number of objects. When you specify this argument, the function groups the bounding boxes surrounding the objects into batches of the specified size and processes each batch together to improve computational efficiency. Increase the minimum batch size to decrease processing time. Decrease the size to use less memory.

ExecutionEnvironment: Hardware resource on which to run the detector, specified as "auto", "gpu", or "cpu".

"auto": Use a GPU if it is available. Otherwise, use the CPU.

"gpu": Use the GPU. To use a GPU, you must have Parallel Computing Toolbox™ and a CUDA®-enabled NVIDIA® GPU. If a suitable GPU is not available, the function returns an error. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).

"cpu": Use the CPU.

Acceleration: Performance optimization, specified as one of these options:

"auto": Automatically apply a number of optimizations suitable for the input network and hardware resource.

"mex": Compile and execute a MEX function. This option is available only when using a GPU. Using a GPU requires Parallel Computing Toolbox and a CUDA-enabled NVIDIA GPU. If Parallel Computing Toolbox or a suitable GPU is not available, then the function returns an error. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).

"none": Disable all acceleration.

The default option is "auto". With the "auto" option, MATLAB® applies a number of compatible optimizations but never generates a MEX function.

Using the Acceleration options "auto" and "mex" can offer performance benefits, but at the expense of an increased initial run time. Subsequent calls with compatible parameters are faster. Use performance optimization when you plan to call the function multiple times using new input data.

The "mex" option generates and executes a MEX function based on the network and parameters used in the function call. You can have several MEX functions associated with a single network at one time. Clearing the network variable also clears any MEX functions associated with that network.

The "mex" option is available only for input data specified as a numeric array, cell array of numeric arrays, table, or image datastore. No other type of datastore supports the "mex" option.

The "mex" option is available only when you use a GPU. You must also have a C/C++ compiler installed. For setup instructions, see Set Up Compiler (GPU Coder).

"mex" acceleration does not support all layers. For a list of supported layers, see Supported Layers (GPU Coder).

Output Arguments

keypoints: Locations of object keypoints detected in the input image or images, returned as one of these options:

17-by-2-by-M array — The input is a single test image. Each row in the array is of the form [x y] where x and y specify the location of a detected keypoint in an object. M is the number of objects in the image.

T-by-1 cell array — The input is an array of test images. T is the number of test images in the array. Each cell in the cell array contains a 17-by-2-by-M array specifying the keypoint detections for the M objects in the image.

Each object has 17 detected keypoints.

Data Types: double

scores: Detection confidence scores for object keypoints, returned as one of these options:

17-by-M matrix — The input is a single test image. M is the number of objects in the image.

T-by-1 cell array — The input is an array of test images. T is the number of test images in the array. Each cell in the array contains a 17-by-M matrix indicating the detection scores for the keypoints of each of the M objects in an image.

Each object in an image has 17 keypoint confidence scores. A higher score indicates higher confidence in the detection.

Data Types: double

valid: Validity of the detected object keypoints, returned as one of these options:

17-by-M logical matrix — The input is a single test image. M is the number of objects in the image.

T-by-1 cell array — The input is an array of test images. T is the number of test images in the array. Each cell in the array contains a 17-by-M logical matrix indicating the keypoint validity values for the keypoints of each of the M objects in the corresponding image.

Each object in an image has 17 keypoint validity values. A value of 1 (true) indicates a valid keypoint and 0 (false) indicates an invalid keypoint.

Data Types: logical
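One common use of the validity output is to discard invalid keypoints before further processing. The snippet below is a minimal sketch for a single object (M = 1). The keypoint locations and validity flags are hypothetical placeholder data standing in for the outputs of [keypoints,scores,valid] = detect(detector,I,bboxes).

```matlab
% Hypothetical outputs for one object; real values come from detect.
keypoints = [(1:17)' (101:117)'];   % 17-by-2 matrix of [x y] locations
valid = true(17,1);                 % 17-by-1 logical validity flags
valid([4 9]) = false;               % pretend keypoints 4 and 9 are invalid

% Logical indexing keeps only the rows flagged as valid.
validKeypoints = keypoints(valid,:);

% Count how many of the 17 keypoints remain.
numValid = nnz(valid);
```

For several objects, the same indexing can be applied per object, for example keypoints(valid(:,m),:,m) for the m-th object, since valid then has one column per object.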

detectionResults: Detection results, returned as a two-column table with variable names Keypoints and Scores. Each element of the Keypoints column contains a 17-by-2-by-M array, for M objects in the image. Each row of the array contains a keypoint location for the corresponding object in the format [x y]. Each element of the Scores column contains a 17-by-1-by-M array, where M is the number of objects in the image. Each row of the array contains the keypoint detection confidence score for one of the 17 keypoints in the corresponding object in that image.
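To illustrate how the results table might be consumed, the sketch below builds a mock table with the same layout (Keypoints and Scores columns holding 17-by-2-by-M and 17-by-1-by-M arrays) and reads values back out of it. The table contents are random placeholder data, not detector output, and the variable names numObjects and meanScores are hypothetical.

```matlab
% Mock results for two images, standing in for detect(detector,ds).
% Image 1 contains one object and image 2 contains three objects.
Keypoints = {rand(17,2,1); rand(17,2,3)};
Scores = {rand(17,1,1); rand(17,1,3)};
detectionResults = table(Keypoints,Scores);

% The number of objects in each image is the size of the third dimension.
numObjects = cellfun(@(k) size(k,3),detectionResults.Keypoints);

% Mean confidence of each object in the second image. The mean over the
% 17 keypoints is 1-by-1-by-3, which squeeze turns into a 3-by-1 vector.
meanScores = squeeze(mean(detectionResults.Scores{2},1));
```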

Extended Capabilities

The detect function does not support an ImageDatastore object as input for code generation.

Code generation does not support the MiniBatchSize, ExecutionEnvironment, and Acceleration name-value arguments for detect.

To prepare an hrnetObjectKeypointDetector object for code generation, use loadHRNETObjectKeypointDetector.


GPU Arrays

Accelerate code by running on a graphics processing unit (GPU) using Parallel Computing Toolbox™.

Version History

Introduced in R2023b

Starting from R2025a, you can vary the number of bounding boxes specified as input to the detect function during code generation. This means that the limitations on bounding boxes from previous releases have been removed. You can now assign bounding boxes dynamically during runtime.

Prior to R2024b, the bboxes argument to the detect function must be a code generation constant (coder.const()) and an M-by-4 matrix. The number of bounding boxes M and the values of the bounding boxes must be fixed for code generation.

Starting from R2024b, you can dynamically adjust the bounding box values during runtime. However, the number of bounding boxes M must be a code generation constant.
