Quantization, Projection, and Pruning

Compress a deep neural network by performing quantization, projection, or pruning

Use Deep Learning Toolbox™ together with the Deep Learning Toolbox Model Compression Library support package to reduce the memory footprint and computational requirements of a deep neural network by:

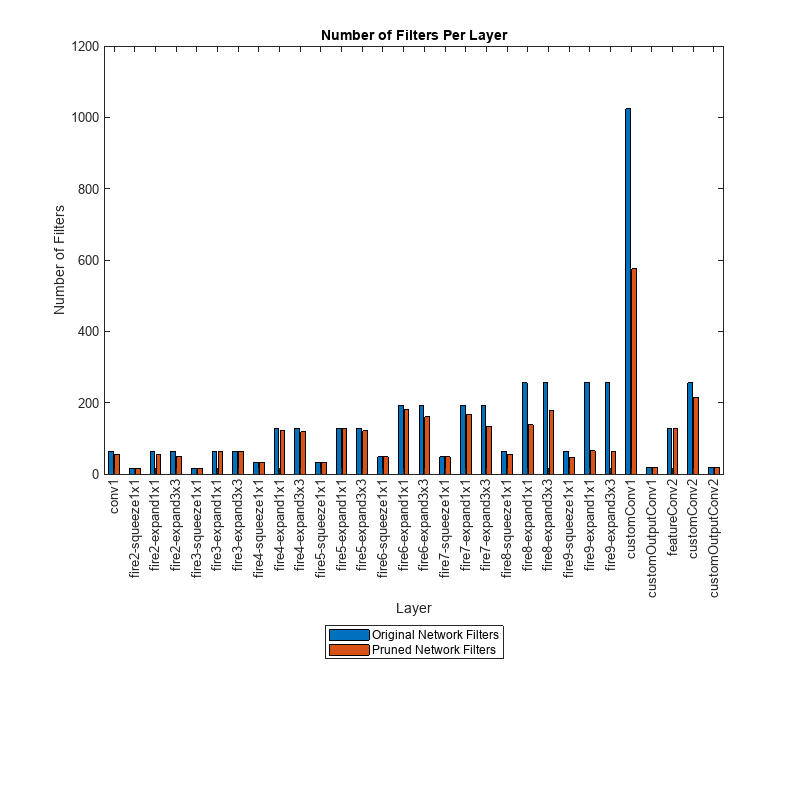

Pruning filters from convolution layers by using a first-order Taylor approximation. You can then generate C/C++ or CUDA® code from this pruned network.
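The first-order Taylor criterion can be sketched in a few lines. The following is a minimal, framework-free Python sketch, not the implementation behind `taylorPrunableNetwork`. It assumes the common formulation in which a filter's importance is the absolute value of the mean of (activation × gradient of the loss with respect to that activation), taken over the batch and spatial positions, and that those activations and gradients have already been collected per filter. The functions `taylor_scores` and `filters_to_prune` are hypothetical names.

```python
# Minimal sketch of first-order Taylor filter scoring (hypothetical helper names).
# Assumption: activations and loss gradients are already collected per filter
# and flattened over the batch and spatial dimensions.


def taylor_scores(activations, gradients):
    """Return one importance score per filter.

    activations, gradients: lists (one entry per filter) of equal-length float lists.
    Score_k = |mean_i(a_ki * g_ki)|, a first-order estimate of how much the loss
    would change if filter k's output were set to zero.
    """
    scores = []
    for acts, grads in zip(activations, gradients):
        if len(acts) != len(grads) or not acts:
            raise ValueError("each filter needs matching, non-empty data")
        total = sum(a * g for a, g in zip(acts, grads))
        scores.append(abs(total / len(acts)))
    return scores


def filters_to_prune(scores, num_to_prune):
    """Indices (ascending) of the num_to_prune filters with the lowest scores."""
    ranked = sorted(range(len(scores)), key=lambda k: scores[k])
    return sorted(ranked[:num_to_prune])


if __name__ == "__main__":
    acts = [[1.0, 2.0], [0.1, 0.0], [3.0, -1.0]]    # hypothetical activations, 3 filters
    grads = [[0.5, 0.5], [0.2, 0.9], [1.0, 1.0]]    # hypothetical loss gradients
    scores = taylor_scores(acts, grads)
    print(filters_to_prune(scores, 1))              # prints [1]
```

Under this linear approximation, the lowest-scoring filters contribute least to the loss, which makes them the removal candidates. Pruning is typically done iteratively, alternating score accumulation over mini-batches with fine-tuning, rather than in one shot.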

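Projecting layers by performing principal component analysis (PCA) on the layer activations using a data set representative of the training data, and applying linear projections to the learnable parameters of the layer. Forward passes of a projected deep neural network are typically faster when you deploy the network to embedded hardware using library-free C/C++ code generation.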
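The idea behind projection can be illustrated for a single fully connected layer with weights W (out × d). A minimal Python sketch, assuming the layer's input activations have been collected from representative data: compute their covariance, take the top k principal directions Q (d × k) by power iteration with deflation, and replace W x with (W Q)(Qᵀ x). This is a simplified illustration of the technique, not the toolbox's projection algorithm; all function names are hypothetical, and power iteration is used only to keep the example dependency-free.

```python
import math
import random


def covariance(samples):
    """Sample covariance (d x d) of n activation vectors of length d (n >= 2)."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for s in samples:
        c = [s[j] - mean[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j]
    return [[v / (n - 1) for v in row] for row in cov]


def top_components(cov, k, iters=200, seed=0):
    """Top-k unit eigenvectors of a symmetric PSD matrix (the rows of Q^T)."""
    rng = random.Random(seed)
    d = len(cov)
    a = [row[:] for row in cov]  # working copy that gets deflated
    comps = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):  # power iteration
            w = [sum(a[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:  # remaining matrix is zero; keep v as is
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue; subtract lam * v v^T (deflation).
        lam = sum(v[i] * sum(a[i][j] * v[j] for j in range(d)) for i in range(d))
        for i in range(d):
            for j in range(d):
                a[i][j] -= lam * v[i] * v[j]
        comps.append(v)
    return comps


def project_layer(weights, comps):
    """Precompute W Q (out x k) from W (out x d) and the rows of Q^T."""
    return [[sum(wj * qj for wj, qj in zip(w, q)) for q in comps] for w in weights]


def projected_forward(wq, comps, x, bias):
    """Compute (W Q)(Q^T x) + b, the projected replacement for W x + b."""
    z = [sum(qj * xj for qj, xj in zip(q, x)) for q in comps]  # Q^T x, length k
    return [sum(r * zi for r, zi in zip(row, z)) + b for row, b in zip(wq, bias)]


def param_counts(out, d, k):
    """(original, projected) number of weight values for one fully connected layer."""
    return out * d, out * k + d * k


if __name__ == "__main__":
    # Hypothetical activations lying on the 1-D subspace spanned by [1, 2, 0].
    samples = [[t, 2.0 * t, 0.0] for t in (-2.0, -1.0, 0.5, 1.0, 3.0)]
    comps = top_components(covariance(samples), k=1)
    W = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
    wq = project_layer(W, comps)
    print(projected_forward(wq, comps, [2.0, 4.0, 0.0], [0.0, 0.0]))  # close to W x = [2, 4]
    print(param_counts(out=2, d=3, k=1))
```

The projected layer stores out·k + d·k values instead of out·d, so it is smaller whenever k < out·d / (out + d). The approximation is exact when the activations lie in the span of the retained components, as in the rank-1 data above, and otherwise discards the components outside that span.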

Quantizing the weights, biases, and activations of layers to reduced-precision scaled integer data types. You can then generate C/C++, CUDA, or HDL code from this quantized network for GPU, FPGA, or CPU deployment.
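Scaled integer quantization maps each real value x to an integer q with x ≈ q · 2^-f. The sketch below assumes signed 8-bit integers with power-of-two (binary-point) scaling, where the fraction length f is the largest value that keeps the observed maximum magnitude representable; see Data Types and Scaling for Quantization of Deep Neural Networks for the scheme the toolbox actually uses. The function names are hypothetical, and Python's `round` (round half to even) stands in for whatever rounding mode a real target uses.

```python
import math

INT8_MIN, INT8_MAX = -128, 127


def fraction_length(values):
    """Largest integer f such that max|x| * 2**f <= 127 (assumed power-of-two scaling)."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 0
    return math.floor(math.log2(INT8_MAX / max_abs))


def quantize(values, f):
    """Scale by 2**f, round to the nearest integer, and saturate to the int8 range."""
    scale = 2.0 ** f
    return [max(INT8_MIN, min(INT8_MAX, round(v * scale))) for v in values]


def dequantize(q, f):
    """Map int8 codes back to real values: x ~= q * 2**-f."""
    return [x / 2.0 ** f for x in q]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.9]   # hypothetical layer weights
    f = fraction_length(weights)
    q = quantize(weights, f)
    print(f, q)                        # prints 6 [32, -64, 16, 58]
    print(dequantize(q, f))
```

With f = 6, the step size is 2^-6, so in-range values are reconstructed to within half a step (2^-7); values larger than 127 · 2^-6 would saturate to 127.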

Analyzing your network for compression using the Deep Network Designer app.

For a detailed overview of the compression techniques available in Deep Learning Toolbox Model Compression Library, see Reduce Memory Footprint of Deep Neural Networks.

Functions

Apps

| Deep Network Quantizer | Quantize deep neural network to 8-bit scaled integer data types |

Topics

Overview

- Reduce Memory Footprint of Deep Neural Networks

Learn about neural network compression techniques, including pruning, projection, and quantization.
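A back-of-the-envelope estimate shows how pruning and quantization combine to shrink a layer. The sketch below counts the learnable parameters of a 2-D convolution layer and the bytes needed to store them at a given bit width. The layer sizes and function names are hypothetical, and the estimate ignores scaling metadata and any overhead of the deployed format.

```python
def conv2d_params(kh, kw, c_in, c_out, bias=True):
    """Learnable parameters of a 2-D convolution layer: kh*kw*c_in*c_out weights plus c_out biases."""
    return kh * kw * c_in * c_out + (c_out if bias else 0)


def storage_bytes(num_params, bits):
    """Bytes needed to store num_params values at the given bit width, rounded up."""
    return (num_params * bits + 7) // 8


if __name__ == "__main__":
    full = conv2d_params(3, 3, 64, 128)    # hypothetical 3x3 layer, 64 -> 128 channels
    pruned = conv2d_params(3, 3, 64, 64)   # same layer with half of its filters removed
    print(storage_bytes(full, 32))         # 295424 bytes as single-precision floats
    print(storage_bytes(full, 8))          # 73856 bytes as 8-bit integers
    print(storage_bytes(pruned, 8))        # 36928 bytes after pruning and quantization
```

In this example, quantizing from 32-bit floats to 8-bit integers cuts storage by 4×, and pruning half of the filters halves it again; in a real network, pruning a layer's filters also shrinks the input channels of the layer that follows.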

Pruning

- Analyze and Compress 1-D Convolutional Neural Network

Analyze a 1-D convolutional network for compression and compress it using Taylor pruning and projection. (since R2024b)

- Parameter Pruning and Quantization of Image Classification Network

Reduce the size of a network by pruning and quantizing its parameters.

- Prune Image Classification Network Using Taylor Scores

This example shows how to reduce the size of a deep neural network using Taylor pruning. By using the taylorPrunableNetwork function to remove convolution layer filters, you can reduce the overall network size and increase the inference speed.

- Prune Filters in a Detection Network Using Taylor Scores

This example shows how to reduce network size and increase inference speed by pruning convolutional filters in a you only look once (YOLO) v3 object detection network.

- Prune and Quantize Convolutional Neural Network for Speech Recognition

Compress a convolutional neural network (CNN) to prepare it for deployment on an embedded system.

Projection and Knowledge Distillation

- Compress Neural Network Using Projection

This example shows how to compress a neural network using projection and principal component analysis.

- Evaluate Code Generation Inference Time of Compressed Deep Neural Network

This example shows how to compare the inference time of a compressed deep neural network for battery state of charge estimation. (since R2023b)

- Train Smaller Neural Network Using Knowledge Distillation

This example shows how to reduce the memory footprint of a deep learning network by using knowledge distillation. (since R2023b)
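Knowledge distillation trains a small student network to match the softened outputs of a larger teacher. The following is a minimal Python sketch of one common formulation of the loss: a weighted sum of cross-entropy against the true label and the Kullback-Leibler divergence between teacher and student softmax outputs at temperature T, scaled by T². The weighting `alpha`, the temperature, and the function names are assumptions for illustration; the loss used in the linked example may differ.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log(softmax(logits / temperature))."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * CE(label, student) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    # Hard-label term: ordinary cross-entropy at temperature 1.
    hard = -log_softmax(student_logits)[label]
    # Soft-label term: KL divergence between the softened teacher and student outputs.
    log_t = log_softmax(teacher_logits, temperature)
    log_s = log_softmax(student_logits, temperature)
    soft = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_t, log_s))
    # Scaling by T^2 keeps the soft-term gradients on a scale comparable to the hard term.
    return alpha * hard + (1.0 - alpha) * temperature ** 2 * soft


if __name__ == "__main__":
    teacher = [5.0, 1.0, -2.0]   # hypothetical logits from a large network
    student = [2.0, 1.5, 0.0]    # hypothetical logits from a small network
    print(distillation_loss(student, teacher, label=0))
```

When the student reproduces the teacher's logits exactly, the soft term vanishes and only the weighted cross-entropy remains; a higher temperature exposes more of the teacher's information about the relative likelihood of the wrong classes.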

Quantization

- Quantization of Deep Neural Networks

Understand the effects of quantization and how to visualize the dynamic ranges of network convolution layers.

- Data Types and Scaling for Quantization of Deep Neural Networks

Understand the effects of quantization and how to visualize the dynamic ranges of network convolution layers.

- Quantization Workflow Prerequisites

Products required for the quantization of deep learning networks.

- Supported Layers for Quantization

Deep neural network layers that are supported for quantization.

- Prepare Data for Quantizing Networks

Supported datastores for quantization workflows.

- Quantize Multiple-Input Network Using Image and Feature Data

Quantize a multiple-input network using image and feature data.

- Export Quantized Networks to Simulink and Generate Code

Export a quantized neural network to Simulink and generate code from the exported model.
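Visualizing the dynamic range of a layer amounts to binning its values by power of two and comparing those bins with the range an 8-bit word can represent. The following is a minimal Python sketch, assuming signed 8-bit power-of-two scaling with fraction length f: values whose bin lies below -f fall under one quantization step (2^-f) and are represented coarsely or as zero, while values in bins above 6 - f always saturate. The function names are hypothetical, and this sketch does not reproduce the Deep Network Quantizer app's implementation.

```python
import math
from collections import Counter


def log2_bins(values):
    """Histogram of nonzero values keyed by floor(log2(|x|)), their power-of-two bin."""
    return Counter(math.floor(math.log2(abs(v))) for v in values if v != 0)


def representable_window(f, word_length=8):
    """(lowest, highest) power-of-two bins covered by a signed word with fraction length f.

    The smallest step is 2**-f (bin -f); the largest value, (2**(word_length-1) - 1) * 2**-f,
    lies in bin word_length - 2 - f.
    """
    return -f, word_length - 2 - f


def range_report(values, f, word_length=8):
    """Return the histogram plus counts of values below one step and values that saturate."""
    lo, hi = representable_window(f, word_length)
    bins = log2_bins(values)
    underflow = sum(c for b, c in bins.items() if b < lo)
    overflow = sum(c for b, c in bins.items() if b > hi)
    return bins, underflow, overflow


if __name__ == "__main__":
    weights = [1.5, 0.3, 0.001, 3.0, -0.02]   # hypothetical layer weights
    bins, under, over = range_report(weights, f=6)
    for b in sorted(bins):
        print(f"2^{b}: {'#' * bins[b]}")
    print("below one step:", under, "saturating:", over)
```

Choosing f trades one failure mode for the other: increasing f by one halves the step size but also halves the largest representable magnitude, so the bins shift one position toward saturation.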

Quantization for GPU Target

- Generate INT8 Code for Deep Learning Networks (GPU Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Quantize Residual Network Trained for Image Classification and Generate CUDA Code

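This example shows how to quantize the learnable parameters in the convolution layers of a deep learning neural network that has residual connections and has been trained for image classification with CIFAR-10 data.

- Quantize Layers in Object Detectors and Generate CUDA Code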

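This example shows how to generate CUDA® code for an SSD vehicle detector and a YOLO v2 vehicle detector that perform inference computations in 8-bit integers for the convolutional layers.

- Quantize Semantic Segmentation Network and Generate CUDA Code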

Quantize a convolutional neural network trained for semantic segmentation and generate CUDA code.

Quantization for FPGA Target

- Quantize Network for FPGA Deployment (Deep Learning HDL Toolbox)

Reduce the memory footprint of a deep neural network by quantizing the weights, biases, and activations of convolution layers to 8-bit scaled integer data types. This example shows how to use Deep Learning Toolbox Model Compression Library and Deep Learning HDL Toolbox to deploy the int8 network to a target FPGA board.

- Classify Images on FPGA Using Quantized Neural Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized deep convolutional neural network (CNN) to an FPGA. In the example, you use the pretrained ResNet-18 CNN to perform transfer learning and quantization. You then deploy the quantized network and use MATLAB® to retrieve the prediction results.

- Classify Images on FPGA by Using Quantized GoogLeNet Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized GoogLeNet network to classify an image. The example uses the pretrained GoogLeNet network to demonstrate transfer learning, quantization, and deployment of the quantized network. Quantization reduces the memory requirement of a deep neural network by quantizing the weights, biases, and activations of network layers to 8-bit scaled integer data types. Use MATLAB® to retrieve the prediction results.

Quantization for CPU Target

- Generate int8 Code for Deep Learning Networks (MATLAB Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Generate INT8 Code for Deep Learning Network on Raspberry Pi (MATLAB Coder)

Generate code for a deep learning network that performs inference computations in 8-bit integers.

- Compress Image Classification Network for Deployment to Resource-Constrained Embedded Devices

This example shows how to reduce the memory footprint and computation requirements of an image classification network for deployment on resource-constrained embedded devices such as the Raspberry Pi™.