evaluateObjectDetection

Syntax

Description

metrics = evaluateObjectDetection(detectionResults,groundTruthData) evaluates the object detection results detectionResults against the labeled ground truth groundTruthData and returns various metrics.

metrics = evaluateObjectDetection(detectionResults,groundTruthData,threshold)

metrics = evaluateObjectDetection(___,Name=Value) specifies options using one or more name-value arguments. For example, AdditionalMetrics="AOS" includes average orientation similarity metrics in the output.

Examples

Input Arguments

Name-Value Arguments

Output Arguments

More About

Tips

To evaluate model performance across different levels of localization accuracy and fine-tune an object detector, compute metrics at multiple overlap thresholds. Overlap thresholds typically range from 0.5 to 0.95 during evaluation. For example, set the overlap (IoU) threshold value to 0.5 to accept moderate overlap accuracy, and set the value to 0.95 to require very high localization accuracy. You can use the overlap threshold to tune object detector performance in several ways.

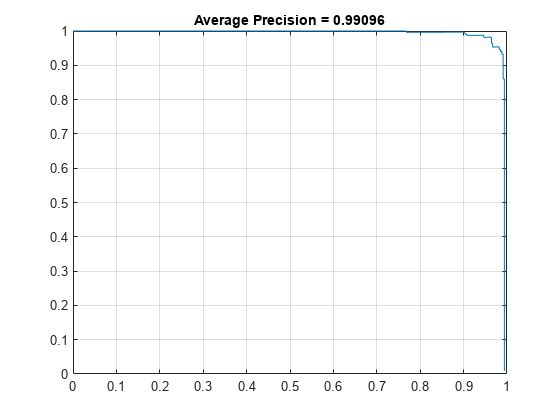

By evaluating common metrics, such as precision and recall, at different overlap thresholds, you can evaluate the tradeoff between detecting more objects (higher recall) and ensuring those detections are accurate (higher precision).

By comparing model performance at a lower IoU threshold, such as 0.5, with performance at a higher threshold, such as 0.95, you can determine how well the detector identifies objects (detection) relative to how precisely it localizes them (bounding box accuracy).

By computing metrics such as the mean average precision (mAP) over multiple overlap thresholds, you can produce a single performance figure that accounts for both detection and localization accuracy, enabling you to compare different detection models.
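The effect of the overlap threshold on precision and recall can be illustrated with a small, self-contained sketch. This is not how evaluateObjectDetection is implemented. It uses hypothetical toy boxes and scores, assumes boxes in [x y width height] format, assumes a simple greedy matching of detections to ground truth in descending score order, and counts every detection regardless of score (a single operating point rather than a full precision-recall curve). All variable names are illustrative only.

```matlab
% Toy data (hypothetical values), boxes in [x y width height] format.
gtBoxes   = [10 10 50 50; 100 100 40 40; 200 50 60 30];
detBoxes  = [12 12 50 50; 105 105 40 40; 300 300 20 20; 200 50 60 30];
detScores = [0.9; 0.8; 0.7; 0.6];

% Overlap (IoU) thresholds to sweep.
thresholds = 0.5:0.05:0.95;

% IoU of every detection (rows) against every ground truth box (columns).
numDet = size(detBoxes,1);
numGT  = size(gtBoxes,1);
iou = zeros(numDet,numGT);
for i = 1:numDet
    for j = 1:numGT
        d = detBoxes(i,:);
        g = gtBoxes(j,:);
        iw = max(0, min(d(1)+d(3), g(1)+g(3)) - max(d(1), g(1)));
        ih = max(0, min(d(2)+d(4), g(2)+g(4)) - max(d(2), g(2)));
        inter = iw*ih;
        iou(i,j) = inter / (d(3)*d(4) + g(3)*g(4) - inter);
    end
end

% Visit detections from highest to lowest score (assumed matching rule).
[~,order] = sort(detScores,"descend");

precision = zeros(size(thresholds));
recall    = zeros(size(thresholds));
for k = 1:numel(thresholds)
    matched = false(1,numGT);   % ground truth boxes already claimed
    tp = 0;                     % true positives at this threshold
    for i = order'
        candidates = iou(i,:);
        candidates(matched) = 0;        % each ground truth box matches once
        [best,j] = max(candidates);
        if best >= thresholds(k)
            matched(j) = true;
            tp = tp + 1;
        end
    end
    precision(k) = tp/numDet;   % fraction of detections that are correct
    recall(k)    = tp/numGT;    % fraction of ground truth boxes found
end

disp(table(thresholds',precision',recall', ...
    VariableNames=["Threshold","Precision","Recall"]))
```

With this toy data, all three ground truth boxes are matched at a threshold of 0.5, but only the perfectly aligned box still counts at 0.95, so both precision and recall drop as the threshold rises. Averaging a per-threshold metric over the whole sweep is the idea behind reporting a single figure across multiple overlap thresholds.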

To learn how to compute metrics at a range of overlap thresholds to tune detector performance, see the "Precision and Recall for a Single Class" section of the Multiclass Object Detection Using YOLO v2 Deep Learning example.

Version History

Introduced in R2023b

See Also

objectDetectionMetrics | yoloxObjectDetector | yolov4ObjectDetector | yolov3ObjectDetector | yolov2ObjectDetector | ssdObjectDetector | boxLabelDatastore