Extract Key Scenario Events from Recorded Sensor Data

This example shows how to extract key scenario events by using recorded sensor data. In this example, you extract events such as left turns, right turns, accelerations, decelerations, lane changes, and cut-ins from ego and target actor trajectories.

Autonomous systems require validation on safety-critical real-world scenarios. When validating such a system using scenarios generated from real-world sensor data, you must ensure the scenarios include critical events. In this example, you:

Load, explore, and visualize actor track list data.

Filter actor tracks by removing non-ego tracks that are far from the ego vehicle.

Extract trajectories from the filtered actor tracks, and visualize them.

Segment trajectories, and extract key actor events.

Visualize the classified actor events.

Load and Visualize Sensor Data

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check if the support package is installed. If it is not installed, install it using the instructions in Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Download a ZIP file containing camera video, GPS data, and vehicle track list data, and then unzip it. Load the data into the workspace by using the helperLoadTrajectoryAndCameraData helper function. The function returns this data:

camData - Camera data, returned as a structure. The structure contains images, camera intrinsic parameters, and timestamps associated with each image frame.

egoTrajectory - Ego actor GPS trajectory in the world frame, returned as a waypointTrajectory (Sensor Fusion and Tracking Toolbox) object.

trackList - Vehicle track list in the ego frame, returned as an actorTracklist object.

Note: For more information on converting a track list from a custom format to the actorTracklist format used by this example, see the helperLoadTrajectoryAndCameraData helper function, included with this example.

dataFolder = tempdir;
dataFileName = "PolysyncCutIn.zip";
url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFileName;
filePath = fullfile(dataFolder,dataFileName);
if ~isfile(filePath)
    websave(filePath,url)
end
unzip(filePath,dataFolder)
dataDir = fullfile(dataFolder,"PolysyncCutIn");
[camData,egoTrajectory,trackList] = helperLoadTrajectoryAndCameraData(dataDir)

camData = struct with fields:

images: {392×1 cell}

numFrames: 392

imagesHeight: 480

imagesWidth: 640

camProjection: [3×4 double]

timeStamps: [392×1 double]

egoTrajectory =

waypointTrajectory with properties:

SampleRate: 100

SamplesPerFrame: 1

Waypoints: [392×3 double]

TimeOfArrival: [392×1 double]

Velocities: [392×3 double]

Course: [392×1 double]

GroundSpeed: [392×1 double]

ClimbRate: [392×1 double]

Orientation: [392×1 quaternion]

AutoPitch: 0

AutoBank: 0

ReferenceFrame: 'NED'

trackList =

actorTracklist with properties:

TimeStamp: [392×1 double]

TrackIDs: {392×1 cell}

ClassIDs: {392×1 cell}

Position: {392×1 cell}

Dimension: {392×1 cell}

Orientation: {392×1 cell}

Velocity: {392×1 cell}

Speed: {392×1 cell}

StartTime: 0

EndTime: 19.5457

NumSamples: 392

UniqueTrackIDs: [15×1 string]

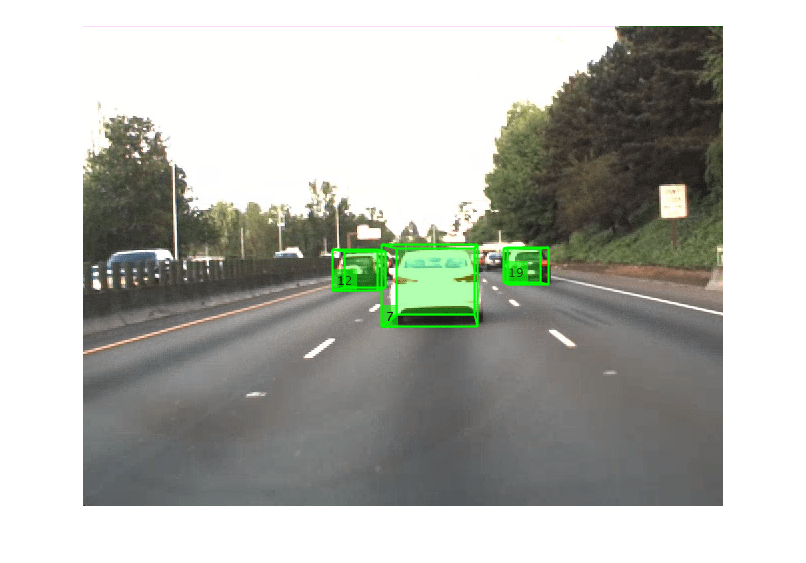

Visualize the camera images and overlay the tracks by using the helperVisualizeTrackData helper function.

helperVisualizeTrackData(camData,trackList);

Trajectories are collections of timestamps and their corresponding waypoints. In this example, you use an ego vehicle trajectory generated from GPS data. For more information on how to obtain an accurate ego vehicle trajectory, see the Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation example. To obtain non-ego actor trajectories, you must detect and track the vehicles present in the sensor field of view. For more information on how to extract actor track list information from raw sensor data, see these examples:

Extract 3D Vehicle Information from Recorded Monocular Camera Data for Scenario Generation

Extract Vehicle Track List from Recorded Camera Data for Scenario Generation

Fuse Prerecorded Lidar and Camera Data to Generate Vehicle Track List for Scenario Generation

Filter Actor Tracks

Depending on the sensor field of view, the track list can contain multiple actor tracks that are far from the ego vehicle. To remove such tracks and reduce the search space for key actor events, define a region of interest (ROI) around the ego vehicle. Specify maximum values for the lateral and longitudinal distance from the ego vehicle to define the ROI. Then, identify and select the candidate tracks that come close to the ego vehicle.

% Specify distance thresholds to form an ROI around the ego vehicle.
maxLateralDistance = 5; % Units are in meters.
maxLongitudinalDistance = 20; % Units are in meters.

% Identify candidate tracks that come close to the ego vehicle.
trackIDs = trackList.UniqueTrackIDs;
candidateTrackIDs = [];
for i = 1:numel(trackIDs)
    trackInfo = readData(trackList,TrackIDs=trackIDs(i));
    pos = cell2mat(cellfun(@(x) x.Position,trackInfo.ActorInfo,UniformOutput=false));
    % Keep tracks that come within both the longitudinal and lateral thresholds.
    isCandidate = any(abs(pos(:,1)) < maxLongitudinalDistance) && any(abs(pos(:,2)) < maxLateralDistance);
    if isCandidate
        candidateTrackIDs = vertcat(candidateTrackIDs,trackIDs(i)); %#ok<AGROW>
    end
end

% Create a copy of the track list, and remove the tracks that are not candidates.
candidateTrackList = copy(trackList);
removeData(candidateTrackList,TrackIDs=setdiff(trackIDs,candidateTrackIDs))
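To see the filtering idea in isolation, consider this minimal sketch, which does not require any toolbox. The track IDs and positions are synthetic values made up for illustration, and the per-sample rectangular test is one possible ROI rule, not necessarily the exact rule this example applies.

```matlab
% Synthetic ego-frame positions [x y] in meters (hypothetical data).
% x is the longitudinal (forward) offset and y is the lateral offset.
posA = [30 1; 15 1.5; 8 1];      % approaches the ego vehicle
posB = [60 12; 55 12; 50 12];    % stays far away
posC = [10 9; 10 8; 10 7];       % close ahead, but laterally distant
tracks = struct("ID",{"A","B","C"},"Position",{posA,posB,posC});

maxLateralDistance = 5;          % meters
maxLongitudinalDistance = 20;    % meters

% Keep a track if at least one sample lies inside the rectangular ROI.
isCandidate = false(1,numel(tracks));
for k = 1:numel(tracks)
    pos = tracks(k).Position;
    inROI = abs(pos(:,1)) < maxLongitudinalDistance & ...
        abs(pos(:,2)) < maxLateralDistance;
    isCandidate(k) = any(inROI);
end
candidateIDs = [tracks(isCandidate).ID];
```

In this sketch, only track "A" has a sample that satisfies both thresholds at the same time, so it is the only candidate.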

Extract and Visualize Trajectories

Convert the tracks in candidateTrackList from the ego frame to the world frame by using the actorprops function. The function stores the trajectories as CSV files and returns the path to the directory that contains them as csvLoc. The ego trajectory is stored in the file ego.csv, and the non-ego actor trajectories are stored in files named after the track IDs of the respective actors. Each CSV file contains timestamps and the corresponding waypoints in the form [x y z]. These waypoints are in the right-handed Cartesian world coordinate system defined in ISO 8855, where the z-axis points up from the ground. Units are in meters. For more information on coordinate systems, see Coordinate Systems in Automated Driving Toolbox.

[nonEgoActorInfo,csvLoc] = actorprops(candidateTrackList,egoTrajectory,SmoothWaypoint=@waypointsmoother);
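Conceptually, converting a position from the ego frame to the world frame is a rotation by the ego yaw followed by a translation by the ego position. This sketch shows that idea for a single planar point. It is not the actorprops implementation, and the pose and position values are hypothetical. It assumes that yaw is measured counterclockwise from the world x-axis.

```matlab
% Hypothetical ego pose in the world frame: position [x y] in meters and
% yaw in degrees, measured counterclockwise from the world x-axis.
egoPosition = [26.0 13.5];
egoYaw = 90;

% Hypothetical target position in the ego frame: 10 m ahead, 2 m to the left.
targetInEgo = [10 2];

% Rotate the ego-frame offset by the ego yaw, then translate by the ego position.
R = [cosd(egoYaw) -sind(egoYaw); sind(egoYaw) cosd(egoYaw)];
targetInWorld = egoPosition + (R*targetInEgo.').';
```

With the ego vehicle heading along the world y-axis, the target that is 10 m ahead and 2 m to the left lands at [24 23.5] in the world frame.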

Read the generated CSV files as matrices by using the readmatrix function.

csvFiles = dir(strcat(csvLoc,filesep,"*.csv"));
numCSVs = numel(csvFiles);
targets = cell(numCSVs-1,1);
targetIDs = strings(numCSVs-1,1);
j = 1;
for i = 1:numCSVs
    % Read CSV file as a matrix.
    waypointInfo = readmatrix(fullfile(csvFiles(i).folder,csvFiles(i).name));
    waypointInfo = waypointInfo(:,1:4);
    if contains(csvFiles(i).name,"ego")
        ego = waypointInfo
    else
        targets{j} = waypointInfo;
        [~,targetIDs(j)] = fileparts(csvFiles(i).name);
        j = j + 1;
    end
end

ego = 392×4

0 26.2858 13.6014 0.5655

0.0498 26.6576 13.7840 0.5787

0.0996 27.0345 13.9674 0.5920

0.1497 27.4096 14.1514 0.6056

0.1995 27.7895 14.3360 0.6191

0.2495 28.1677 14.5212 0.6327

0.2995 28.5505 14.7024 0.6465

0.3505 28.9315 14.8843 0.6606

0.4002 29.3108 15.0668 0.6747

0.4503 29.6946 15.2499 0.6886

0.4998 30.0767 15.4336 0.7024

0.5505 30.4631 15.6179 0.7162

0.6012 30.8477 15.8028 0.7301

0.6503 31.2365 15.9839 0.7440

0.7000 31.6236 16.1657 0.7580

⋮

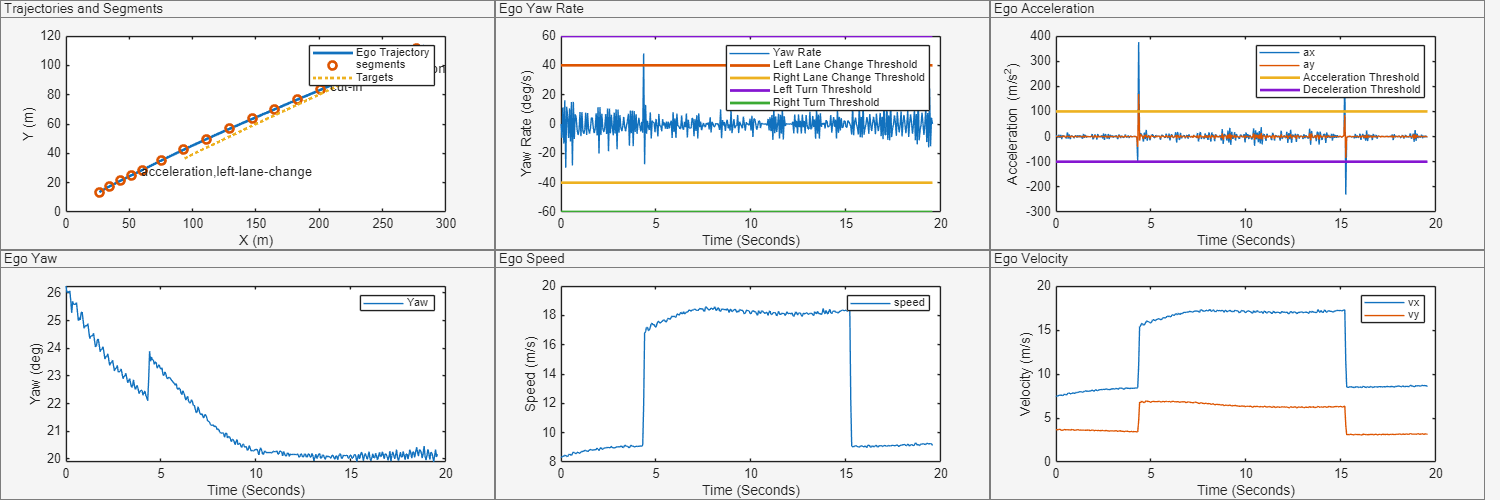

Visualize the extracted trajectories and ego vehicle yaw rate, acceleration, yaw, speed, and velocity using the helperPlotTrajectoryData helper function. Use the visualization to better understand the kinematic state of the ego vehicle and the trajectories of the targets around the ego vehicle. In the next section, you use these visualizations to help you customize a set of predefined rules to extract key actor events.

helperPlotTrajectoryData(ego,targets)
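If you want to inspect the kinematic state yourself, you can estimate it from a [timestamp x y z] waypoint matrix by using finite differences. This is a minimal sketch on synthetic waypoints. It is not how the helperPlotTrajectoryData helper function computes these quantities, and real GPS data typically needs smoothing before you differentiate it.

```matlab
% Synthetic [timestamp x y z] waypoints (hypothetical data): constant
% 2 m/s^2 acceleration along a straight line at 45 degrees to the x-axis.
t = (0:0.05:2).';
s = t.^2;                                   % distance traveled, 0.5*a*t^2 with a = 2
wp = [t s*cosd(45) s*sind(45) zeros(size(t))];

% Finite-difference estimates of the kinematic state.
vx = gradient(wp(:,2),wp(:,1));             % meters per second
vy = gradient(wp(:,3),wp(:,1));             % meters per second
speed = hypot(vx,vy);                       % meters per second
yaw = unwrap(atan2(vy,vx));                 % radians
yawRate = rad2deg(gradient(yaw,wp(:,1)));   % degrees per second
accel = gradient(speed,wp(:,1));            % meters per second squared
```

The estimates at the first and last few samples use one-sided differences and are less accurate, so consider ignoring them when you tune thresholds.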

Segment Trajectories to Extract Key Actor Events

Trajectories represent the positions of the actors in a scenario over time. State parameters in trajectories exhibit different patterns in different scenarios. In this example, you learn how to apply a rule-based algorithm to segment a trajectory into key actor events based on the state parameters. The algorithm segments trajectories and extracts key events in these steps:

Divide the trajectory into smaller segments based on a fixed time interval.

Apply predefined rules to each small segment to identify key events.

You can configure these rules to extract the required events for your custom data.
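The two steps can be sketched on synthetic data as follows. This is an illustration of the fixed-window, rule-based idea only, not the implementation of the helper function used in this example. The acceleration signal, the threshold value, and the event labels are hypothetical.

```matlab
% Synthetic ego state sampled at 10 Hz (hypothetical data): cruise for
% 2 s, then accelerate at 1.5 m/s^2 for 2 s.
t = (0:39).'/10;                            % seconds
accel = [zeros(20,1); 1.5*ones(20,1)];      % meters per second squared

timeWindow = 1;                 % segment duration in seconds
accelerationThreshold = 0.5;    % hypothetical threshold, meters per second squared

% Step 1: assign each sample to a fixed-duration segment.
segmentIdx = floor(t/timeWindow) + 1;
numSegments = max(segmentIdx);

% Step 2: apply a rule to the mean acceleration of each segment.
labels = strings(numSegments,1);
for k = 1:numSegments
    meanAccel = mean(accel(segmentIdx == k));
    if meanAccel > accelerationThreshold
        labels(k) = "acceleration";
    elseif meanAccel < -accelerationThreshold
        labels(k) = "deceleration";
    else
        labels(k) = "none";
    end
end
```

A shorter time window localizes events more precisely but makes each segment more sensitive to noise in the state estimates.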

Extract key actor events by using the helperExtractTrajectoryEvents helper function. The function accepts these inputs to identify key events using a rule-based approach:

ego - Ego actor waypoints, specified as a matrix. Each row in the matrix is in the form [timestamp,x,y,z]. Timestamp units are in seconds, and x-, y-, and z-position units are in meters.

targets - Non-ego actor waypoints, specified as a cell array. Each cell contains a matrix of the form [timestamp,x,y,z].

TimeWindow - Time window used to create smaller trajectory segments, specified as a scalar. This value sets the duration of the individual trajectory segments. Units are in seconds.

ClassificationParameters - Parameters by which to classify events, specified as a structure. The structure contains various rule-based parameters, such as acceleration thresholds, yaw thresholds, and distance thresholds. You can customize these parameters for your data, and add or remove parameters to create custom rules.

EgoEventClassfierFcn - Ego event classifier function, specified as a function handle. The function used in this example uses the kinematic state of the ego actor for each trajectory segment to classify ego events. The egoSegmentClassifier function supports these event classes: left turn, right turn, acceleration, deceleration, and lane change. You can specify a custom function to classify more event types.

NonEgoEventClassifierFcn - Non-ego event classifier function, specified as a function handle. The function used in this example uses the kinematic state of the ego and non-ego actors for each trajectory segment to classify interactions between ego and non-ego actors. The cutInClassifier function supports the cut-in event class. You can specify a custom function to classify more event types.

The helperExtractTrajectoryEvents function returns these outputs:

eventSummary - Classified event summary, returned as a structure containing ego events and the indices of the classified non-ego targets.

events - Trajectory event classifications, returned as a timetable containing ego and non-ego actor event classifications for each timestamp.

% Define parameters for trajectory segmentation.
params = struct;

% Parameters to classify acceleration or deceleration events.
params.AccelerationThreshold = 100; % Units are in meters per second squared.

% Parameters to classify lane change events.
params.YawThresholdForLaneChange = 2; % Units are in degrees.
params.YawRateThresholdForLaneChange = 40; % Units are in degrees per second.

% Parameters to classify left or right turn events.
params.YawRateThresholdForTurn = 60; % Units are in degrees per second.

% Parameters to classify cut in events.
params.LateralDistanceBefore = 1.5; % Units are in meters.
params.LateralDistanceAfter = 2; % Units are in meters.
params.LongitudinalDistance = 15; % Units are in meters.

tWindow = 1; % Units are in seconds.

% Classify actor trajectories into key events.
[eventSummary,events] = helperExtractTrajectoryEvents(ego,targets,TimeWindow=tWindow,ClassificationParameters=params, ...
    EgoEventClassfierFcn=@egoSegmentClassifier,NonEgoEventClassifierFcn=@cutInClassifier)

eventSummary = struct with fields:

EgoEvents: ["acceleration" "deceleration" "left-lane-change"]

KeyTargetIndices: 10

events=1954×2 timetable

Time EgoEvents NonEgoEvents

____________ ____________ ____________________________________________________________________________________________________________________________________________________________

0 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.01001 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.02002 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.03003 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.040041 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.050051 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.060061 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.070071 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.080081 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.090091 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.1001 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.11011 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.12012 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.13013 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.14014 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

0.15015 sec {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double} {0×0 double}

⋮

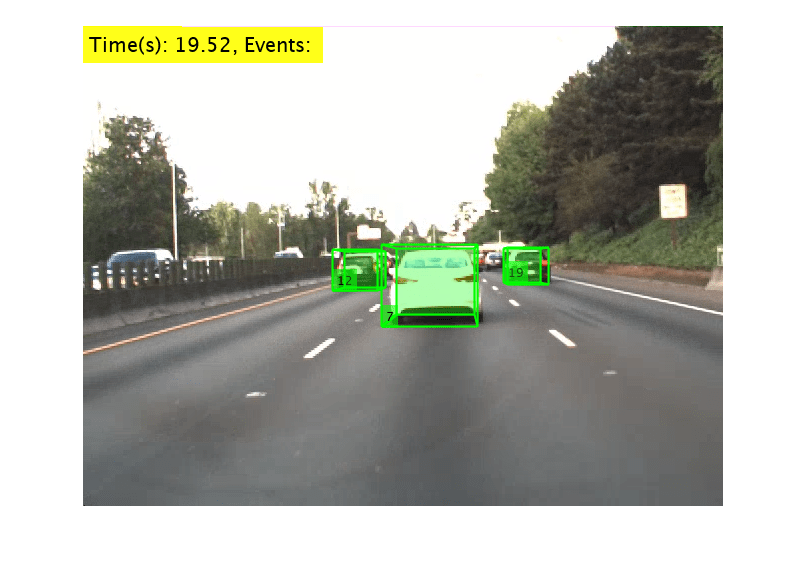

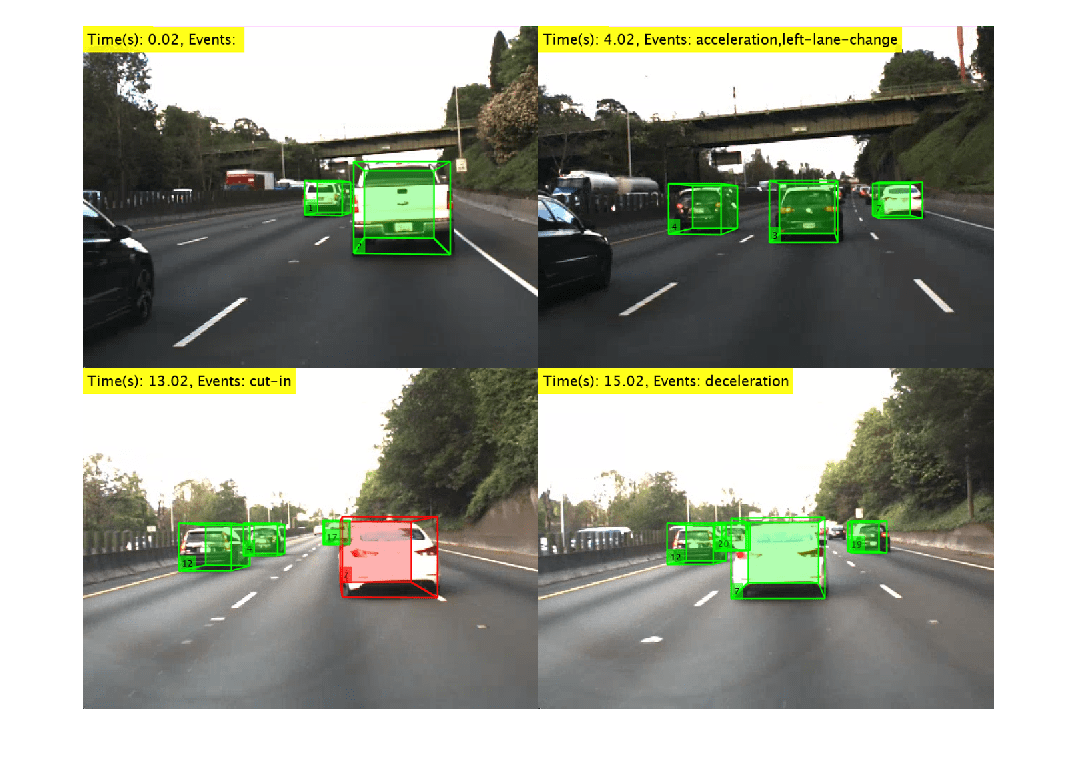
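To get a feel for how a distance-based rule can flag an interaction such as a cut-in, consider this minimal sketch. It is not the cutInClassifier function used in this example. The rule, the parameter names in the rule structure, and their values are all hypothetical, and they do not reproduce the semantics of the LateralDistanceBefore, LateralDistanceAfter, and LongitudinalDistance parameters.

```matlab
% Hypothetical rule parameters (not the values or semantics of the helper).
rule.MinLateralBefore = 1.5;   % meters, lateral offset at segment start
rule.MaxLateralAfter = 1.0;    % meters, lateral offset at segment end
rule.MaxLongitudinal = 15;     % meters, distance ahead at segment end

% Rule: the target starts beside the ego path and ends ahead of the ego
% vehicle, close to its path. rel is an N-by-2 matrix of [x y] positions
% of the target relative to the ego vehicle over one segment.
isCutIn = @(rel,p) abs(rel(1,2)) > p.MinLateralBefore && ...
    abs(rel(end,2)) < p.MaxLateralAfter && ...
    rel(end,1) > 0 && rel(end,1) < p.MaxLongitudinal;

% Synthetic segments: one target merges from the left, one stays in its lane.
merging = [12 3.5; 11.5 2.4; 11 1.2; 10.5 0.3];
parallel = [12 3.5; 12 3.5; 12 3.4; 12 3.5];

cutInDetected = [isCutIn(merging,rule) isCutIn(parallel,rule)];
```

Only the merging target satisfies all three conditions. A function with this kind of signature could be adapted into a custom classifier, provided it matches the interface that the helper function expects.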

Visualize Classified Actor Events

Visualize the trajectories and classified key actor events using the helperPlotTrajectoryData helper function. The function displays a plot of the ego and non-ego trajectories and their segments, as well as an individual plot for each classified event over time. The function also displays the image frame with overlaid actor tracks and timestamps.

% Visualize the trajectories and segments.

keyFrameIDs = [2 82 262 302];

keyFrames = helperPlotTrajectoryData(ego,targets,tWindow,eventSummary,events,params,camData,candidateTrackList,targetIDs,keyFrameIDs);

Create a montage of the keyFrames output to show classified actor trajectory events along with key camera frames for visual validation.

Time(s): 0.02 - The ego vehicle starts from the first lane from the right and moves forward with a nearly constant velocity. The velocity and straight path characteristics are also evident in the state plot from the helperPlotTrajectoryData function.

Time(s): 4.02 - The ego vehicle accelerates and changes lane toward its left. In the previous frame, the ego vehicle was in the rightmost lane, and in this frame, it moves into the middle lane. The lane change event is also evident in the yaw and yaw rate plots.

Time(s): 13.02 - A vehicle cuts in front of the ego vehicle from the left. This example identifies the cut in event and highlights the target vehicle in red.

Time(s): 15.02 - When the target vehicle is in front of the ego vehicle, the ego vehicle decelerates, and continues to move on a straight path forward.

figure
montage(keyFrames)

Summary

In this example, you learned how to extract key actor events by using actor trajectory information. You can analyze the actor events and generate photorealistic scenes and scenarios for the critical events by using the Scenario Builder for Automated Driving Toolbox support package. You can also use trajectory events for large-scale scenario annotation, for retrieving use-case-specific scenarios from large scenario databases, and for creating training data for deep-learning-based trajectory classification solutions.

See Also

Objects

waypointTrajectory (Navigation Toolbox) | actorTracklist | monoCamera

Topics

- Overview of Scenario Generation from Recorded Sensor Data

- Ego Vehicle Localization Using GPS and IMU Fusion for Scenario Generation

- Extract 3D Vehicle Information from Recorded Monocular Camera Data for Scenario Generation

- Extract Vehicle Track List from Recorded Lidar Data for Scenario Generation

- Fuse Prerecorded Lidar and Camera Data to Generate Vehicle Track List for Scenario Generation

- Extract Vehicle Track List from Recorded Camera Data for Scenario Generation