Automated Regression Model Selection with Bayesian and ASHA Optimization

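This example shows how to use the fitrauto function to automatically try a selection of regression model types with different hyperparameter values, given training predictor and response data. By default, the function uses Bayesian optimization to select and assess models. If your training data set contains many observations, you can use an asynchronous successive halving algorithm (ASHA) instead. After the optimization is complete, fitrauto returns the model, trained on the entire data set, that is expected to best predict the responses for new data. Finally, check the performance of the model on test data.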

Prepare Data

Load the sample data set NYCHousing2015, which includes 10 variables with information on the sales of properties in New York City in 2015. This example uses some of these variables to analyze the sale prices.

load NYCHousing2015

Instead of loading the sample data set NYCHousing2015, you can download the data from the NYC Open Data website and import it as follows.

folder = 'Annualized_Rolling_Sales_Update';
ds = spreadsheetDatastore(folder,"TextType","string","NumHeaderLines",4);
ds.Files = ds.Files(contains(ds.Files,"2015"));
ds.SelectedVariableNames = ["BOROUGH","NEIGHBORHOOD","BUILDINGCLASSCATEGORY","RESIDENTIALUNITS", ...
    "COMMERCIALUNITS","LANDSQUAREFEET","GROSSSQUAREFEET","YEARBUILT","SALEPRICE","SALEDATE"];
NYCHousing2015 = readall(ds);

Preprocess the data set to choose the predictor variables of interest. Some of the preprocessing steps match those in the example Train Linear Regression Model.

First, change the variable names to lowercase for readability.

NYCHousing2015.Properties.VariableNames = lower(NYCHousing2015.Properties.VariableNames);

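Next, remove samples with certain problematic values. For example, retain only those samples where at least one of the area measurements grosssquarefeet or landsquarefeet is nonzero. Assume that a saleprice of $0 indicates an ownership transfer without a cash consideration, and remove the samples with that saleprice value. Assume that a yearbuilt value of 1500 or less is a typo, and remove the corresponding samples.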

NYCHousing2015(NYCHousing2015.grosssquarefeet == 0 & NYCHousing2015.landsquarefeet == 0,:) = [];
NYCHousing2015(NYCHousing2015.saleprice == 0,:) = [];
NYCHousing2015(NYCHousing2015.yearbuilt <= 1500,:) = [];

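Convert the saledate variable, specified as a datetime array, into two numeric columns, MM (month) and DD (day), and then remove the saledate variable. Ignore the year values because all samples are from 2015.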

[~,NYCHousing2015.MM,NYCHousing2015.DD] = ymd(NYCHousing2015.saledate);
NYCHousing2015.saledate = [];
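As a small illustration of what ymd returns, the following sketch applies it to two made-up dates. The dates and the variable names d, y, m, and dd are assumptions for illustration and are not part of the data set.

```matlab
% Hypothetical sale dates (not taken from NYCHousing2015)
d = datetime(2015,[3 11],[14 2]);   % March 14 and November 2, 2015

% ymd returns the year, month, and day numbers as separate numeric arrays
[y,m,dd] = ymd(d);

disp([y; m; dd])                    % one column per date: year, month, day
```

Because every sample in the example is from 2015, the year output carries no information, which is why the example discards it with ~.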

The numeric values in the borough variable indicate the names of the boroughs. Change the variable to a categorical variable that uses the names.

NYCHousing2015.borough = categorical(NYCHousing2015.borough,1:5, ...
    ["Manhattan","Bronx","Brooklyn","Queens","Staten Island"]);
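The three-argument form of categorical maps each value in the value set to the category name at the same position. A minimal sketch on made-up codes follows; the codes vector and the names b and counts are hypothetical.

```matlab
% Hypothetical borough codes (not taken from NYCHousing2015)
codes = [1; 3; 5; 3];
names = ["Manhattan","Bronx","Brooklyn","Queens","Staten Island"];

% Code k is mapped to names(k); categories with no samples are still defined
b = categorical(codes,1:5,names);

% Count how many samples fall in each of the five categories
counts = countcats(b);
disp(table(categories(b),counts,'VariableNames',["Borough","Count"]))
```

Supplying the full value set 1:5 keeps all five boroughs as categories even when some of them do not occur in the data.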

The neighborhood variable has 254 categories. Remove this variable for simplicity.

NYCHousing2015.neighborhood = [];

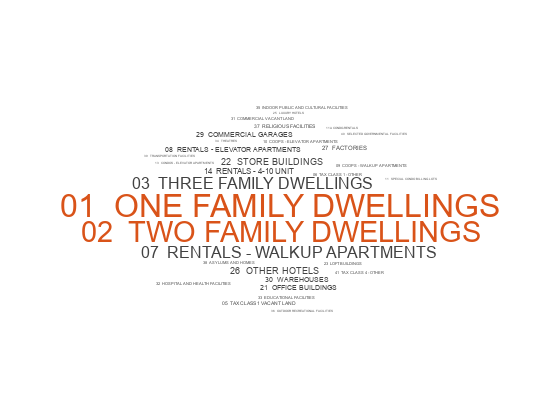

Convert the buildingclasscategory variable to a categorical variable, and explore the variable by using the wordcloud function.

NYCHousing2015.buildingclasscategory = categorical(NYCHousing2015.buildingclasscategory);
wordcloud(NYCHousing2015.buildingclasscategory);

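Assume that you are interested only in one-, two-, and three-family dwellings. Find the sample indices for these dwellings and delete the other samples. Then, change the buildingclasscategory variable to an ordinal categorical variable with integer-valued category names.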

idx = ismember(string(NYCHousing2015.buildingclasscategory), ...
    ["01 ONE FAMILY DWELLINGS","02 TWO FAMILY DWELLINGS","03 THREE FAMILY DWELLINGS"]);
NYCHousing2015 = NYCHousing2015(idx,:);
NYCHousing2015.buildingclasscategory = categorical(NYCHousing2015.buildingclasscategory, ...
    ["01 ONE FAMILY DWELLINGS","02 TWO FAMILY DWELLINGS","03 THREE FAMILY DWELLINGS"], ...
    ["1","2","3"],'Ordinal',true);

The buildingclasscategory variable now indicates the number of families in one dwelling.

Explore the response variable saleprice by using the summary function.

s = summary(NYCHousing2015);
s.saleprice

ans = struct with fields:
           Size: [24972 1]
           Type: 'double'
    Description: ''
          Units: ''
     Continuity: []
            Min: 1
         Median: 515000
            Max: 37000000
     NumMissing: 0

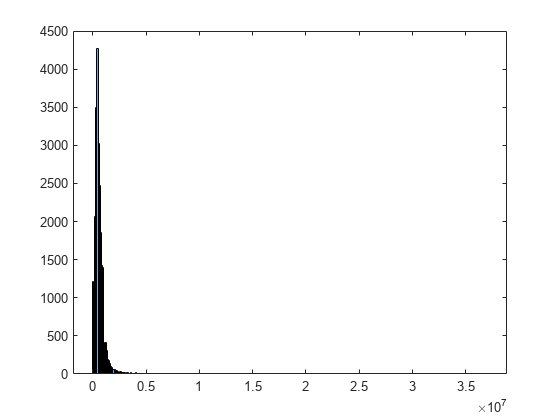

Create a histogram of the saleprice variable.

histogram(NYCHousing2015.saleprice)

Because the distribution of the saleprice values is right-skewed and all values are greater than 0, log transform the saleprice variable.

NYCHousing2015.saleprice = log(NYCHousing2015.saleprice);

Similarly, transform the grosssquarefeet and landsquarefeet variables. Add 1 to each variable before taking the logarithm, in case a value is equal to 0.

NYCHousing2015.grosssquarefeet = log(1 + NYCHousing2015.grosssquarefeet);
NYCHousing2015.landsquarefeet = log(1 + NYCHousing2015.landsquarefeet);
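The effect of the log(1 + x) transform can be checked on a few made-up values, as in the sketch below. The area values and the names logArea and recovered are hypothetical. The transform maps 0 to 0 rather than to -Inf, and it can be inverted with exp(x) - 1.

```matlab
% Hypothetical area values in square feet, including a zero
area = [0 1500 2400];

% Shifted log transform, as applied to the area variables in the example
logArea = log(1 + area);

% Invert the transform to recover values on the original scale
recovered = exp(logArea) - 1;

disp([area; logArea; recovered])
```

MATLAB also provides log1p and expm1, which compute the same quantities with better accuracy for values close to 0.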

Partition Data and Remove Outliers

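Partition the data set into a training set and a test set by using cvpartition. Use approximately 80% of the observations for the model selection and hyperparameter tuning process, and the other 20% to test the performance of the final model returned by fitrauto.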

rng("default") % For reproducibility of the partition
c = cvpartition(length(NYCHousing2015.saleprice),"Holdout",0.2);
trainData = NYCHousing2015(training(c),:);
testData = NYCHousing2015(test(c),:);
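To see how a holdout partition divides the observations, the following sketch partitions a hypothetical set of 1000 observations. The count n and the names cDemo, isTrain, and isTest are assumptions for illustration.

```matlab
rng("default")                      % for a repeatable split
n = 1000;                           % hypothetical number of observations

% Reserve about 20% of the observations for testing
cDemo = cvpartition(n,"Holdout",0.2);

% training and test return complementary logical index vectors
isTrain = training(cDemo);
isTest = test(cDemo);

fprintf("Training: %d observations, test: %d observations\n", ...
    cDemo.TrainSize,cDemo.TestSize);
```

Each observation belongs to exactly one of the two sets, so indexing a table with isTrain and isTest yields disjoint training and test tables.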

Identify and remove the outliers of saleprice, grosssquarefeet, and landsquarefeet from the training data by using the isoutlier function.

[priceIdx,priceL,priceU] = isoutlier(trainData.saleprice);
trainData(priceIdx,:) = [];
[grossIdx,grossL,grossU] = isoutlier(trainData.grosssquarefeet);
trainData(grossIdx,:) = [];
[landIdx,landL,landU] = isoutlier(trainData.landsquarefeet);
trainData(landIdx,:) = [];

Remove the outliers of saleprice, grosssquarefeet, and landsquarefeet from the test data by using the same lower and upper thresholds computed on the training data.

testData(testData.saleprice < priceL | testData.saleprice > priceU,:) = [];
testData(testData.grosssquarefeet < grossL | testData.grosssquarefeet > grossU,:) = [];
testData(testData.landsquarefeet < landL | testData.landsquarefeet > landU,:) = [];
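The pattern of computing the thresholds on the training data and reusing them on the test data can be sketched on small made-up vectors. The values and the names trainVals, testVals, lowerT, and upperT are hypothetical. By default, isoutlier flags values more than three scaled median absolute deviations from the median.

```matlab
% Hypothetical training and test values
trainVals = [10 11 12 11 10 12 11 50];
testVals = [9 11 48 12];

% Thresholds are computed from the training values only
[isOut,lowerT,upperT] = isoutlier(trainVals);
trainClean = trainVals(~isOut);

% Reuse the training thresholds on the test values
testClean = testVals(testVals >= lowerT & testVals <= upperT);

fprintf("Thresholds: [%.3f, %.3f]\n",lowerT,upperT);
```

Reusing the training thresholds keeps the test set from influencing the preprocessing, so the test data remains an independent check of the final model.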

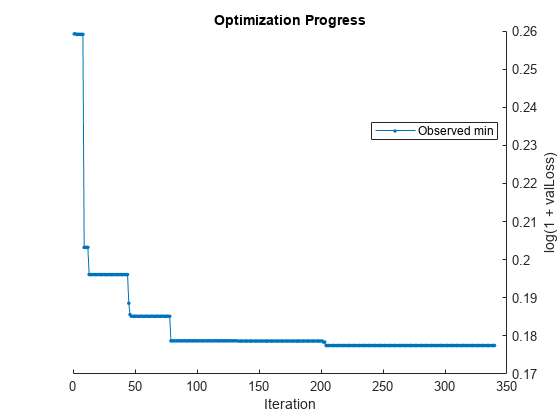

Use Automated Model Selection with Bayesian Optimization

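Find an appropriate regression model for the data in trainData by using fitrauto. By default, fitrauto uses Bayesian optimization to select models and their hyperparameter values, and computes the log(1 + valLoss) value for each model, where valLoss is the cross-validation mean squared error (MSE). fitrauto provides a plot of the optimization and an iterative display of the optimization results. For more information on how to interpret these results, see Verbose Display.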

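Specify to run the Bayesian optimization in parallel, which requires Parallel Computing Toolbox™. Because parallel timing is not reproducible, parallel Bayesian optimization does not necessarily yield reproducible results. Depending on the complexity of the optimization, this process can take some time, especially for larger data sets.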

bayesianOptions = struct("UseParallel",true);
[bayesianMdl,bayesianResults] = fitrauto(trainData,"saleprice", ...
    "HyperparameterOptimizationOptions",bayesianOptions);

Warning: Data set has more than 10000 observations. Because ASHA optimization often finds good solutions faster than Bayesian optimization for data sets with many observations, try specifying the 'Optimizer' field value as 'asha' in the 'HyperparameterOptimizationOptions' value structure.

Starting parallel pool (parpool) using the 'Processes' profile ... Connected to parallel pool with 6 workers. Copying objective function to workers... Done copying objective function to workers. Learner types to explore: ensemble, svm, tree Total iterations (MaxObjectiveEvaluations): 90 Total time (MaxTime): Inf |==========================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Estimated min | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | validation loss | | | |==========================================================================================================================================================| | 1 | 6 | Best | 0.25922 | 6.2857 | 0.25922 | 0.25922 | svm | BoxConstraint: 0.0055914 | | | | | | | | | | KernelScale: 0.0056086 | | | | | | | | | | Epsilon: 17.88 | | 2 | 6 | Best | 0.19314 | 38.13 | 0.19314 | 0.19658 | svm | BoxConstraint: 529.96 | | | | | | | | | | KernelScale: 813.67 | | | | | | | | | | Epsilon: 0.0014318 | | 3 | 6 | Accept | 0.19652 | 40.944 | 0.19314 | 0.19652 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 232 | | | | | | | | | | MinLeafSize: 8 | | 4 | 6 | Best | 0.18796 | 1.9986 | 0.18796 | 0.18796 | tree | MinLeafSize: 43 | | 5 | 6 | Accept | 0.19634 | 45.853 | 0.18796 | 0.18796 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 271 | | | | | | | | | | MinLeafSize: 53 | | 6 | 6 | Best | 0.18761 | 39.413 | 0.18761 | 0.18761 | svm | BoxConstraint: 23.501 | | | | | | | | | | KernelScale: 37.99 | | | | | | | | | | Epsilon: 0.0072166 | | 7 | 6 | Accept | 0.29931 | 20.977 | 0.18761 | 0.18761 | tree | MinLeafSize: 2 | | 8 | 6 | Accept | 0.202 | 34.705 | 0.18761 | 0.18761 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 246 | | | | | | | | | | MinLeafSize: 1114 | | 9 | 6 | Best | 
0.18737 | 55.486 | 0.18737 | 0.18761 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 297 | | | | | | | | | | MinLeafSize: 3220 | | 10 | 6 | Accept | 0.19582 | 32.49 | 0.18737 | 0.18741 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 247 | | | | | | | | | | MinLeafSize: 4243 | | 11 | 6 | Accept | 0.29931 | 20.54 | 0.18737 | 0.18741 | tree | MinLeafSize: 2 | | 12 | 6 | Accept | 0.25922 | 1.9202 | 0.18737 | 0.18741 | svm | BoxConstraint: 0.31228 | | | | | | | | | | KernelScale: 73.3 | | | | | | | | | | Epsilon: 2.1891 | | 13 | 6 | Accept | 0.25922 | 1.6937 | 0.18737 | 0.18741 | svm | BoxConstraint: 107.75 | | | | | | | | | | KernelScale: 414.93 | | | | | | | | | | Epsilon: 27.903 | | 14 | 6 | Best | 0.17764 | 156.66 | 0.17764 | 0.17768 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 275 | | | | | | | | | | MinLeafSize: 4 | | 15 | 6 | Accept | 0.18795 | 1.1238 | 0.17764 | 0.17768 | tree | MinLeafSize: 219 | | 16 | 6 | Best | 0.17762 | 161.46 | 0.17762 | 0.17765 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 299 | | | | | | | | | | MinLeafSize: 161 | | 17 | 6 | Accept | 0.19855 | 1 | 0.17762 | 0.17765 | tree | MinLeafSize: 895 | | 18 | 6 | Accept | 0.19644 | 33.735 | 0.17762 | 0.17765 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 208 | | | | | | | | | | MinLeafSize: 210 | | 19 | 6 | Accept | 0.18966 | 37.57 | 0.17762 | 0.17765 | svm | BoxConstraint: 18.072 | | | | | | | | | | KernelScale: 48.632 | | | | | | | | | | Epsilon: 0.014558 | | 20 | 6 | Accept | 0.18558 | 0.76697 | 0.17762 | 0.17765 | tree | MinLeafSize: 81 | |==========================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Estimated min | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | validation loss 
| | | |==========================================================================================================================================================| | 21 | 6 | Accept | 0.21098 | 1.3316 | 0.17762 | 0.17765 | tree | MinLeafSize: 12 | | 22 | 6 | Accept | 0.23354 | 36.341 | 0.17762 | 0.17765 | svm | BoxConstraint: 0.0045714 | | | | | | | | | | KernelScale: 31.869 | | | | | | | | | | Epsilon: 0.0072361 | | 23 | 6 | Best | 0.17748 | 120.76 | 0.17748 | 0.17804 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 227 | | | | | | | | | | MinLeafSize: 161 | | 24 | 6 | Accept | 0.27791 | 8.3041 | 0.17748 | 0.17804 | tree | MinLeafSize: 3 | | 25 | 6 | Accept | 0.20705 | 0.2638 | 0.17748 | 0.17804 | tree | MinLeafSize: 1381 | | 26 | 6 | Accept | 0.1853 | 50.557 | 0.17748 | 0.17806 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 218 | | | | | | | | | | MinLeafSize: 2260 | | 27 | 6 | Accept | 0.25951 | 4.7144 | 0.17748 | 0.17806 | tree | MinLeafSize: 4 | | 28 | 6 | Accept | 0.21851 | 23.951 | 0.17748 | 0.17842 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 239 | | | | | | | | | | MinLeafSize: 2731 | | 29 | 6 | Best | 0.17744 | 108.21 | 0.17744 | 0.1772 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 209 | | | | | | | | | | MinLeafSize: 12 | | 30 | 6 | Accept | 0.18572 | 93.637 | 0.17744 | 0.1772 | svm | BoxConstraint: 169.91 | | | | | | | | | | KernelScale: 27.071 | | | | | | | | | | Epsilon: 0.0098403 | | 31 | 6 | Accept | 0.23155 | 1.8938 | 0.17744 | 0.1772 | tree | MinLeafSize: 7 | | 32 | 6 | Accept | 0.25922 | 1.673 | 0.17744 | 0.1772 | svm | BoxConstraint: 404.64 | | | | | | | | | | KernelScale: 3.2648 | | | | | | | | | | Epsilon: 1.9718 | | 33 | 6 | Accept | 0.29931 | 17.896 | 0.17744 | 0.1772 | tree | MinLeafSize: 2 | | 34 | 6 | Accept | 0.23949 | 2.2754 | 0.17744 | 0.1772 | tree | MinLeafSize: 6 | | 35 | 6 | Accept | 0.25922 | 1.6811 | 0.17744 | 0.1772 | svm | BoxConstraint: 1.3089 | | 
| | | | | | | | KernelScale: 0.051591 | | | | | | | | | | Epsilon: 10.5 | | 36 | 6 | Accept | 0.19423 | 28.765 | 0.17744 | 0.1772 | svm | BoxConstraint: 111.04 | | | | | | | | | | KernelScale: 660.47 | | | | | | | | | | Epsilon: 0.011798 | | 37 | 6 | Accept | 0.19293 | 0.34422 | 0.17744 | 0.1772 | tree | MinLeafSize: 421 | | 38 | 6 | Accept | 0.178 | 80.632 | 0.17744 | 0.17728 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 200 | | | | | | | | | | MinLeafSize: 530 | | 39 | 6 | Accept | 0.21113 | 0.26389 | 0.17744 | 0.17728 | tree | MinLeafSize: 2018 | | 40 | 6 | Accept | 0.25922 | 0.079127 | 0.17744 | 0.17728 | tree | MinLeafSize: 9068 | |==========================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Estimated min | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | validation loss | | | |==========================================================================================================================================================| | 41 | 6 | Accept | 0.18554 | 0.51767 | 0.17744 | 0.17728 | tree | MinLeafSize: 107 | | 42 | 6 | Accept | 0.18555 | 0.53657 | 0.17744 | 0.17728 | tree | MinLeafSize: 90 | | 43 | 6 | Accept | 0.18613 | 0.54 | 0.17744 | 0.17728 | tree | MinLeafSize: 120 | | 44 | 6 | Accept | 0.18636 | 0.50797 | 0.17744 | 0.17728 | tree | MinLeafSize: 67 | | 45 | 6 | Accept | 0.186 | 0.49626 | 0.17744 | 0.17728 | tree | MinLeafSize: 119 | | 46 | 6 | Accept | 0.18566 | 0.56792 | 0.17744 | 0.17728 | tree | MinLeafSize: 75 | | 47 | 6 | Accept | 0.18516 | 0.49218 | 0.17744 | 0.17728 | tree | MinLeafSize: 85 | | 48 | 6 | Accept | 0.18613 | 0.50745 | 0.17744 | 0.17728 | tree | MinLeafSize: 120 | | 49 | 6 | Accept | 0.18597 | 0.53279 | 0.17744 | 0.17728 | tree | MinLeafSize: 73 | | 50 | 6 | Accept | 0.19384 | 0.75739 | 
0.17744 | 0.17728 | tree | MinLeafSize: 26 | | 51 | 6 | Accept | 0.22647 | 0.16018 | 0.17744 | 0.17728 | tree | MinLeafSize: 4214 | | 52 | 6 | Accept | 0.24354 | 16.868 | 0.17744 | 0.17741 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 213 | | | | | | | | | | MinLeafSize: 5333 | | 53 | 6 | Accept | 0.1874 | 0.54513 | 0.17744 | 0.17741 | tree | MinLeafSize: 164 | | 54 | 6 | Best | 0.17736 | 119.47 | 0.17736 | 0.17741 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 254 | | | | | | | | | | MinLeafSize: 330 | | 55 | 6 | Accept | 0.18978 | 0.42057 | 0.17736 | 0.17741 | tree | MinLeafSize: 289 | | 56 | 6 | Accept | 0.25922 | 2.0556 | 0.17736 | 0.17741 | svm | BoxConstraint: 0.093209 | | | | | | | | | | KernelScale: 416.84 | | | | | | | | | | Epsilon: 47.572 | | 57 | 6 | Accept | 0.18703 | 0.65344 | 0.17736 | 0.17741 | tree | MinLeafSize: 55 | | 58 | 6 | Accept | 0.1776 | 102.98 | 0.17736 | 0.17746 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 200 | | | | | | | | | | MinLeafSize: 1 | | 59 | 6 | Accept | 0.1776 | 103.17 | 0.17736 | 0.17735 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 201 | | | | | | | | | | MinLeafSize: 1 | | 60 | 6 | Accept | 0.20326 | 41.575 | 0.17736 | 0.17735 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 330 | | | | | | | | | | MinLeafSize: 1205 | |==========================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Estimated min | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | validation loss | | | |==========================================================================================================================================================| | 61 | 6 | Accept | 0.25922 | 2.6973 | 0.17736 | 0.17735 | svm | BoxConstraint: 0.0037301 | | 
| | | | | | | | KernelScale: 0.030368 | | | | | | | | | | Epsilon: 7.7463 | | 62 | 6 | Accept | 0.33688 | 57.891 | 0.17736 | 0.17735 | tree | MinLeafSize: 1 | | 63 | 6 | Accept | 0.1854 | 0.49429 | 0.17736 | 0.17735 | tree | MinLeafSize: 92 | | 64 | 6 | Accept | 0.1854 | 0.48028 | 0.17736 | 0.17735 | tree | MinLeafSize: 92 | | 65 | 6 | Accept | 0.18534 | 0.498 | 0.17736 | 0.17735 | tree | MinLeafSize: 91 | | 66 | 6 | Accept | 0.18523 | 0.50116 | 0.17736 | 0.17735 | tree | MinLeafSize: 98 | | 67 | 6 | Accept | 0.19488 | 0.32729 | 0.17736 | 0.17735 | tree | MinLeafSize: 594 | | 68 | 6 | Accept | 0.18719 | 0.51344 | 0.17736 | 0.17735 | tree | MinLeafSize: 151 | | 69 | 6 | Accept | 0.18521 | 0.46106 | 0.17736 | 0.17735 | tree | MinLeafSize: 97 | | 70 | 6 | Accept | 0.18994 | 0.64877 | 0.17736 | 0.17735 | tree | MinLeafSize: 37 | | 71 | 6 | Accept | 0.18539 | 0.49978 | 0.17736 | 0.17735 | tree | MinLeafSize: 94 | | 72 | 6 | Accept | 0.18516 | 0.51504 | 0.17736 | 0.17735 | tree | MinLeafSize: 85 | | 73 | 6 | Accept | 0.18621 | 0.55018 | 0.17736 | 0.17735 | tree | MinLeafSize: 61 | | 74 | 6 | Accept | 0.18521 | 0.50246 | 0.17736 | 0.17735 | tree | MinLeafSize: 97 | | 75 | 6 | Accept | 0.18529 | 0.5302 | 0.17736 | 0.17735 | tree | MinLeafSize: 83 | | 76 | 6 | Accept | 0.18523 | 0.48346 | 0.17736 | 0.17735 | tree | MinLeafSize: 98 | | 77 | 6 | Accept | 0.18516 | 0.51575 | 0.17736 | 0.17735 | tree | MinLeafSize: 84 | | 78 | 6 | Accept | 0.18521 | 0.49904 | 0.17736 | 0.17735 | tree | MinLeafSize: 97 | | 79 | 6 | Accept | 0.18518 | 0.53828 | 0.17736 | 0.17735 | tree | MinLeafSize: 86 | | 80 | 6 | Accept | 0.18521 | 0.51996 | 0.17736 | 0.17735 | tree | MinLeafSize: 97 | |==========================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Estimated min | Learner | Hyperparameter: Value | | | workers | result | | & 
validation (sec)| validation loss | validation loss | | | |==========================================================================================================================================================| | 81 | 6 | Accept | 0.18516 | 0.5163 | 0.17736 | 0.17735 | tree | MinLeafSize: 84 | | 82 | 6 | Accept | 0.18545 | 0.51286 | 0.17736 | 0.17735 | tree | MinLeafSize: 96 | | 83 | 6 | Accept | 0.18529 | 0.52428 | 0.17736 | 0.17735 | tree | MinLeafSize: 82 | | 84 | 6 | Accept | 0.18534 | 0.50201 | 0.17736 | 0.17735 | tree | MinLeafSize: 91 | | 85 | 6 | Accept | 0.18521 | 0.53773 | 0.17736 | 0.17735 | tree | MinLeafSize: 97 | | 86 | 6 | Accept | 0.18516 | 0.52911 | 0.17736 | 0.17735 | tree | MinLeafSize: 85 | | 87 | 6 | Accept | 0.18555 | 0.49957 | 0.17736 | 0.17735 | tree | MinLeafSize: 90 | | 88 | 6 | Accept | 0.18519 | 0.51559 | 0.17736 | 0.17735 | tree | MinLeafSize: 100 | | 89 | 6 | Accept | 0.18518 | 0.50232 | 0.17736 | 0.17735 | tree | MinLeafSize: 87 | | 90 | 6 | Accept | 0.18523 | 0.505 | 0.17736 | 0.17735 | tree | MinLeafSize: 98 |

__________________________________________________________
Optimization completed.
Total iterations: 90
Total elapsed time: 571.3359 seconds
Total time for training and validation: 1784.4961 seconds

Best observed learner is an ensemble model with:
    Learner: ensemble
    Method: LSBoost
    NumLearningCycles: 254
    MinLeafSize: 330
Observed log(1 + valLoss): 0.17736
Time for training and validation: 119.4679 seconds

Best estimated learner (returned model) is an ensemble model with:
    Learner: ensemble
    Method: LSBoost
    NumLearningCycles: 254
    MinLeafSize: 330
Estimated log(1 + valLoss): 0.17735
Estimated time for training and validation: 119.5561 seconds

Documentation for fitrauto display

The Total elapsed time value shows that the Bayesian optimization took some time to run (about 10 minutes).

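The final model returned by fitrauto corresponds to the best estimated learner. Before returning the model, the function retrains it using the entire training data set (trainData), the listed Learner (or model) type, and the displayed hyperparameter values.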
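The display reports losses as log(1 + valLoss), so recovering the cross-validation MSE takes one extra step. The sketch below applies the inverse transform to the best observed value from the display, 0.17736. The variable names are illustrative. Because saleprice was log transformed earlier, the resulting MSE is on the log-price scale, not in dollars.

```matlab
% Best observed log(1 + valLoss) reported in the optimization display
logLoss = 0.17736;

% Invert the log(1 + x) transform to recover the cross-validation MSE
valLoss = exp(logLoss) - 1;

% Root mean squared error, also on the log-price scale
valRMSE = sqrt(valLoss);

fprintf("Cross-validation MSE: %.4f, RMSE: %.4f\n",valLoss,valRMSE);
```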

Use Automated Model Selection with ASHA Optimization

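When fitrauto with Bayesian optimization takes a long time to run because of the number of observations in your training set, consider using fitrauto with ASHA optimization instead. Because trainData contains more than 10,000 observations, try using fitrauto with ASHA optimization to automatically find an appropriate regression model. When you use fitrauto with ASHA optimization, the function randomly chooses several models with different hyperparameter values and trains them on a small subset of the training data. If the log(1 + valLoss) value for a particular model is promising, where valLoss is the cross-validation MSE, the model is promoted and trained on a larger amount of the training data. This process repeats, and promising models are trained on progressively larger amounts of data. By default, fitrauto provides a plot of the optimization and an iterative display of the optimization results. For more information on how to interpret these results, see Verbose Display.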
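The promotion idea can be illustrated with a toy, synchronous successive-halving loop. This is a deliberate simplification and not the fitrauto implementation: ASHA promotes models asynchronously as workers become free, and the subset sizes, the halving rule, and the simulated losses below are all assumptions made for illustration.

```matlab
rng(0)                                  % fix the random stream for repeatability
numModels = 8;
trueLoss = rand(numModels,1);           % hypothetical underlying loss of each candidate
subsetSizes = [200 800 3200];           % illustrative sizes, not the values fitrauto uses
active = (1:numModels)';                % indices of candidates still in the running

for n = subsetSizes
    % Simulated loss estimate; the noise shrinks as the training subset grows
    estLoss = trueLoss(active) + randn(numel(active),1)/sqrt(n);

    % Keep the better half of the candidates and promote them to the next size
    [~,order] = sort(estLoss);
    numKeep = max(1,floor(numel(active)/2));
    active = active(order(1:numKeep));
    fprintf("Subset size %d: %d candidate(s) promoted\n",n,numKeep);
end

bestCandidate = active;                 % the single remaining candidate
```

Most candidates are evaluated only on the smallest subset, which is where the time savings on large data sets come from.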

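Specify to run the ASHA optimization in parallel. Note that ASHA optimization often runs more iterations than the default Bayesian optimization. If you have a time constraint, you can specify the MaxTime field of the HyperparameterOptimizationOptions structure to limit the number of seconds that fitrauto runs.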

ashaOptions = struct("Optimizer","asha","UseParallel",true);
[ashaMdl,ashaResults] = fitrauto(trainData,"saleprice", ...
    "HyperparameterOptimizationOptions",ashaOptions);
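If a time limit is needed, the options structure could include the MaxTime field, as in the following sketch. The 300-second limit and the name ashaTimedOptions are arbitrary choices for illustration and are not used elsewhere in this example.

```matlab
% Options structure with an assumed time limit of 300 seconds
ashaTimedOptions = struct("Optimizer","asha","UseParallel",true,"MaxTime",300);
disp(ashaTimedOptions)

% The structure is passed to fitrauto in the same way as ashaOptions:
% [timedMdl,timedResults] = fitrauto(trainData,"saleprice", ...
%     "HyperparameterOptimizationOptions",ashaTimedOptions);
```

The call is left commented out because it requires trainData and a parallel pool.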

Copying objective function to workers...

Warning: Files that have already been attached are being ignored. To see which files are attached see the 'AttachedFiles' property of the parallel pool.

Done copying objective function to workers. Learner types to explore: ensemble, svm, tree Total iterations (MaxObjectiveEvaluations): 340 Total time (MaxTime): Inf |=======================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | size | | | |=======================================================================================================================================================| | 1 | 5 | Best | 0.25924 | 0.39045 | 0.25924 | 228 | tree | MinLeafSize: 449 | | 2 | 5 | Accept | 0.25934 | 0.31103 | 0.25924 | 228 | tree | MinLeafSize: 2530 | | 3 | 5 | Best | 0.25912 | 0.34606 | 0.25912 | 228 | svm | BoxConstraint: 1.3072 | | | | | | | | | | KernelScale: 15.843 | | | | | | | | | | Epsilon: 12.799 | | 4 | 6 | Accept | 1.1239 | 3.8682 | 0.25912 | 228 | svm | BoxConstraint: 0.031078 | | | | | | | | | | KernelScale: 0.70461 | | | | | | | | | | Epsilon: 0.000557 | | 5 | 6 | Accept | 0.25929 | 0.12766 | 0.25912 | 910 | svm | BoxConstraint: 1.3072 | | | | | | | | | | KernelScale: 15.843 | | | | | | | | | | Epsilon: 12.799 | | 6 | 6 | Accept | 0.25925 | 6.0063 | 0.25912 | 228 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 252 | | | | | | | | | | MinLeafSize: 3143 | | 7 | 6 | Accept | 0.25952 | 9.1801 | 0.25912 | 228 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 290 | | | | | | | | | | MinLeafSize: 2969 | | 8 | 6 | Accept | 0.25947 | 5.6197 | 0.25912 | 228 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 204 | | | | | | | | | | MinLeafSize: 1349 | | 9 | 6 | Best | 0.20323 | 7.4145 | 0.20323 | 228 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 207 | | | | | | | | | | MinLeafSize: 8 | | 10 | 6 | Accept | 0.25922 | 5.2324 | 
0.20323 | 228 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 218 | | | | | | | | | | MinLeafSize: 2848 | | 11 | 6 | Accept | 0.26174 | 0.13671 | 0.20323 | 228 | svm | BoxConstraint: 511.01 | | | | | | | | | | KernelScale: 0.002289 | | | | | | | | | | Epsilon: 0.37187 | | 12 | 6 | Accept | 0.23809 | 6.5597 | 0.20323 | 228 | svm | BoxConstraint: 293.55 | | | | | | | | | | KernelScale: 542.8 | | | | | | | | | | Epsilon: 1.1488 | | 13 | 6 | Best | 0.19609 | 7.9481 | 0.19609 | 910 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 207 | | | | | | | | | | MinLeafSize: 8 | | 14 | 6 | Accept | 0.26923 | 0.38435 | 0.19609 | 228 | svm | BoxConstraint: 9.0541 | | | | | | | | | | KernelScale: 4.9834 | | | | | | | | | | Epsilon: 0.023362 | | 15 | 6 | Accept | 0.25935 | 0.2083 | 0.19609 | 228 | tree | MinLeafSize: 2204 | | 16 | 6 | Accept | 0.23759 | 0.17437 | 0.19609 | 910 | svm | BoxConstraint: 293.55 | | | | | | | | | | KernelScale: 542.8 | | | | | | | | | | Epsilon: 1.1488 | | 17 | 6 | Accept | 0.25932 | 0.087589 | 0.19609 | 228 | tree | MinLeafSize: 1521 | | 18 | 6 | Accept | 0.23005 | 0.088929 | 0.19609 | 228 | tree | MinLeafSize: 89 | | 19 | 6 | Accept | 0.22935 | 0.25217 | 0.19609 | 228 | svm | BoxConstraint: 0.0038969 | | | | | | | | | | KernelScale: 4.0278 | | | | | | | | | | Epsilon: 0.02414 | | 20 | 6 | Accept | 0.21347 | 0.66203 | 0.19609 | 910 | svm | BoxConstraint: 0.0038969 | | | | | | | | | | KernelScale: 4.0278 | | | | | | | | | | Epsilon: 0.02414 | |=======================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | size | | | 
|=======================================================================================================================================================| | 21 | 6 | Accept | 1.1498 | 30.005 | 0.19609 | 228 | svm | BoxConstraint: 5.9764 | | | | | | | | | | KernelScale: 0.15731 | | | | | | | | | | Epsilon: 0.13472 | | 22 | 6 | Accept | 0.25943 | 0.27203 | 0.19609 | 228 | svm | BoxConstraint: 0.17794 | | | | | | | | | | KernelScale: 0.0016095 | | | | | | | | | | Epsilon: 0.10435 | | 23 | 6 | Accept | 4.9545 | 31.542 | 0.19609 | 228 | svm | BoxConstraint: 0.0054073 | | | | | | | | | | KernelScale: 0.10302 | | | | | | | | | | Epsilon: 0.084674 | | 24 | 6 | Accept | 0.20767 | 0.15721 | 0.19609 | 228 | tree | MinLeafSize: 3 | | 25 | 6 | Accept | 0.20439 | 0.26159 | 0.19609 | 910 | tree | MinLeafSize: 3 | | 26 | 6 | Accept | 0.25915 | 0.1048 | 0.19609 | 228 | svm | BoxConstraint: 308.98 | | | | | | | | | | KernelScale: 1.5085 | | | | | | | | | | Epsilon: 8.6079 | | 27 | 6 | Accept | 0.26703 | 0.26196 | 0.19609 | 228 | svm | BoxConstraint: 0.020371 | | | | | | | | | | KernelScale: 100.81 | | | | | | | | | | Epsilon: 0.05477 | | 28 | 6 | Accept | 0.22263 | 0.38075 | 0.19609 | 228 | svm | BoxConstraint: 96.951 | | | | | | | | | | KernelScale: 11.176 | | | | | | | | | | Epsilon: 0.061916 | | 29 | 6 | Accept | 0.25923 | 0.097954 | 0.19609 | 228 | svm | BoxConstraint: 0.80152 | | | | | | | | | | KernelScale: 11.639 | | | | | | | | | | Epsilon: 14.529 | | 30 | 6 | Accept | 0.2061 | 2.1617 | 0.19609 | 910 | svm | BoxConstraint: 96.951 | | | | | | | | | | KernelScale: 11.176 | | | | | | | | | | Epsilon: 0.061916 | | 31 | 5 | Accept | 0.2594 | 0.12307 | 0.19609 | 228 | svm | BoxConstraint: 0.0049835 | | | | | | | | | | KernelScale: 0.096697 | | | | | | | | | | Epsilon: 1.8 | | 32 | 5 | Accept | 0.19645 | 12.504 | 0.19609 | 3639 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 207 | | | | | | | | | | MinLeafSize: 8 | | 33 | 6 | Accept | 0.26057 | 0.11147 | 0.19609 | 
228 | tree | MinLeafSize: 254 | | 34 | 6 | Accept | 0.19634 | 8.2066 | 0.19609 | 228 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 232 | | | | | | | | | | MinLeafSize: 21 | | 35 | 6 | Accept | 4.5202 | 38.259 | 0.19609 | 228 | svm | BoxConstraint: 0.0012763 | | | | | | | | | | KernelScale: 0.020115 | | | | | | | | | | Epsilon: 0.010552 | | 36 | 6 | Accept | 0.26111 | 0.17637 | 0.19609 | 228 | svm | BoxConstraint: 5.2635 | | | | | | | | | | KernelScale: 8.9786 | | | | | | | | | | Epsilon: 15.631 | | 37 | 6 | Accept | 0.59958 | 0.12302 | 0.19609 | 228 | svm | BoxConstraint: 2.1875 | | | | | | | | | | KernelScale: 0.0027909 | | | | | | | | | | Epsilon: 1.5475 | | 38 | 6 | Accept | 0.25949 | 8.105 | 0.19609 | 228 | ensemble | Method: LSBoost | | | | | | | | | | NumLearningCycles: 278 | | | | | | | | | | MinLeafSize: 4637 | | 39 | 6 | Accept | 0.25919 | 0.10681 | 0.19609 | 228 | tree | MinLeafSize: 367 | | 40 | 6 | Accept | 0.19879 | 0.10956 | 0.19609 | 910 | tree | MinLeafSize: 89 | |=======================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value | | | workers | result | | & validation (sec)| validation loss | size | | | |=======================================================================================================================================================| | 41 | 6 | Accept | 0.2181 | 7.0304 | 0.19609 | 228 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 230 | | | | | | | | | | MinLeafSize: 47 | | 42 | 6 | Accept | 4.1559 | 38.977 | 0.19609 | 228 | svm | BoxConstraint: 207.78 | | | | | | | | | | KernelScale: 0.046022 | | | | | | | | | | Epsilon: 0.0010033 | | 43 | 6 | Accept | 0.25932 | 0.21084 | 0.19609 | 228 | tree | MinLeafSize: 3815 | | 44 | 6 | Accept | 0.20715 | 0.17156 | 0.19609 | 228 | tree | 
MinLeafSize: 25 |
| 45 | 6 | Best | 0.18857 | 0.17109 | 0.18857 | 910 | tree | MinLeafSize: 25 |
| 46 | 6 | Best | 0.18559 | 10.538 | 0.18559 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 232 |
| | | | | | | | | MinLeafSize: 21 |
| 47 | 6 | Best | 0.18515 | 0.28055 | 0.18515 | 3639 | tree | MinLeafSize: 25 |
| 48 | 6 | Accept | 4.4083 | 0.15547 | 0.18515 | 228 | svm | BoxConstraint: 159.42 |
| | | | | | | | | KernelScale: 0.0025582 |
| | | | | | | | | Epsilon: 0.35672 |
| 49 | 6 | Accept | 0.25999 | 0.080345 | 0.18515 | 228 | tree | MinLeafSize: 924 |
| 50 | 6 | Accept | 0.23664 | 14.625 | 0.18515 | 228 | svm | BoxConstraint: 2.9833 |
| | | | | | | | | KernelScale: 64.904 |
| | | | | | | | | Epsilon: 0.46657 |
| 51 | 6 | Accept | 0.2592 | 0.089563 | 0.18515 | 228 | tree | MinLeafSize: 427 |
| 52 | 6 | Accept | 0.2542 | 7.341 | 0.18515 | 228 | svm | BoxConstraint: 17.138 |
| | | | | | | | | KernelScale: 705.47 |
| | | | | | | | | Epsilon: 0.0020063 |
| 53 | 6 | Accept | 0.23038 | 7.9301 | 0.18515 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 282 |
| | | | | | | | | MinLeafSize: 67 |
| 54 | 6 | Accept | 0.20748 | 0.1069 | 0.18515 | 228 | tree | MinLeafSize: 33 |
| 55 | 6 | Accept | 4.9482 | 31.776 | 0.18515 | 228 | svm | BoxConstraint: 2.4584 |
| | | | | | | | | KernelScale: 0.097169 |
| | | | | | | | | Epsilon: 0.77631 |
| 56 | 6 | Accept | 0.19914 | 0.11203 | 0.18515 | 228 | tree | MinLeafSize: 12 |
| 57 | 6 | Accept | 0.19159 | 0.1374 | 0.18515 | 910 | tree | MinLeafSize: 33 |
| 58 | 6 | Accept | 0.25921 | 0.090418 | 0.18515 | 228 | tree | MinLeafSize: 278 |
| 59 | 6 | Accept | 0.25949 | 0.11956 | 0.18515 | 228 | svm | BoxConstraint: 0.0036313 |
| | | | | | | | | KernelScale: 229.52 |
| | | | | | | | | Epsilon: 19.065 |
| 60 | 6 | Accept | 0.25933 | 0.08928 | 0.18515 | 228 | svm | BoxConstraint: 0.017434 |
| | | | | | | | | KernelScale: 5.5931 |
| | | | | | | | | Epsilon: 6.2283 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 61 | 6 | Accept | 0.19301 | 0.23848 | 0.18515 | 910 | tree | MinLeafSize: 12 |
| 62 | 6 | Accept | 0.25956 | 0.10927 | 0.18515 | 228 | svm | BoxConstraint: 3.3555 |
| | | | | | | | | KernelScale: 11.762 |
| | | | | | | | | Epsilon: 3.4714 |
| 63 | 6 | Accept | 0.19729 | 9.3817 | 0.18515 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 299 |
| | | | | | | | | MinLeafSize: 51 |
| 64 | 6 | Accept | 0.19969 | 9.5324 | 0.18515 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 230 |
| | | | | | | | | MinLeafSize: 47 |
| 65 | 6 | Accept | 0.25944 | 6.8929 | 0.18515 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 266 |
| | | | | | | | | MinLeafSize: 232 |
| 66 | 6 | Accept | 0.25923 | 0.098082 | 0.18515 | 228 | tree | MinLeafSize: 268 |
| 67 | 6 | Accept | 0.19949 | 8.296 | 0.18515 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 250 |
| | | | | | | | | MinLeafSize: 11 |
| 68 | 6 | Accept | 0.29328 | 0.15509 | 0.18515 | 228 | svm | BoxConstraint: 0.0021604 |
| | | | | | | | | KernelScale: 0.72568 |
| | | | | | | | | Epsilon: 1.0249 |
| 69 | 6 | Accept | 0.25924 | 6.998 | 0.18515 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 248 |
| | | | | | | | | MinLeafSize: 5910 |
| 70 | 6 | Accept | 0.25962 | 0.33801 | 0.18515 | 228 | tree | MinLeafSize: 1001 |
| 71 | 6 | Accept | 0.19958 | 8.7508 | 0.18515 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 266 |
| | | | | | | | | MinLeafSize: 2 |
| 72 | 6 | Accept | 0.25936 | 0.1205 | 0.18515 | 228 | svm | BoxConstraint: 0.014495 |
| | | | | | | | | KernelScale: 0.031712 |
| | | | | | | | | Epsilon: 3.5679 |
| 73 | 6 | Accept | 0.25922 | 0.24097 | 0.18515 | 228 | svm | BoxConstraint: 2.3012 |
| | | | | | | | | KernelScale: 835.74 |
| | | | | | | | | Epsilon: 0.0077649 |
| 74 | 6 | Accept | 0.18703 | 11.889 | 0.18515 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 299 |
| | | | | | | | | MinLeafSize: 51 |
| 75 | 6 | Accept | 0.20816 | 0.10311 | 0.18515 | 228 | tree | MinLeafSize: 43 |
| 76 | 6 | Accept | 0.18623 | 10.336 | 0.18515 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 250 |
| | | | | | | | | MinLeafSize: 11 |
| 77 | 6 | Accept | 0.26156 | 0.12955 | 0.18515 | 228 | svm | BoxConstraint: 0.0010645 |
| | | | | | | | | KernelScale: 6.4809 |
| | | | | | | | | Epsilon: 7.9513 |
| 78 | 6 | Accept | 0.20273 | 7.7668 | 0.18515 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 224 |
| | | | | | | | | MinLeafSize: 9 |
| 79 | 6 | Best | 0.1787 | 23.057 | 0.1787 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 232 |
| | | | | | | | | MinLeafSize: 21 |
| 80 | 6 | Accept | 0.26811 | 0.27008 | 0.1787 | 228 | svm | BoxConstraint: 0.0032518 |
| | | | | | | | | KernelScale: 656.68 |
| | | | | | | | | Epsilon: 0.34053 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 81 | 6 | Accept | 0.22695 | 0.29741 | 0.1787 | 228 | svm | BoxConstraint: 0.39402 |
| | | | | | | | | KernelScale: 31.169 |
| | | | | | | | | Epsilon: 0.031802 |
| 82 | 6 | Accept | 0.19756 | 11.213 | 0.1787 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 266 |
| | | | | | | | | MinLeafSize: 2 |
| 83 | 6 | Accept | 4.0586 | 39.347 | 0.1787 | 228 | svm | BoxConstraint: 13.159 |
| | | | | | | | | KernelScale: 0.030173 |
| | | | | | | | | Epsilon: 0.019064 |
| 84 | 6 | Accept | 0.21179 | 0.1484 | 0.1787 | 228 | tree | MinLeafSize: 3 |
| 85 | 6 | Accept | 5.3881 | 39.708 | 0.1787 | 228 | svm | BoxConstraint: 0.036205 |
| | | | | | | | | KernelScale: 0.039957 |
| | | | | | | | | Epsilon: 0.00052381 |
| 86 | 6 | Accept | 0.79147 | 6.8742 | 0.1787 | 228 | svm | BoxConstraint: 425.38 |
| | | | | | | | | KernelScale: 4.6541 |
| | | | | | | | | Epsilon: 0.01015 |
| 87 | 5 | Accept | 0.22605 | 0.2864 | 0.1787 | 228 | svm | BoxConstraint: 0.0080031 |
| | | | | | | | | KernelScale: 3.4638 |
| | | | | | | | | Epsilon: 0.0098092 |
| 88 | 5 | Accept | 0.19337 | 0.14007 | 0.1787 | 910 | tree | MinLeafSize: 43 |
| 89 | 6 | Accept | 0.19487 | 8.9817 | 0.1787 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 280 |
| | | | | | | | | MinLeafSize: 6 |
| 90 | 6 | Accept | 0.1975 | 9.3135 | 0.1787 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 224 |
| | | | | | | | | MinLeafSize: 9 |
| 91 | 6 | Accept | 0.25954 | 0.096006 | 0.1787 | 228 | tree | MinLeafSize: 6723 |
| 92 | 6 | Accept | 0.25928 | 0.089713 | 0.1787 | 228 | tree | MinLeafSize: 723 |
| 93 | 6 | Accept | 1.7565 | 22.635 | 0.1787 | 228 | svm | BoxConstraint: 31.378 |
| | | | | | | | | KernelScale: 1.578 |
| | | | | | | | | Epsilon: 0.13664 |
| 94 | 6 | Accept | 0.19604 | 9.4771 | 0.1787 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 1 |
| 95 | 6 | Accept | 0.25926 | 5.3677 | 0.1787 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 201 |
| | | | | | | | | MinLeafSize: 1178 |
| 96 | 6 | Accept | 0.20448 | 0.18023 | 0.1787 | 228 | tree | MinLeafSize: 4 |
| 97 | 6 | Accept | 0.25921 | 6.9837 | 0.1787 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 252 |
| | | | | | | | | MinLeafSize: 146 |
| 98 | 6 | Accept | 0.25947 | 0.09563 | 0.1787 | 228 | tree | MinLeafSize: 600 |
| 99 | 6 | Accept | 0.19444 | 7.1977 | 0.1787 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 229 |
| | | | | | | | | MinLeafSize: 7 |
| 100 | 6 | Accept | 0.21648 | 0.15696 | 0.1787 | 228 | tree | MinLeafSize: 2 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 101 | 6 | Accept | 0.18608 | 11.922 | 0.1787 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 280 |
| | | | | | | | | MinLeafSize: 6 |
| 102 | 6 | Accept | 0.18584 | 12.463 | 0.1787 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 1 |
| 103 | 6 | Accept | 0.21165 | 0.11397 | 0.1787 | 228 | tree | MinLeafSize: 53 |
| 104 | 6 | Accept | 0.25928 | 0.084474 | 0.1787 | 228 | tree | MinLeafSize: 8998 |
| 105 | 6 | Accept | 0.25925 | 0.095797 | 0.1787 | 228 | tree | MinLeafSize: 2272 |
| 106 | 6 | Accept | 0.25923 | 0.080193 | 0.1787 | 228 | tree | MinLeafSize: 5905 |
| 107 | 6 | Accept | 0.19701 | 0.27589 | 0.1787 | 910 | tree | MinLeafSize: 4 |
| 108 | 6 | Accept | 0.20037 | 10.177 | 0.1787 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 280 |
| | | | | | | | | MinLeafSize: 1 |
| 109 | 6 | Accept | 0.25958 | 0.10236 | 0.1787 | 228 | tree | MinLeafSize: 134 |
| 110 | 6 | Accept | 0.25934 | 0.10276 | 0.1787 | 228 | svm | BoxConstraint: 74.567 |
| | | | | | | | | KernelScale: 0.0015974 |
| | | | | | | | | Epsilon: 0.0034784 |
| 111 | 6 | Accept | 0.20288 | 0.1018 | 0.1787 | 228 | tree | MinLeafSize: 19 |
| 112 | 6 | Accept | 0.18487 | 10.591 | 0.1787 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 229 |
| | | | | | | | | MinLeafSize: 7 |
| 113 | 6 | Accept | 0.21404 | 0.15926 | 0.1787 | 228 | tree | MinLeafSize: 3 |
| 114 | 6 | Accept | 0.17898 | 24.396 | 0.1787 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 250 |
| | | | | | | | | MinLeafSize: 11 |
| 115 | 6 | Accept | 0.25781 | 7.0975 | 0.1787 | 228 | svm | BoxConstraint: 0.57268 |
| | | | | | | | | KernelScale: 144.77 |
| | | | | | | | | Epsilon: 0.0035523 |
| 116 | 6 | Accept | 4.8979 | 33.111 | 0.1787 | 228 | svm | BoxConstraint: 42.319 |
| | | | | | | | | KernelScale: 0.090631 |
| | | | | | | | | Epsilon: 0.10486 |
| 117 | 6 | Accept | 0.20353 | 0.11934 | 0.1787 | 228 | tree | MinLeafSize: 6 |
| 118 | 6 | Accept | 0.19678 | 11.63 | 0.1787 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 280 |
| | | | | | | | | MinLeafSize: 1 |
| 119 | 6 | Accept | 0.18914 | 0.16287 | 0.1787 | 910 | tree | MinLeafSize: 19 |
| 120 | 6 | Accept | 0.20292 | 0.15012 | 0.1787 | 228 | tree | MinLeafSize: 4 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 121 | 6 | Accept | 0.25926 | 0.085338 | 0.1787 | 228 | tree | MinLeafSize: 1980 |
| 122 | 6 | Accept | 0.25924 | 0.088001 | 0.1787 | 228 | tree | MinLeafSize: 7649 |
| 123 | 6 | Accept | 0.20825 | 0.27787 | 0.1787 | 228 | svm | BoxConstraint: 20.926 |
| | | | | | | | | KernelScale: 46.672 |
| | | | | | | | | Epsilon: 0.0093695 |
| 124 | 6 | Accept | 0.19916 | 0.24746 | 0.1787 | 910 | tree | MinLeafSize: 4 |
| 125 | 6 | Accept | 0.17911 | 28.029 | 0.1787 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 1 |
| 126 | 6 | Accept | 4.6514 | 38.346 | 0.1787 | 228 | svm | BoxConstraint: 0.0099888 |
| | | | | | | | | KernelScale: 0.032527 |
| | | | | | | | | Epsilon: 0.012744 |
| 127 | 6 | Accept | 0.19877 | 8.8632 | 0.1787 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 275 |
| | | | | | | | | MinLeafSize: 1 |
| 128 | 6 | Accept | 0.25929 | 0.0835 | 0.1787 | 228 | tree | MinLeafSize: 4202 |
| 129 | 6 | Accept | 0.19996 | 0.11225 | 0.1787 | 228 | tree | MinLeafSize: 12 |
| 130 | 6 | Accept | 0.22175 | 7.2107 | 0.1787 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 248 |
| | | | | | | | | MinLeafSize: 49 |
| 131 | 6 | Accept | 0.25927 | 0.10101 | 0.1787 | 228 | svm | BoxConstraint: 463.19 |
| | | | | | | | | KernelScale: 0.0015281 |
| | | | | | | | | Epsilon: 3.5963 |
| 132 | 6 | Accept | 0.23848 | 0.25945 | 0.1787 | 228 | svm | BoxConstraint: 0.0031649 |
| | | | | | | | | KernelScale: 4.8895 |
| | | | | | | | | Epsilon: 0.0013612 |
| 133 | 6 | Best | 0.17865 | 26.593 | 0.17865 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 229 |
| | | | | | | | | MinLeafSize: 7 |
| 134 | 6 | Accept | 0.26635 | 0.13553 | 0.17865 | 228 | svm | BoxConstraint: 242.37 |
| | | | | | | | | KernelScale: 76.45 |
| | | | | | | | | Epsilon: 1.5249 |
| 135 | 6 | Accept | 0.19013 | 0.17075 | 0.17865 | 910 | tree | MinLeafSize: 12 |
| 136 | 6 | Accept | 5.2905 | 33.591 | 0.17865 | 228 | svm | BoxConstraint: 14.996 |
| | | | | | | | | KernelScale: 0.10377 |
| | | | | | | | | Epsilon: 0.0016871 |
| 137 | 6 | Accept | 4.799 | 0.21266 | 0.17865 | 228 | svm | BoxConstraint: 21.44 |
| | | | | | | | | KernelScale: 0.0076633 |
| | | | | | | | | Epsilon: 0.23505 |
| 138 | 6 | Accept | 0.25925 | 0.084649 | 0.17865 | 228 | tree | MinLeafSize: 8192 |
| 139 | 6 | Accept | 0.25938 | 6.5295 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 230 |
| | | | | | | | | MinLeafSize: 377 |
| 140 | 6 | Accept | 0.19391 | 0.23152 | 0.17865 | 910 | tree | MinLeafSize: 6 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 141 | 6 | Accept | 0.20039 | 9.1042 | 0.17865 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 262 |
| | | | | | | | | MinLeafSize: 7 |
| 142 | 6 | Accept | 4.3905 | 0.19627 | 0.17865 | 228 | svm | BoxConstraint: 0.0090374 |
| | | | | | | | | KernelScale: 0.0060556 |
| | | | | | | | | Epsilon: 0.71 |
| 143 | 6 | Accept | 0.19648 | 11.545 | 0.17865 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 275 |
| | | | | | | | | MinLeafSize: 1 |
| 144 | 6 | Accept | 0.44845 | 0.51895 | 0.17865 | 228 | svm | BoxConstraint: 1.7321 |
| | | | | | | | | KernelScale: 2.1647 |
| | | | | | | | | Epsilon: 0.15215 |
| 145 | 6 | Accept | 0.20219 | 0.28444 | 0.17865 | 228 | svm | BoxConstraint: 220.22 |
| | | | | | | | | KernelScale: 40.425 |
| | | | | | | | | Epsilon: 0.030772 |
| 146 | 6 | Accept | 0.82646 | 5.6295 | 0.17865 | 228 | svm | BoxConstraint: 273.9 |
| | | | | | | | | KernelScale: 4.1295 |
| | | | | | | | | Epsilon: 0.0024699 |
| 147 | 6 | Accept | 3.7322 | 38.403 | 0.17865 | 228 | svm | BoxConstraint: 0.0010279 |
| | | | | | | | | KernelScale: 0.017025 |
| | | | | | | | | Epsilon: 0.69262 |
| 148 | 6 | Accept | 0.25967 | 0.088793 | 0.17865 | 228 | svm | BoxConstraint: 0.021344 |
| | | | | | | | | KernelScale: 0.0012067 |
| | | | | | | | | Epsilon: 0.06872 |
| 149 | 6 | Accept | 0.25926 | 5.7485 | 0.17865 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 226 |
| | | | | | | | | MinLeafSize: 6821 |
| 150 | 6 | Accept | 1.1525 | 3.7652 | 0.17865 | 228 | svm | BoxConstraint: 0.46953 |
| | | | | | | | | KernelScale: 1.1723 |
| | | | | | | | | Epsilon: 0.018647 |
| 151 | 6 | Accept | 0.20021 | 0.99398 | 0.17865 | 910 | svm | BoxConstraint: 220.22 |
| | | | | | | | | KernelScale: 40.425 |
| | | | | | | | | Epsilon: 0.030772 |
| 152 | 6 | Accept | 0.20343 | 10.365 | 0.17865 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 279 |
| | | | | | | | | MinLeafSize: 1 |
| 153 | 6 | Accept | 0.21077 | 9.0509 | 0.17865 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 239 |
| | | | | | | | | MinLeafSize: 26 |
| 154 | 6 | Accept | 0.19652 | 11.886 | 0.17865 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 262 |
| | | | | | | | | MinLeafSize: 7 |
| 155 | 6 | Accept | 0.21346 | 0.107 | 0.17865 | 228 | tree | MinLeafSize: 40 |
| 156 | 6 | Accept | 0.25943 | 6.6607 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 213 |
| | | | | | | | | MinLeafSize: 5729 |
| 157 | 6 | Accept | 0.25919 | 0.12216 | 0.17865 | 228 | svm | BoxConstraint: 1.3285 |
| | | | | | | | | KernelScale: 736.47 |
| | | | | | | | | Epsilon: 9.793 |
| 158 | 6 | Accept | 0.25921 | 8.1718 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 299 |
| | | | | | | | | MinLeafSize: 263 |
| 159 | 6 | Accept | 0.22275 | 0.20173 | 0.17865 | 228 | tree | MinLeafSize: 1 |
| 160 | 6 | Accept | 0.19625 | 0.73561 | 0.17865 | 910 | svm | BoxConstraint: 20.926 |
| | | | | | | | | KernelScale: 46.672 |
| | | | | | | | | Epsilon: 0.0093695 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 161 | 6 | Accept | 0.25939 | 0.098374 | 0.17865 | 228 | svm | BoxConstraint: 0.058482 |
| | | | | | | | | KernelScale: 0.0022433 |
| | | | | | | | | Epsilon: 1.1598 |
| 162 | 6 | Accept | 0.25928 | 0.088939 | 0.17865 | 228 | tree | MinLeafSize: 2723 |
| 163 | 6 | Accept | 0.19591 | 6.7653 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 209 |
| | | | | | | | | MinLeafSize: 13 |
| 164 | 6 | Accept | 0.21206 | 0.20982 | 0.17865 | 228 | svm | BoxConstraint: 0.23746 |
| | | | | | | | | KernelScale: 3.6412 |
| | | | | | | | | Epsilon: 0.20189 |
| 165 | 6 | Accept | 1.5717 | 12.792 | 0.17865 | 228 | svm | BoxConstraint: 0.1537 |
| | | | | | | | | KernelScale: 0.7338 |
| | | | | | | | | Epsilon: 0.0023964 |
| 166 | 6 | Accept | 4.6735 | 0.15923 | 0.17865 | 228 | svm | BoxConstraint: 4.4526 |
| | | | | | | | | KernelScale: 0.0035563 |
| | | | | | | | | Epsilon: 0.46657 |
| 167 | 6 | Accept | 0.19707 | 11.491 | 0.17865 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 279 |
| | | | | | | | | MinLeafSize: 1 |
| 168 | 6 | Accept | 0.23619 | 0.39746 | 0.17865 | 228 | svm | BoxConstraint: 157.33 |
| | | | | | | | | KernelScale: 12.524 |
| | | | | | | | | Epsilon: 0.031316 |
| 169 | 6 | Accept | 0.25921 | 0.079397 | 0.17865 | 228 | tree | MinLeafSize: 6454 |
| 170 | 6 | Accept | 0.25921 | 5.7177 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 210 |
| | | | | | | | | MinLeafSize: 786 |
| 171 | 6 | Accept | 0.26015 | 0.11349 | 0.17865 | 228 | svm | BoxConstraint: 0.4392 |
| | | | | | | | | KernelScale: 16.376 |
| | | | | | | | | Epsilon: 26.02 |
| 172 | 6 | Accept | 0.25929 | 6.9015 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 258 |
| | | | | | | | | MinLeafSize: 799 |
| 173 | 6 | Accept | 0.26118 | 0.11207 | 0.17865 | 228 | svm | BoxConstraint: 1.9516 |
| | | | | | | | | KernelScale: 0.43497 |
| | | | | | | | | Epsilon: 9.5343 |
| 174 | 6 | Accept | 0.19444 | 0.14242 | 0.17865 | 910 | tree | MinLeafSize: 53 |
| 175 | 6 | Accept | 0.18394 | 9.6563 | 0.17865 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 209 |
| | | | | | | | | MinLeafSize: 13 |
| 176 | 6 | Accept | 0.25479 | 0.14223 | 0.17865 | 228 | svm | BoxConstraint: 225.13 |
| | | | | | | | | KernelScale: 123.73 |
| | | | | | | | | Epsilon: 1.3343 |
| 177 | 6 | Accept | 0.1795 | 25.509 | 0.17865 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 280 |
| | | | | | | | | MinLeafSize: 6 |
| 178 | 6 | Accept | 0.21688 | 0.37729 | 0.17865 | 228 | svm | BoxConstraint: 768.25 |
| | | | | | | | | KernelScale: 29.702 |
| | | | | | | | | Epsilon: 0.0024812 |
| 179 | 6 | Accept | 0.26039 | 0.11452 | 0.17865 | 228 | svm | BoxConstraint: 4.9484 |
| | | | | | | | | KernelScale: 1.0482 |
| | | | | | | | | Epsilon: 21.234 |
| 180 | 6 | Accept | 0.19701 | 9.8469 | 0.17865 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 239 |
| | | | | | | | | MinLeafSize: 26 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 181 | 6 | Accept | 0.25983 | 5.9307 | 0.17865 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 225 |
| | | | | | | | | MinLeafSize: 6419 |
| 182 | 6 | Accept | 0.20388 | 0.29265 | 0.17865 | 910 | tree | MinLeafSize: 3 |
| 183 | 6 | Accept | 0.25921 | 6.2816 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 232 |
| | | | | | | | | MinLeafSize: 159 |
| 184 | 6 | Accept | 0.2619 | 0.097129 | 0.17865 | 228 | svm | BoxConstraint: 0.0037221 |
| | | | | | | | | KernelScale: 0.37378 |
| | | | | | | | | Epsilon: 9.2589 |
| 185 | 6 | Accept | 0.25928 | 0.10908 | 0.17865 | 228 | tree | MinLeafSize: 7591 |
| 186 | 6 | Accept | 0.20357 | 0.090146 | 0.17865 | 228 | tree | MinLeafSize: 27 |
| 187 | 6 | Accept | 0.1893 | 0.15405 | 0.17865 | 910 | tree | MinLeafSize: 27 |
| 188 | 6 | Accept | 0.25952 | 5.8543 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 220 |
| | | | | | | | | MinLeafSize: 283 |
| 189 | 6 | Accept | 0.25922 | 0.094638 | 0.17865 | 228 | tree | MinLeafSize: 735 |
| 190 | 6 | Accept | 1.0501 | 9.0791 | 0.17865 | 228 | svm | BoxConstraint: 0.0029624 |
| | | | | | | | | KernelScale: 0.4503 |
| | | | | | | | | Epsilon: 0.0047908 |
| 191 | 6 | Accept | 2.0742 | 24.322 | 0.17865 | 228 | svm | BoxConstraint: 0.31516 |
| | | | | | | | | KernelScale: 0.58016 |
| | | | | | | | | Epsilon: 0.0081462 |
| 192 | 6 | Accept | 0.25947 | 0.10052 | 0.17865 | 228 | svm | BoxConstraint: 0.0077418 |
| | | | | | | | | KernelScale: 0.0015665 |
| | | | | | | | | Epsilon: 36.814 |
| 193 | 6 | Accept | 0.19851 | 0.11387 | 0.17865 | 228 | tree | MinLeafSize: 8 |
| 194 | 6 | Accept | 0.26163 | 0.084585 | 0.17865 | 228 | svm | BoxConstraint: 112.86 |
| | | | | | | | | KernelScale: 0.0021014 |
| | | | | | | | | Epsilon: 0.029344 |
| 195 | 6 | Accept | 0.19976 | 0.55087 | 0.17865 | 910 | svm | BoxConstraint: 0.23746 |
| | | | | | | | | KernelScale: 3.6412 |
| | | | | | | | | Epsilon: 0.20189 |
| 196 | 6 | Accept | 0.20698 | 0.12351 | 0.17865 | 228 | tree | MinLeafSize: 4 |
| 197 | 6 | Accept | 0.19674 | 0.19255 | 0.17865 | 910 | tree | MinLeafSize: 8 |
| 198 | 6 | Accept | 0.25922 | 0.097554 | 0.17865 | 228 | svm | BoxConstraint: 20.918 |
| | | | | | | | | KernelScale: 646.59 |
| | | | | | | | | Epsilon: 5.3626 |
| 199 | 6 | Accept | 4.3697 | 0.16234 | 0.17865 | 228 | svm | BoxConstraint: 283.79 |
| | | | | | | | | KernelScale: 0.0039105 |
| | | | | | | | | Epsilon: 0.071105 |
| 200 | 6 | Accept | 0.19725 | 8.0734 | 0.17865 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 262 |
| | | | | | | | | MinLeafSize: 10 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 201 | 6 | Accept | 0.17981 | 20.71 | 0.17865 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 209 |
| | | | | | | | | MinLeafSize: 13 |
| 202 | 6 | Best | 0.17839 | 31.64 | 0.17839 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 299 |
| | | | | | | | | MinLeafSize: 51 |
| 203 | 6 | Accept | 3.0117 | 25.226 | 0.17839 | 228 | svm | BoxConstraint: 0.0010023 |
| | | | | | | | | KernelScale: 0.21324 |
| | | | | | | | | Epsilon: 0.015111 |
| 204 | 6 | Best | 0.17747 | 113.95 | 0.17747 | 14556 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 232 |
| | | | | | | | | MinLeafSize: 21 |
| 205 | 6 | Accept | 0.20464 | 0.12123 | 0.17747 | 228 | tree | MinLeafSize: 38 |
| 206 | 6 | Accept | 0.20345 | 0.11611 | 0.17747 | 228 | tree | MinLeafSize: 5 |
| 207 | 6 | Accept | 0.25958 | 0.084698 | 0.17747 | 228 | tree | MinLeafSize: 175 |
| 208 | 6 | Accept | 0.25946 | 6.6024 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 259 |
| | | | | | | | | MinLeafSize: 2564 |
| 209 | 6 | Accept | 0.19334 | 0.24243 | 0.17747 | 910 | tree | MinLeafSize: 5 |
| 210 | 6 | Accept | 0.19562 | 9.0505 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 271 |
| | | | | | | | | MinLeafSize: 1 |
| 211 | 6 | Accept | 0.18447 | 11.522 | 0.17747 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 262 |
| | | | | | | | | MinLeafSize: 10 |
| 212 | 6 | Accept | 0.25934 | 6.8799 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 265 |
| | | | | | | | | MinLeafSize: 283 |
| 213 | 6 | Accept | 0.20264 | 9.0681 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 266 |
| | | | | | | | | MinLeafSize: 9 |
| 214 | 6 | Accept | 4.2528 | 19.129 | 0.17747 | 228 | svm | BoxConstraint: 82.625 |
| | | | | | | | | KernelScale: 0.049305 |
| | | | | | | | | Epsilon: 0.1354 |
| 215 | 6 | Accept | 0.36051 | 22.407 | 0.17747 | 228 | svm | BoxConstraint: 0.24379 |
| | | | | | | | | KernelScale: 0.21574 |
| | | | | | | | | Epsilon: 0.19855 |
| 216 | 6 | Accept | 0.19788 | 0.11434 | 0.17747 | 228 | tree | MinLeafSize: 7 |
| 217 | 6 | Accept | 4.6436 | 38.78 | 0.17747 | 228 | svm | BoxConstraint: 0.0074352 |
| | | | | | | | | KernelScale: 0.0083396 |
| | | | | | | | | Epsilon: 0.0013205 |
| 218 | 6 | Accept | 0.25962 | 0.10879 | 0.17747 | 228 | tree | MinLeafSize: 2811 |
| 219 | 6 | Accept | 0.20341 | 7.1399 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 213 |
| | | | | | | | | MinLeafSize: 8 |
| 220 | 6 | Accept | 0.19174 | 0.31247 | 0.17747 | 910 | tree | MinLeafSize: 7 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 221 | 6 | Accept | 4.4187 | 0.18142 | 0.17747 | 228 | svm | BoxConstraint: 0.062005 |
| | | | | | | | | KernelScale: 0.0061157 |
| | | | | | | | | Epsilon: 1.2716 |
| 222 | 6 | Accept | 0.25924 | 0.085414 | 0.17747 | 228 | tree | MinLeafSize: 1624 |
| 223 | 6 | Accept | 0.2593 | 4.8506 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 202 |
| | | | | | | | | MinLeafSize: 1594 |
| 224 | 6 | Accept | 0.20956 | 7.8639 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 249 |
| | | | | | | | | MinLeafSize: 68 |
| 225 | 6 | Accept | 0.25934 | 0.096467 | 0.17747 | 228 | tree | MinLeafSize: 963 |
| 226 | 6 | Accept | 0.17886 | 25.303 | 0.17747 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 262 |
| | | | | | | | | MinLeafSize: 10 |
| 227 | 6 | Accept | 0.25947 | 0.10379 | 0.17747 | 228 | tree | MinLeafSize: 1473 |
| 228 | 6 | Accept | 0.25918 | 0.13096 | 0.17747 | 228 | svm | BoxConstraint: 0.38183 |
| | | | | | | | | KernelScale: 0.023107 |
| | | | | | | | | Epsilon: 10.677 |
| 229 | 6 | Accept | 0.18509 | 12.601 | 0.17747 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 271 |
| | | | | | | | | MinLeafSize: 1 |
| 230 | 6 | Accept | 0.19606 | 8.9382 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 213 |
| | | | | | | | | MinLeafSize: 8 |
| 231 | 5 | Accept | 0.19671 | 11.18 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 266 |
| | | | | | | | | MinLeafSize: 9 |
| 232 | 5 | Accept | 0.20255 | 0.13277 | 0.17747 | 228 | tree | MinLeafSize: 5 |
| 233 | 6 | Accept | 0.26213 | 0.26877 | 0.17747 | 228 | svm | BoxConstraint: 0.0028038 |
| | | | | | | | | KernelScale: 810.16 |
| | | | | | | | | Epsilon: 0.0030228 |
| 234 | 6 | Accept | 0.25943 | 0.091359 | 0.17747 | 228 | tree | MinLeafSize: 215 |
| 235 | 6 | Accept | 0.25962 | 0.096807 | 0.17747 | 228 | svm | BoxConstraint: 0.009004 |
| | | | | | | | | KernelScale: 37.307 |
| | | | | | | | | Epsilon: 5.8595 |
| 236 | 6 | Accept | 0.19437 | 0.21291 | 0.17747 | 910 | tree | MinLeafSize: 5 |
| 237 | 6 | Accept | 0.20498 | 0.25416 | 0.17747 | 228 | svm | BoxConstraint: 0.71175 |
| | | | | | | | | KernelScale: 6.6854 |
| | | | | | | | | Epsilon: 0.0028282 |
| 238 | 6 | Accept | 0.26001 | 0.095152 | 0.17747 | 228 | svm | BoxConstraint: 120.8 |
| | | | | | | | | KernelScale: 12.251 |
| | | | | | | | | Epsilon: 4.4155 |
| 239 | 6 | Accept | 0.25924 | 0.073817 | 0.17747 | 228 | tree | MinLeafSize: 2181 |
| 240 | 6 | Accept | 0.21369 | 0.14467 | 0.17747 | 228 | tree | MinLeafSize: 3 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 241 | 6 | Accept | 0.1906 | 0.13465 | 0.17747 | 910 | tree | MinLeafSize: 38 |
| 242 | 6 | Accept | 0.40741 | 1.3827 | 0.17747 | 228 | svm | BoxConstraint: 227.27 |
| | | | | | | | | KernelScale: 6.4364 |
| | | | | | | | | Epsilon: 0.13925 |
| 243 | 6 | Accept | 0.20198 | 9.1787 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 283 |
| | | | | | | | | MinLeafSize: 9 |
| 244 | 5 | Accept | 3.8508 | 39.213 | 0.17747 | 228 | svm | BoxConstraint: 587.66 |
| | | | | | | | | KernelScale: 0.014658 |
| | | | | | | | | Epsilon: 0.036807 |
| 245 | 5 | Accept | 0.19808 | 8.3436 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 14 |
| 246 | 6 | Accept | 4.8726 | 31.184 | 0.17747 | 228 | svm | BoxConstraint: 784.29 |
| | | | | | | | | KernelScale: 0.075318 |
| | | | | | | | | Epsilon: 0.18619 |
| 247 | 6 | Accept | 0.25987 | 0.10339 | 0.17747 | 228 | svm | BoxConstraint: 0.0071746 |
| | | | | | | | | KernelScale: 0.15234 |
| | | | | | | | | Epsilon: 11.082 |
| 248 | 6 | Accept | 0.1797 | 25.885 | 0.17747 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 271 |
| | | | | | | | | MinLeafSize: 1 |
| 249 | 6 | Accept | 0.2611 | 0.1044 | 0.17747 | 228 | svm | BoxConstraint: 3.4502 |
| | | | | | | | | KernelScale: 5.8058 |
| | | | | | | | | Epsilon: 3.6108 |
| 250 | 6 | Accept | 0.25929 | 0.12647 | 0.17747 | 228 | tree | MinLeafSize: 344 |
| 251 | 6 | Accept | 4.4082 | 38.748 | 0.17747 | 228 | svm | BoxConstraint: 17.315 |
| | | | | | | | | KernelScale: 0.016928 |
| | | | | | | | | Epsilon: 0.73348 |
| 252 | 6 | Accept | 0.2593 | 6.3505 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 258 |
| | | | | | | | | MinLeafSize: 400 |
| 253 | 6 | Accept | 0.26003 | 0.10729 | 0.17747 | 228 | tree | MinLeafSize: 828 |
| 254 | 6 | Accept | 0.20025 | 8.1396 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 234 |
| | | | | | | | | MinLeafSize: 1 |
| 255 | 6 | Accept | 0.18528 | 12.7 | 0.17747 | 910 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 14 |
| 256 | 6 | Accept | 0.26376 | 0.26448 | 0.17747 | 228 | svm | BoxConstraint: 0.50105 |
| | | | | | | | | KernelScale: 434.22 |
| | | | | | | | | Epsilon: 0.0099215 |
| 257 | 6 | Accept | 0.25921 | 6.9512 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 255 |
| | | | | | | | | MinLeafSize: 3385 |
| 258 | 6 | Accept | 0.1978 | 11.792 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 283 |
| | | | | | | | | MinLeafSize: 9 |
| 259 | 6 | Accept | 0.19776 | 9.7264 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 234 |
| | | | | | | | | MinLeafSize: 1 |
| 260 | 6 | Accept | 0.24875 | 7.349 | 0.17747 | 228 | svm | BoxConstraint: 14.01 |
| | | | | | | | | KernelScale: 469.23 |
| | | | | | | | | Epsilon: 0.13191 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 261 | 6 | Accept | 0.22455 | 6.9209 | 0.17747 | 228 | svm | BoxConstraint: 0.18817 |
| | | | | | | | | KernelScale: 12.784 |
| | | | | | | | | Epsilon: 0.6341 |
| 262 | 6 | Accept | 0.19167 | 0.75477 | 0.17747 | 910 | svm | BoxConstraint: 0.71175 |
| | | | | | | | | KernelScale: 6.6854 |
| | | | | | | | | Epsilon: 0.0028282 |
| 263 | 6 | Accept | 0.25957 | 0.11494 | 0.17747 | 228 | svm | BoxConstraint: 118.19 |
| | | | | | | | | KernelScale: 0.0023401 |
| | | | | | | | | Epsilon: 2.6591 |
| 264 | 6 | Accept | 0.25909 | 0.10551 | 0.17747 | 228 | svm | BoxConstraint: 0.13507 |
| | | | | | | | | KernelScale: 0.0022823 |
| | | | | | | | | Epsilon: 0.0016091 |
| 265 | 6 | Accept | 0.271 | 0.44689 | 0.17747 | 228 | svm | BoxConstraint: 973.84 |
| | | | | | | | | KernelScale: 10.717 |
| | | | | | | | | Epsilon: 0.7485 |
| 266 | 6 | Accept | 0.20133 | 8.5234 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 254 |
| | | | | | | | | MinLeafSize: 13 |
| 267 | 6 | Accept | 0.20039 | 9.6539 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 285 |
| | | | | | | | | MinLeafSize: 5 |
| 268 | 6 | Accept | 5.9515 | 31.371 | 0.17747 | 228 | svm | BoxConstraint: 15.141 |
| | | | | | | | | KernelScale: 0.15473 |
| | | | | | | | | Epsilon: 0.017362 |
| 269 | 6 | Accept | 0.21388 | 0.17365 | 0.17747 | 228 | svm | BoxConstraint: 7.419 |
| | | | | | | | | KernelScale: 44.748 |
| | | | | | | | | Epsilon: 0.53286 |
| 270 | 6 | Accept | 4.2493 | 0.16616 | 0.17747 | 228 | svm | BoxConstraint: 28.598 |
| | | | | | | | | KernelScale: 0.0057012 |
| | | | | | | | | Epsilon: 0.49041 |
| 271 | 6 | Accept | 0.19753 | 10.631 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 254 |
| | | | | | | | | MinLeafSize: 13 |
| 272 | 6 | Accept | 0.25935 | 0.10701 | 0.17747 | 228 | svm | BoxConstraint: 0.5398 |
| | | | | | | | | KernelScale: 0.0010211 |
| | | | | | | | | Epsilon: 0.2952 |
| 273 | 6 | Accept | 7.3592 | 29.508 | 0.17747 | 228 | svm | BoxConstraint: 0.0027531 |
| | | | | | | | | KernelScale: 0.21405 |
| | | | | | | | | Epsilon: 0.49714 |
| 274 | 6 | Accept | 0.17866 | 30.497 | 0.17747 | 3639 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 289 |
| | | | | | | | | MinLeafSize: 14 |
| 275 | 6 | Accept | 0.19587 | 11.523 | 0.17747 | 910 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 285 |
| | | | | | | | | MinLeafSize: 5 |
| 276 | 6 | Accept | 0.18464 | 0.26372 | 0.17747 | 3639 | tree | MinLeafSize: 19 |
| 277 | 6 | Accept | 0.25925 | 0.083213 | 0.17747 | 228 | tree | MinLeafSize: 446 |
| 278 | 6 | Accept | 0.26052 | 0.11342 | 0.17747 | 228 | svm | BoxConstraint: 1.1407 |
| | | | | | | | | KernelScale: 0.014809 |
| | | | | | | | | Epsilon: 4.2648 |
| 279 | 6 | Accept | 0.19919 | 0.21925 | 0.17747 | 910 | tree | MinLeafSize: 4 |
| 280 | 6 | Accept | 0.17749 | 122.4 | 0.17747 | 14556 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 229 |
| | | | | | | | | MinLeafSize: 7 |
|=======================================================================================================================================================|
| Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value |
| | workers | result | | & validation (sec)| validation loss | size | | |
|=======================================================================================================================================================|
| 281 | 6 | Accept | 0.24351 | 13.121 | 0.17747 | 228 | svm | BoxConstraint: 0.0033709 |
| | | | | | | | | KernelScale: 2.689 |
| | | | | | | | | Epsilon: 0.93454 |
| 282 | 6 | Accept | 0.26078 | 7.0174 | 0.17747 | 228 | svm | BoxConstraint: 0.012946 |
| | | | | | | | | KernelScale: 32.975 |
| | | | | | | | | Epsilon: 0.002842 |
| 283 | 6 | Accept | 0.2133 | 0.24612 | 0.17747 | 228 | svm | BoxConstraint: 0.0075765 |
| | | | | | | | | KernelScale: 2.8074 |
| | | | | | | | | Epsilon: 0.0044881 |
| 284 | 6 | Accept | 0.20091 | 8.2694 | 0.17747 | 228 | ensemble | Method: Bag |
| | | | | | | | | NumLearningCycles: 255 |
| | | | | | | | | MinLeafSize: 6 |
| 285 | 6 | Accept | 3.6401 | 23.897 | 0.17747 | 228 | svm | BoxConstraint: 0.0026163 |
| | | | | | | | | KernelScale: 0.10725 |
| | | | | | | | | Epsilon: 0.0041286 |
| 286 | 6 | Accept | 4.2321 | 0.12661 | 0.17747 | 228 | svm | BoxConstraint: 237.21 |
| | | | | | | | | KernelScale: 0.0035398 |
| | | | | | | | | Epsilon: 0.001316 |
| 287 | 6 | Accept | 0.25978 | 7.9338 | 0.17747 | 228 | ensemble | Method: LSBoost |
| | | | | | | | | NumLearningCycles: 292 |
| | | | | | | | 
| MinLeafSize: 291 | | 288 | 6 | Accept | 0.25928 | 0.10312 | 0.17747 | 228 | tree | MinLeafSize: 7169 | | 289 | 6 | Accept | 0.23721 | 6.9699 | 0.17747 | 228 | svm | BoxConstraint: 22.889 | | | | | | | | | | KernelScale: 344 | | | | | | | | | | Epsilon: 0.0049392 | | 290 | 6 | Accept | 0.25931 | 0.12434 | 0.17747 | 228 | tree | MinLeafSize: 5898 | | 291 | 6 | Accept | 0.19899 | 0.099808 | 0.17747 | 228 | tree | MinLeafSize: 20 | | 292 | 6 | Accept | 0.20548 | 0.12388 | 0.17747 | 228 | tree | MinLeafSize: 4 | | 293 | 6 | Accept | 0.18895 | 0.15628 | 0.17747 | 910 | tree | MinLeafSize: 20 | | 294 | 6 | Accept | 0.19728 | 10.1 | 0.17747 | 910 | ensemble | Method: Bag | | | | | | | | | | NumLearningCycles: 255 | | | | | | | | | | MinLeafSize: 6 | | 295 | 6 | Accept | 0.2609 | 0.10755 | 0.17747 | 228 | svm | BoxConstraint: 48.918 | | | | | | | | | | KernelScale: 0.19215 | | | | | | | | | | Epsilon: 40.346 | | 296 | 6 | Accept | 0.19886 | 0.12588 | 0.17747 | 228 | tree | MinLeafSize: 7 | | 297 | 6 | Accept | 0.2613 | 0.10721 | 0.17747 | 228 | svm | BoxConstraint: 0.0029303 | | | | | | | | | | KernelScale: 0.0022101 | | | | | | | | | | Epsilon: 0.043672 | | 298 | 6 | Accept | 6.2139 | 0.17019 | 0.17747 | 228 | svm | BoxConstraint: 678.2 | | | | | | | | | | KernelScale: 0.0050689 | | | | | | | | | | Epsilon: 0.0020536 | | 299 | 6 | Accept | 0.19489 | 0.20124 | 0.17747 | 910 | tree | MinLeafSize: 7 | | 300 | 6 | Accept | 0.18562 | 0.25638 | 0.17747 | 3639 | tree | MinLeafSize: 20 | |=======================================================================================================================================================| | Iter | Active | Eval | log(1+valLoss)| Time for training | Observed min | Training set | Learner | Hyperparameter: Value | | | workers | resu...

Optimization completed.

- Total iterations: 340
- Total elapsed time: 429.3495 seconds
- Total time for training and validation: 2373.789 seconds

Best observed learner is an ensemble model with:

- Learner: ensemble
- Method: LSBoost
- NumLearningCycles: 232
- MinLeafSize: 21
- Observed log(1 + valLoss): 0.17747
- Time for training and validation: 113.9466 seconds

The Total elapsed time value shows that the ASHA optimization took less time to run than the Bayesian optimization: about 7 minutes (429 seconds).
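The Training set size column in the ASHA display takes only four values: 228, 910, 3639, and 14556. These values are consistent with a schedule in which each promotion multiplies the training subset by 4. The following minimal sketch reproduces those sizes. The reduction factor `eta` and the use of `ceil` are assumptions inferred from the displayed values, not documented fitrauto internals, and the variable names are hypothetical.

```matlab
% Sketch only: reproduce the training set sizes seen in the ASHA display.
% eta = 4 and the ceil rounding are assumptions inferred from the log output.
nFull = 14556;              % largest training set size shown in the display
eta = 4;                    % assumed growth factor between successive rungs
rungs = 3:-1:0;             % smallest subset first, full training set last

rungSizes = ceil(nFull ./ eta.^rungs);
disp(rungSizes)
```

Most iterations in the display use the smallest size (228 observations), and only a few promising models reach the full 14556 observations, which is why the ASHA run spends little time on poor candidates.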

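The final model returned by fitrauto corresponds to the best observed learner. Before returning the model, the function retrains it using the entire training data set (trainData), the listed Learner (or model) type, and the displayed hyperparameter values.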

Evaluate Test Set Performance

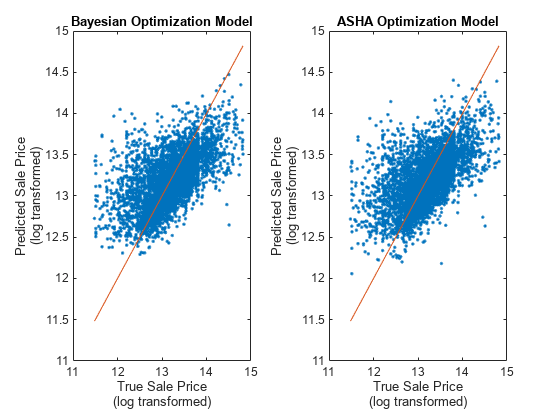

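Evaluate the performance of the returned models bayesianMdl and ashaMdl on the test set testData. For each model, compute the test set mean squared error (MSE), and take a log transform of the MSE to match the values in the verbose display of fitrauto. Smaller MSE (and log-transformed MSE) values indicate better performance.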

bayesianTestMSE = loss(bayesianMdl,testData,"saleprice");

bayesianTestError = log(1 + bayesianTestMSE)

bayesianTestError = 0.1793

ashaTestMSE = loss(ashaMdl,testData,"saleprice");

ashaTestError = log(1 + ashaTestMSE)

ashaTestError = 0.1793
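To make the log(1 + MSE) transform concrete, here is a minimal sketch that computes it by hand for a handful of values. The numbers in `yTrue` and `yPred` are hypothetical stand-ins for log-transformed sale prices and predictions; they are not taken from the NYCHousing2015 data.

```matlab
% Sketch only: yTrue and yPred are made-up values for illustration.
yTrue = [13.1; 12.8; 13.5; 14.0];   % hypothetical true responses
yPred = [13.0; 13.0; 13.4; 13.7];   % hypothetical predicted responses

mse = mean((yTrue - yPred).^2);     % mean squared error
logError = log(1 + mse);            % transform used in the fitrauto display
```

Because log(1 + x) is increasing in x, ranking models by the transformed value gives the same order as ranking them by the MSE itself.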

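For each model, compare the predicted test set response values to the true response values. Plot the predicted sale price along the vertical axis and the true sale price along the horizontal axis. Points on the reference line indicate correct predictions. A good model produces predictions that are scattered near the line. Use a 1-by-2 tiled layout to compare the results for the two models.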

bayesianTestPredictions = predict(bayesianMdl,testData);
ashaTestPredictions = predict(ashaMdl,testData);
tiledlayout(1,2)
nexttile
plot(testData.saleprice,bayesianTestPredictions,".")
hold on
plot(testData.saleprice,testData.saleprice) % Reference line
hold off
xlabel(["True Sale Price","(log transformed)"])
ylabel(["Predicted Sale Price","(log transformed)"])
title("Bayesian Optimization Model")
nexttile
plot(testData.saleprice,ashaTestPredictions,".")
hold on
plot(testData.saleprice,testData.saleprice) % Reference line
hold off
xlabel(["True Sale Price","(log transformed)"])
ylabel(["Predicted Sale Price","(log transformed)"])
title("ASHA Optimization Model")

Based on the log-transformed MSE values and the prediction plots, the bayesianMdl and ashaMdl models perform similarly well on the test set.

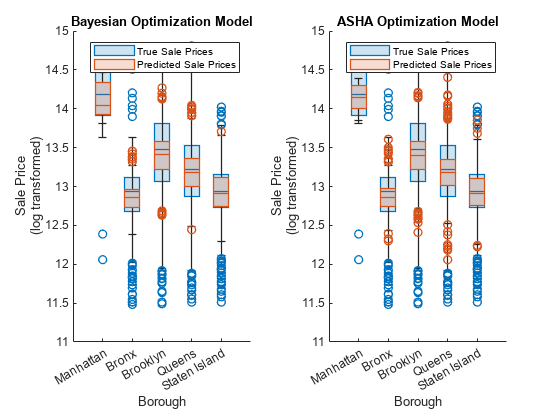

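For each model, use box plots to compare the distribution of predicted and true sale prices by borough. Create the box plots by using the boxchart function. Each box plot displays the median, the lower and upper quartiles, any outliers (computed using the interquartile range), and the minimum and maximum values that are not outliers. In particular, the line inside each box is the sample median, and the circular markers indicate outliers.

For each borough, compare the red box plot (showing the distribution of predicted sale prices) to the blue box plot (showing the distribution of true sale prices). Similar distributions for the predicted and true sale prices indicate good predictions. Use a 1-by-2 tiled layout to compare the results for the two models.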

tiledlayout(1,2)
nexttile
boxchart(testData.borough,testData.saleprice)
hold on
boxchart(testData.borough,bayesianTestPredictions)
hold off
legend(["True Sale Prices","Predicted Sale Prices"])
xlabel("Borough")
ylabel(["Sale Price","(log transformed)"])
title("Bayesian Optimization Model")
nexttile
boxchart(testData.borough,testData.saleprice)
hold on
boxchart(testData.borough,ashaTestPredictions)
hold off
legend(["True Sale Prices","Predicted Sale Prices"])
xlabel("Borough")
ylabel(["Sale Price","(log transformed)"])
title("ASHA Optimization Model")

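For both models, the predicted median sale price closely matches the median true sale price in each borough. The predicted sale prices seem to vary less than the true sale prices.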
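The observation about spread can also be checked numerically rather than by eye. The following minimal sketch compares the per-group standard deviation of true and predicted values by using groupsummary. The table `T` and its values are hypothetical stand-ins for testData and the model predictions, chosen only to illustrate the call.

```matlab
% Sketch only: T is a small made-up table, not the NYCHousing2015 test set.
borough = categorical(["Bronx";"Bronx";"Bronx";"Queens";"Queens";"Queens"]);
actual = [12.0; 13.0; 14.0; 12.5; 13.5; 14.5];      % hypothetical true prices
predicted = [12.6; 13.0; 13.4; 13.1; 13.5; 13.9];   % hypothetical predictions
T = table(borough,actual,predicted);

% Standard deviation of each variable within each borough
spread = groupsummary(T,"borough","std",["actual","predicted"]);
disp(spread)
```

In the resulting table, a std_predicted value smaller than std_actual for a borough corresponds to a narrower predicted box in the box chart.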

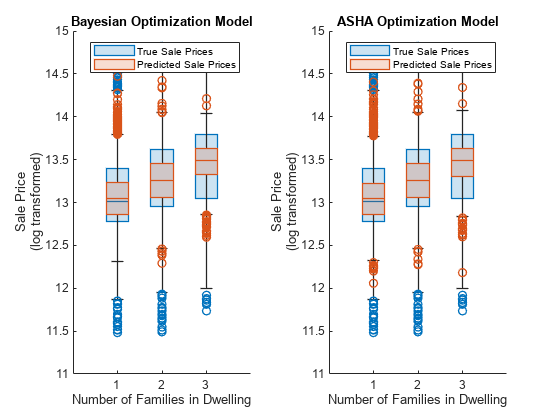

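For each model, display box charts that compare the distribution of predicted and true sale prices by the number of families in the dwelling. Use a 1-by-2 tiled layout to compare the results for the two models.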

tiledlayout(1,2)
nexttile
boxchart(testData.buildingclasscategory,testData.saleprice)
hold on
boxchart(testData.buildingclasscategory,bayesianTestPredictions)
hold off
legend(["True Sale Prices","Predicted Sale Prices"])
xlabel("Number of Families in Dwelling")
ylabel(["Sale Price","(log transformed)"])
title("Bayesian Optimization Model")
nexttile
boxchart(testData.buildingclasscategory,testData.saleprice)
hold on
boxchart(testData.buildingclasscategory,ashaTestPredictions)
hold off
legend(["True Sale Prices","Predicted Sale Prices"])
xlabel("Number of Families in Dwelling")
ylabel(["Sale Price","(log transformed)"])
title("ASHA Optimization Model")

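For both models, the predicted median sale price closely matches the median true sale price in each type of dwelling. The predicted sale prices seem to vary less than the true sale prices.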

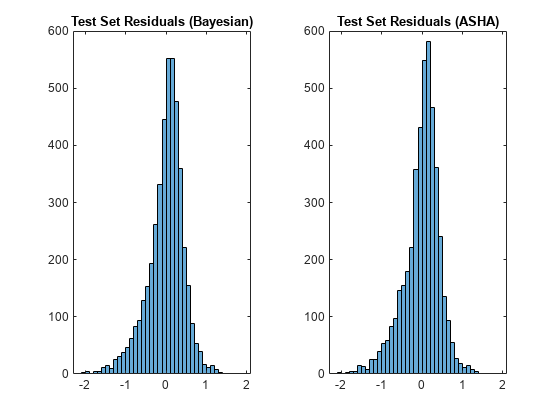

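For each model, plot a histogram of the test set residuals, and check that they are normally distributed. (Recall that the sale prices are log-transformed.) Use a 1-by-2 tiled layout to compare the results for the two models.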

bayesianTestResiduals = testData.saleprice - bayesianTestPredictions;
ashaTestResiduals = testData.saleprice - ashaTestPredictions;
tiledlayout(1,2)
nexttile
histogram(bayesianTestResiduals)
title("Test Set Residuals (Bayesian)")
nexttile
histogram(ashaTestResiduals)
title("Test Set Residuals (ASHA)")

Although the histograms are slightly left-skewed, they are both approximately symmetric about 0.
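One way to put a number on "slightly left-skewed" is the sample skewness of the residuals, which is negative when the left tail is longer. The following minimal sketch computes it from the definition. The vector `r` is a hypothetical set of residuals, not output from either model. Statistics and Machine Learning Toolbox also provides a skewness function that could be applied to the actual residual vectors.

```matlab
% Sketch only: r is a made-up residual vector with one large negative value.
r = [-3; -1; 0; 0.5; 1; 1; 1.5];

m = mean(r);                    % close to 0 for residuals centered at 0
s = std(r,1);                   % standard deviation normalized by n
skew = mean(((r - m)./s).^3);   % sample skewness; negative means left-skewed
```

A skewness near 0 together with a mean near 0 supports the reading that the residuals are roughly symmetric about 0.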

See Also

fitrauto | boxchart | histogram | BayesianOptimization