rlHybridStochasticActor

Hybrid stochastic actor with a hybrid action space for reinforcement learning agents

Since R2024b

Description

This object implements a function approximator to be used as a stochastic actor within a reinforcement learning agent with a hybrid action space (partly discrete and partly continuous). A hybrid stochastic actor takes an environment observation as input and returns as output a random action containing a discrete and a continuous part. The discrete part is sampled from a categorical (also known as multinoulli) probability distribution, and the continuous part is sampled from a parametrized Gaussian probability distribution. After you create an rlHybridStochasticActor object, use it to create an rlSACAgent agent with a hybrid action space. For more information on creating actors and critics, see Create Policies and Value Functions.
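The sampling scheme described above can be sketched in base MATLAB, without any toolbox. This is only an illustration of the idea, not the actor's internal implementation: the variable names and the numeric values for the probabilities, means, and standard deviations are hypothetical stand-ins for what the three network outputs might return for one observation.

```matlab
% Hypothetical outputs of the actor's three output layers for one observation.
discreteActions = [-1 0 1];      % possible discrete actions (assumed set)
probs = [0.25 0.5 0.25];         % categorical probabilities, one per discrete action
mu    = [0.1; -0.4];             % Gaussian mean of each continuous component
sigma = [0.5;  0.2];             % Gaussian standard deviation of each component

rng(0);                          % fix the seed so the sketch is repeatable

% Discrete part: inverse-CDF sampling from the categorical distribution.
idx = find(rand <= cumsum(probs), 1);
discretePart = discreteActions(idx);

% Continuous part: independent Gaussian sample for each component.
continuousPart = mu + sigma .* randn(size(mu));

% A hybrid action combines the two parts.
action = {discretePart, continuousPart};
```

Each call draws a new random action, which is why the actor is called stochastic: the same observation can produce different actions.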

Creation

Syntax

Description

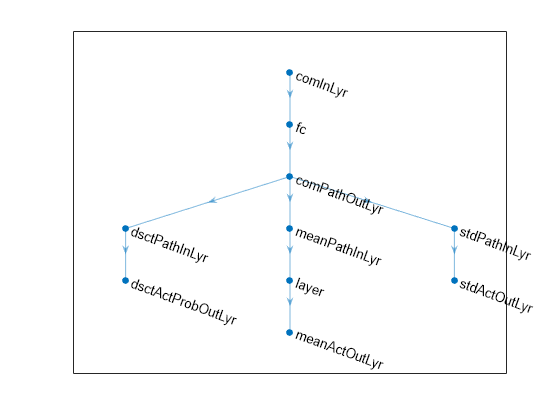

actor = rlHybridStochasticActor(net,observationInfo,actionInfo,DiscreteActionOutputNames=dsctOutLyrName,ContinuousActionMeanOutputNames=meanOutLyrName,ContinuousActionStandardDeviationOutputNames=stdOutLyrName) creates a hybrid stochastic actor using the deep neural network net as approximation model. Here, net must have three differently named output layers. One layer must return the probability of each possible discrete action, and the other two layers must return the mean and the standard deviation of the Gaussian distribution of each component of the continuous action, respectively. The actor uses the outputs of these three layers, identified by the names specified in the strings dsctOutLyrName, meanOutLyrName, and stdOutLyrName, to represent the probability distributions from which the discrete and continuous components of the action are sampled. This syntax sets the ObservationInfo and ActionInfo properties of actor to the input arguments observationInfo and actionInfo, respectively.
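As a minimal sketch of this syntax, the following code builds a small network with three named output layers and passes it to the constructor. Several details are assumptions rather than statements from this page: the specification sizes, the layer names (obsIn, common, discOut, meanOut, stdOut), the hidden layer width, the use of a vector containing one rlFiniteSetSpec followed by one rlNumericSpec as the hybrid action specification, and the choice of softmax and softplus layers to produce valid probabilities and nonnegative standard deviations. It requires Reinforcement Learning Toolbox and Deep Learning Toolbox.

```matlab
% Observation channel: 4-element column vector (illustrative size).
obsInfo = rlNumericSpec([4 1]);

% Hybrid action specification (assumed form): discrete channel, then continuous channel.
actInfo = [rlFiniteSetSpec([1 2 3]) rlNumericSpec([2 1])];

% Shared input path.
commonPath = [
    featureInputLayer(prod(obsInfo.Dimension), Name="obsIn")
    fullyConnectedLayer(16)
    reluLayer(Name="common")
    ];

% Discrete head: one probability per possible discrete action.
discPath = [
    fullyConnectedLayer(numel(actInfo(1).Elements), Name="discFC")
    softmaxLayer(Name="discOut")
    ];

% Continuous heads: mean and standard deviation of each continuous component.
meanPath = fullyConnectedLayer(prod(actInfo(2).Dimension), Name="meanOut");
stdPath = [
    fullyConnectedLayer(prod(actInfo(2).Dimension), Name="stdFC")
    softplusLayer(Name="stdOut")      % keeps standard deviations nonnegative
    ];

% Assemble and initialize the network.
net = dlnetwork;
net = addLayers(net, commonPath);
net = addLayers(net, discPath);
net = addLayers(net, meanPath);
net = addLayers(net, stdPath);
net = connectLayers(net, "common", "discFC");
net = connectLayers(net, "common", "meanOut");
net = connectLayers(net, "common", "stdFC");
net = initialize(net);

% Create the actor, naming the three output layers explicitly.
actor = rlHybridStochasticActor(net, obsInfo, actInfo, ...
    DiscreteActionOutputNames="discOut", ...
    ContinuousActionMeanOutputNames="meanOut", ...
    ContinuousActionStandardDeviationOutputNames="stdOut");

% Sample an action for a random observation.
act = getAction(actor, {rand(obsInfo.Dimension)});
```

Because the three heads share the common path, the discrete and continuous parts of the action are both conditioned on the same learned features of the observation.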

Note

actor does not enforce constraints set by the continuous action specification. When using this actor in an agent other than SAC, you must enforce action space constraints within the environment.
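One simple way to enforce such constraints is to saturate the continuous part of the action inside the environment step function before using it. The following base MATLAB sketch shows only the saturation step. The limit values and the variable names are hypothetical; in practice the limits could be read from the LowerLimit and UpperLimit properties of the continuous action specification.

```matlab
% Hypothetical limits of a two-element continuous action channel.
lowerLimit = [-1; -2];
upperLimit = [ 1;  2];

% Continuous action as received from the actor (first element exceeds its limit).
rawAction = [1.7; -0.3];

% Saturate each component to its allowed range.
saturatedAction = min(max(rawAction, lowerLimit), upperLimit);

disp(saturatedAction)   % displays 1 and -0.3
```

Saturation is the simplest choice. It changes the action actually applied to the environment without the actor being aware of it, so other schemes may be preferable depending on the agent.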

actor = rlHybridStochasticActor(___,Name=Value) specifies the names of the network input layers or sets the UseDevice property using one or more name-value arguments. Use this syntax with any of the input argument combinations in the preceding syntax. Specify the input layer names to explicitly associate the layers of your network with specific environment channels. To specify the device where computations for actor are executed, set the UseDevice property, for example UseDevice="gpu".

Input Arguments

Name-Value Arguments

Properties

Object Functions

| Function | Description |
| --- | --- |
| rlSACAgent | Soft actor-critic (SAC) reinforcement learning agent |
| getAction | Obtain action from agent, actor, or policy object given environment observations |
| evaluate | Evaluate function approximator object given observation (or observation-action) input data |
| gradient | (Not recommended) Evaluate gradient of function approximator object given observation and action input data |
| accelerate | (Not recommended) Option to accelerate computation of gradient for approximator object based on neural network |
| getLearnableParameters | Obtain learnable parameter values from agent, function approximator, or policy object |
| setLearnableParameters | Set learnable parameter values of agent, function approximator, or policy object |
| setModel | Set approximation model in function approximator object |
| getModel | Get approximation model from function approximator object |

Examples

Version History

Introduced in R2024b