I am very excited to share my new book "Data-Driven Methods for Dynamic Systems," available from SIAM Publishing: https://epubs.siam.org/doi/10.1137/1.9781611978162

This book brings together modern computational tools to provide an accurate understanding of dynamic data. The techniques build on pencil-and-paper mathematical techniques that go back decades and sometimes even centuries. The result is an introduction to state-of-the-art methods that complement, rather than replace, traditional analysis of time-dependent systems. One can find methods in this book that are not found in other books, as well as methods developed exclusively for the book itself. I also provide an example-driven exploration that is (hopefully) appealing to graduate students and researchers who are new to the subject.

Every example in the book can be reproduced using the code in this repo: https://github.com/jbramburger/DataDrivenDynSyst

Hope you like it!

Christmas season is underway at my house:

(Sorry, the ornament is not available at the MathWorks Merch Shop. I made it with a 3-D printer.)

Is it possible to differentiate the input, output, and in-between wires by color?

Hello, MATLAB fans!

For years, many of you have expressed interest in getting your hands on some cool MathWorks merchandise. I'm thrilled to announce that the wait is over: the MathWorks Merch Shop is officially open!

In our shop, you'll find a variety of exciting items, including baseball caps, mugs, T-shirts, and YETI bottles.

Visit the shop today and explore all the fantastic merchandise we have to offer. Happy shopping!

I was curious to try out your new AI Chat Playground.

The first screen that popped up displayed this statement:

"Please keep in mind that AI sometimes writes code and text that seems accurate, but isn't"

Can someone elaborate on what exactly this means with respect to your AI Chat Playground's integration with the MATLAB tools?

Are there any accuracy metrics for this integration?

It would be nice to have a function to shade between two curves. This is a common question asked on Answers and there are some File Exchange entries on it but it's such a common thing to want to do I think there should be a built in function for it. I'm thinking of something like

plotsWithShading(x1, y1, 'r-', x2, y2, 'b-', 'ShadingColor', [.7, .5, .3], 'Opacity', 0.5);

This would let us specify the coordinates of the two curves, the shading color, and its opacity, and it would shade the region between the two curves where their x ranges overlap. Other options should also be accepted, like line width, line style, markers or not, etc. Perhaps all those options could be put into a structure as fields, like

plotsWithShading(x1, y1, options1, x2, y2, options2, 'ShadingColor', [.7, .5, .3], 'Opacity', 0.5);

The shading options could also (optionally) be a structure. I know it can be done with a series of other functions like patch or fill, but it's tricky and not obvious, as the number of questions about how to do it shows.

Does anyone else think this would be a convenient function to add?
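Until something like that is built in, the usual workaround can be sketched as below. This is a minimal example, assuming both curves are single-valued functions of x: it resamples them with interp1 onto the x range where they overlap, then passes a closed polygon to fill. The sample data, color, and opacity are illustrative only, and plotsWithShading itself remains a hypothetical function.

```matlab
% Two curves sampled on different x grids (illustrative data only)
x1 = linspace(0, 10, 150);   y1 = sin(x1) + 2;
x2 = linspace(2, 12, 90);    y2 = cos(x2);

% Common grid restricted to the x range where both curves are defined
xLo = max(min(x1), min(x2));
xHi = min(max(x1), max(x2));
xo  = linspace(xLo, xHi, 200);
ya  = interp1(x1, y1, xo);   % curve 1 resampled on the overlap
yb  = interp1(x2, y2, xo);   % curve 2 resampled on the overlap

% Closed polygon: forward along curve 1, then backward along curve 2
xPoly = [xo, fliplr(xo)];
yPoly = [ya, fliplr(yb)];

figure; hold on
% Shade first so the curves are drawn on top of the patch
fill(xPoly, yPoly, [.7, .5, .3], 'FaceAlpha', 0.5, 'EdgeColor', 'none');
plot(x1, y1, 'r-', x2, y2, 'b-', 'LineWidth', 1.5);
hold off
```

Tracing forward along one curve and back along the other is what makes fill shade the area between them. Because interp1 returns NaN outside a curve's x range by default, the common grid is limited to the overlap.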

My favorite image processing book is The Image Processing Handbook by John Russ. It shows a wide variety of algorithm examples drawn from many image sources and techniques. It's light on math, so it's easy to read. Both hardcover and e-book editions are available on Amazon.com: Image Processing Handbook

There is also a book by Steve Eddins, former leader of the image processing team at MathWorks, that comes with MATLAB code: Digital Image Processing Using MATLAB

You might also want to look at the free online book http://szeliski.org/Book/

In the past two years, large language models have brought us significant changes, leading to the emergence of programming tools such as GitHub Copilot, Tabnine, Kite, CodeGPT, Replit, Cursor, and many others. Most of these tools support code writing by providing auto-completion, prompts, and suggestions, and they can be easily integrated with various IDEs.

As far as I know, aside from the MATLAB-VSCode/MatGPT plugin, there are no such AI assistant plugins for the native MATLAB Desktop, although third-party plugins can provide intelligent programming assistance. I hope a native tool of this kind will eventually be built in.

What incredible short movies can be crafted with no more than 2000 characters of MATLAB code? Discover the creativity in our GALLERY from the MATLAB Shorts Mini Hack contest.

Vote for your favorite short movies by Nov. 10. We are giving out MATLAB T-shirts to 10 lucky voters!

Tip: the more you vote, the higher your chance of winning.

Mark your calendar for November 13–14 and get ready for two days of learning, inspiration, and connections!

We are thrilled to announce that MathWorks’ incredible María Elena Gavilán Alfonso has been selected as a keynote speaker at this year’s MATLAB Expo.

Her session, "From Embedded to Empowered: The Rise of Software-Defined Products," promises to be a game-changer! With her expertise and insights, María is set to inspire and elevate our understanding of the evolving world of software-defined products.

I know we have all been in that all-too-common situation of needing to inefficiently identify prime numbers using only a regular expression... and now Matt Parker from Standup Maths helpfully released a YouTube video entitled "How on Earth does ^.?$|^(..+?)\1+$ produce primes?" in which he explains a simple regular expression (aka Halloween incantation) which matches composite numbers:

Here is my first attempt using MATLAB and Matt Parker's example values:

fnh = @(n) isempty(regexp(repelem('*',n),'^.?$|^(..+?)\1+$','emptymatch'));

fnh(13)

fnh(15)

fnh(101)

fnh(1000)
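To see the incantation work on more than a handful of values, the sketch below (an illustrative extension, not taken from Matt Parker's video) applies the same handle to every integer from 0 to 50 with arrayfun and compares the result with MATLAB's built-in primes function. The range 0:50 is an arbitrary choice; since the regex backtracks over a unary string, this is a curiosity rather than a practical primality test.

```matlab
% Unary primality test: n is written as a string of n asterisks.
%   ^.?$         matches length 0 or 1 (neither is prime)
%   ^(..+?)\1+$  matches a block of 2+ characters repeated 2+ times,
%                i.e. n = a*b with a >= 2 and b >= 2 (composite)
% 'emptymatch' lets the pattern match the empty string, so n = 0 is rejected.
fnh = @(n) isempty(regexp(repelem('*', n), '^.?$|^(..+?)\1+$', 'emptymatch'));

N = 0:50;
isP = arrayfun(fnh, N);            % true where the regex finds no match
regexPrimes = N(isP)
sameAsBuiltin = isequal(regexPrimes, primes(50))
```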

Feel free to try/modify the incantation yourself. Happy Halloween!

Watch episodes 5-7 for the new stuff, but the whole series is really great.

Hello! The MathWorks Book Program is thrilled to welcome you to our discussion channel dedicated to books on MATLAB and Simulink. Here, you can:

- Promote Your Books: Are you an author of a book on MATLAB or Simulink? Feel free to share your work with our community. We’re eager to learn about your insights and contributions to the field.

- Request Recommendations: Looking for a book on a specific topic? Whether you're diving into advanced simulations or just starting with MATLAB, our community is here to help you find the perfect read.

- Ask Questions: Curious about the MathWorks Book Program, or need guidance on finding resources? Post your questions and let our knowledgeable community assist you.

We’re excited to see the discussions and exchanges that will unfold here. Whether you're an expert or a beginner, there's a place for you in our community. Let's embark on this journey together!

Welcome to the launch of our new blog area, Semiconductor Design and Verification! The mission is to empower engineers and designers in the semiconductor industry by streamlining architectural exploration, optimizing the post-processing of simulations, and enabling early verification with MATLAB and Simulink.

Meet Our Authors

We are thrilled to have two esteemed authors:

@Ganesh Rathinavel and @Cristian Macario Macario have both made significant contributions to the advancement of Analog/Mixed-Signal design and the broader communications, electronics, and semiconductor industries. With impressive engineering backgrounds and extensive experience at leading companies such as IMEC, STMicroelectronics, NXP Semiconductors, LSI Corporation, and ARM, they bring a wealth of knowledge and expertise to our blog. Their work is focused on enhancing MathWorks' tools to better align with industry needs.

What to Expect

The blog will cover a wide range of topics aimed at professionals in the semiconductor field, providing insights and strategies to enhance your design and verification processes. Whether you're looking to streamline your current workflows or explore cutting-edge methodologies, our blog is your go-to resource.

Call to Action

We invite all professionals and enthusiasts in the semiconductor industry to follow our blog posts. Stay updated with the latest trends and insights by subscribing to our blog.

Don’t miss the first post: Accelerating Mixed-Signal Design with Early Behavioral Models, where they explore how early behavioral modeling can accelerate mixed-signal design and enhance system efficiency.

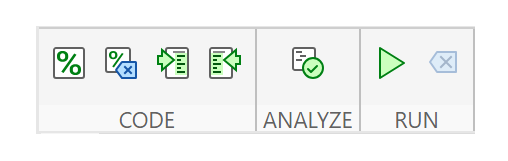

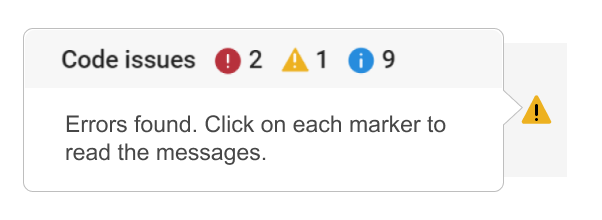

We are happy to announce the addition of a new code analysis feature to the AI Chat Playground. This new feature identifies issues in your code, making it easier to troubleshoot.

How does it work?

Just click the ANALYZE button in the toolbar of the code editor. Your code is sent to MATLAB running on a server, which returns any warnings or errors. Each one is associated with a line of code and marked on the right side of the editor window; hover over a line marker to view its message.
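For comparison, a similar per-line report can be produced on a local MATLAB installation with the Code Analyzer function checkcode (newer releases also offer codeIssues). This is only an analogous workflow, not a description of what the Playground actually runs on its server. The file name demoFcn.m and its contents are hypothetical, chosen so that the analyzer has an unused variable to flag.

```matlab
% Write a small, hypothetical function file containing an unused variable
fname = fullfile(tempdir, 'demoFcn.m');
fid = fopen(fname, 'w');
fprintf(fid, 'function out = demoFcn(a)\n');
fprintf(fid, 'unused = 3;\n');          % assigned but never used
fprintf(fid, 'out = a + 1;\n');
fprintf(fid, 'end\n');
fclose(fid);

% Run the Code Analyzer; each struct element carries a message and a line number
info = checkcode(fname);
for k = 1:numel(info)
    fprintf('Line %d: %s\n', info(k).line, info(k).message);
end

delete(fname);                          % clean up the temporary file
```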

Give it a try and share your feedback here. We will be adding this new capability to other community areas in the future so your feedback is appreciated.

Thank you,

David

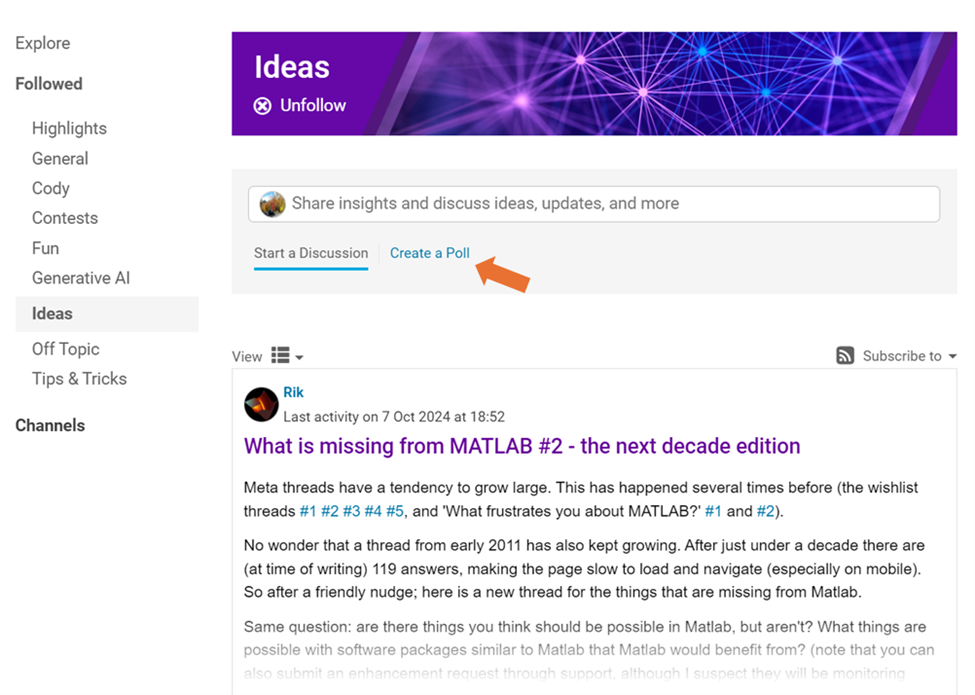

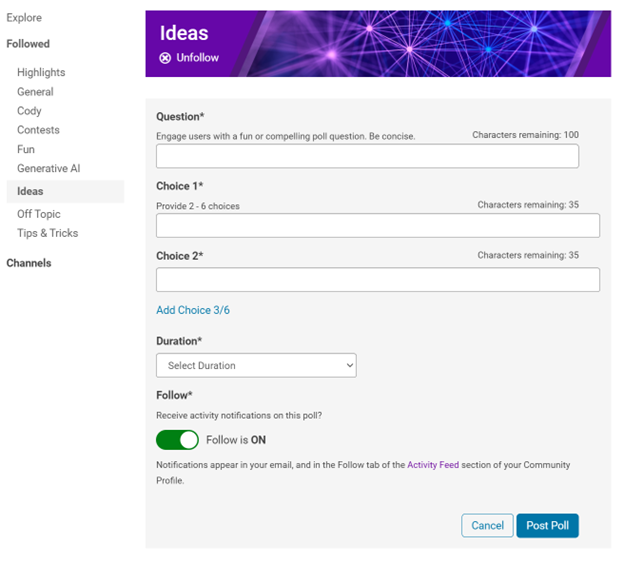

We are thrilled to announce that every community member now has the ability to create a poll in Discussions, allowing you to gather votes and opinions from the community.

How to create a poll:

You can find the ‘Create a Poll’ link just below the text box (see screenshot below). Please note that the default type of content is a ‘Discussion’. To start a poll, simply click the link.

Creating a poll is straightforward. You can add up to 6 choices for your poll and set the duration from 1 to 6 weeks.

Where to find the poll

Polls created by community members appear only in the channel where they were created and on the landing page of the Discussions area. Discussions moderators can feature (broadcast) a poll across Answers, File Exchange, and Cody.

Thoughts?

We can’t wait to see what interesting polls our community will create. Meanwhile, if you have any questions or suggestions, feel free to leave a comment.