Fusion of Radar and Lidar Data Using ROS

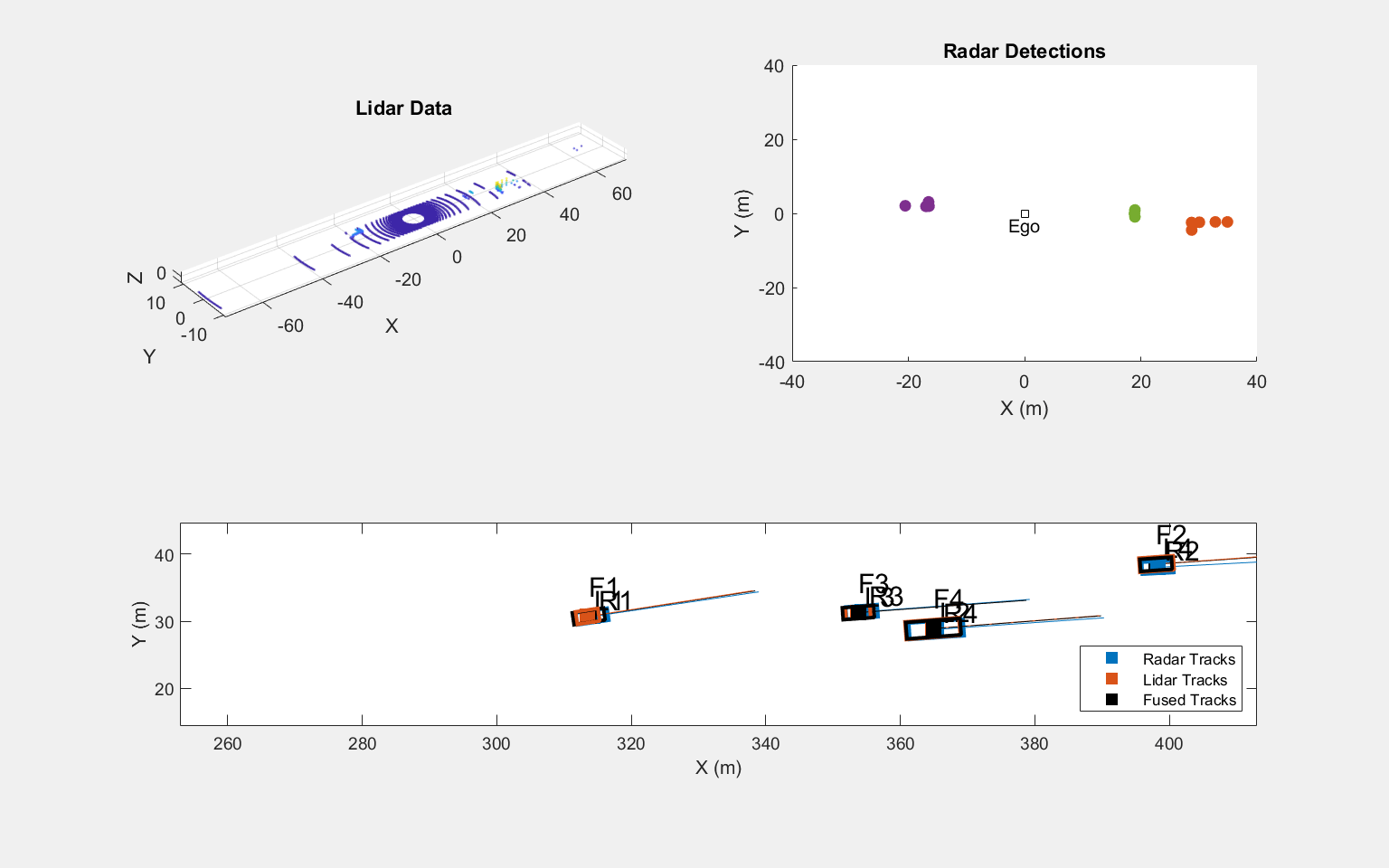

Perform track-level sensor fusion on recorded lidar sensor data for a driving scenario recorded on a rosbag. This example uses the same driving scenario and sensor fusion as the Track-Level Fusion of Radar and Lidar Data (Sensor Fusion and Tracking Toolbox) example, but uses a prerecorded rosbag instead of the driving scenario simulation.

Extract Sensor Data from Rosbag

This provides an example rosbag containing lidar, radar, and vehicle data, and is approximately 33MB in size. Download the rosbag from the MathWorks website.

bagFile = matlab.internal.examples.downloadSupportFile("ros","rosbags/simulated_lidar_radar_driving_798.bag");

Access the rosbag and view the available topics.

bag = rosbag(bagFile); disp(bag.AvailableTopics(:,["NumMessages", "MessageType"]))

NumMessages MessageType

___________ ________________________________________

/clock 114 rosgraph_msgs/Clock

/ego/lidar_scan 114 sensor_msgs/PointCloud2

/ego/radar_1/detections 114 cob_object_detection_msgs/DetectionArray

/ego/radar_2/detections 114 cob_object_detection_msgs/DetectionArray

/ego/radar_3/detections 114 cob_object_detection_msgs/DetectionArray

/ego/radar_4/detections 114 cob_object_detection_msgs/DetectionArray

/ego/state 114 nav_msgs/Odometry

The ego vehicle has one lidar and four radar sensors, as well as absolute positional information for itself. These messages can be extracted into separate arrays for later fusion. Because this rosbag is compressed to reduce file size, reading the messages may take a few seconds. Extract the messages as structures using the DataFormat name-value argument, which improves overall performance for ROS messages.

lidarBagSel = select(bag,"Topic","/ego/lidar_scan"); lidarMsgs = readMessages(lidarBagSel,"DataFormat","struct"); stateBagSel = select(bag,"Topic","/ego/state"); stateMsgs = readMessages(stateBagSel,"DataFormat","struct"); radarMsgs = cell(bag.AvailableTopics{"/ego/radar_1/detections","NumMessages"},4); for idxRadar = 1:4 radarBagSel = select(bag,"Topic",sprintf("/ego/radar_%d/detections",idxRadar)); radarMsgs(:,idxRadar) = readMessages(radarBagSel,"DataFormat","struct"); end

Make timestamps to be used with fusion relative to the first message timestamp. Note that the bag StartTime cannot be used since that is the timestamp the first message was recorded at, which is later than the message timestamp.

clockBagSel = select(bag,"Topic","/clock"); clockMsg = readMessages(bag,1,"DataFormat","struct"); startTime = double(clockMsg{1}.Clock_.Sec) + double(clockMsg{1}.Clock_.Nsec)*1e-9;

Set Up Sensor Tracking and Fusion

Information about the sensors is known based on the vehicle set up, and needs to be put in a format usable by the tracking algorithm.

[lidarInfo, radarInfo, radarParameters] = helperRadarLidarInfo;

Radar and lidar tracking algorithms are necessary to process the high-resolution scans and determine the objects viewed in the scans without repeats. These algorithms are defined as helper functions. More details on the algorithms can be seen in the Track-Level Fusion of Radar and Lidar Data (Sensor Fusion and Tracking Toolbox) example.

radarTrackingAlgorithm = helperROSRadarTracker(radarInfo); radarConfig = fuserSourceConfiguration("SourceIndex",1,... "IsInitializingCentralTracks",true,... "CentralToLocalTransformFcn",@central2radar,... "LocalToCentralTransformFcn",@radar2central); lidarTrackingAlgorithm = helperLidarTrackingAlgorithm(lidarInfo); lidarConfig = fuserSourceConfiguration("SourceIndex",2,... "IsInitializingCentralTracks",true); fuser = trackFuser("SourceConfigurations",{radarConfig;lidarConfig},... "StateTransitionFcn",lidarTrackingAlgorithm.StateTransitionFcn,... "ProcessNoise",diag([1 3 1]),... "HasAdditiveProcessNoise",false,... "AssignmentThreshold",[250 inf],... "ConfirmationThreshold",[3 5],... "DeletionThreshold",[5 5],... "StateFusion","Custom",... "CustomStateFusionFcn",@helperRadarLidarFusionFcn);

Visualization

Set up figure and properties for visualization of sensor data using a helper function.

displayHelper = helperROSSensorFusionDisplay;

Perform Fusion on Sensor Messages

Iterate through the messages and run the sensor fusion algorithm. Watch the visualization to see the rosbag played back and the tracking of vehicles using the sensor fusion.

The sensors were triggered to all measure simultaneously, and all radar sensors published a message at each triggering, even if no detections were present. Because all the message arrays are all the same length, the processing of the sensor data is far simpler. If sensors triggered at distinct rates, connection issue occurred, or messages were received out of order, an intermediate step of synchronizing the sensor data may be necessary.

for idx = 1:numel(lidarMsgs) % Extract time on first sensor reading. % Make time relative to rosbag start time for easier tracking. lidarTimeStamp = lidarMsgs{idx}.Header.Stamp; lidarTime = double(lidarTimeStamp.Sec) + ... double(lidarTimeStamp.Nsec)*1e-9 - startTime; radarTimeStamp = radarMsgs{idx,1}.Header.Stamp; radarTime = double(radarTimeStamp.Sec) + ... double(radarTimeStamp.Nsec)*1e-9 - startTime; % Extract vehicle state and modify structures for processing. egoPose = struct; stateMsg = stateMsgs{idx}; positionMsg = stateMsg.Pose.Pose.Position; egoPose.Position = [positionMsg.X ; positionMsg.Y ; positionMsg.Z]; % Orientation in degrees. orientQuat = rosReadQuaternion(stateMsg.Pose.Pose.Orientation); orientEul = eulerd(orientQuat,"XYZ","point"); egoPose.Roll = orientEul(1); egoPose.Pitch = orientEul(2); egoPose.Yaw = orientEul(3); % By convension, nav_msgs/Odometry velocity is provided in the child % reference frame (the vehicle). The fusion requires velocity in the world % reference frame. velMsg = stateMsg.Twist.Twist.Linear; egoPose.Velocity = rotatepoint(orientQuat,... [velMsg.X velMsg.Y velMsg.Z]); % Extract point cloud from lidar for processing % This lidar provided no RGB or intensity information lidarXYZPoints = rosReadXYZ(lidarMsgs{idx}); ptCloud = pointCloud(lidarXYZPoints); % Extract radar detections into a single array using metadata to % specify the source sensor. nDetections = sum(cellfun(@(msg) numel(msg.Detections),radarMsgs(idx,:))); radarDetections = cell(nDetections,1); % Preallocate idxDetection = 1; for idxRadar = 1:size(radarMsgs,2) for idxRadarDetection = 1:numel(radarMsgs{idx,idxRadar}.Detections) detMsg = radarMsgs{idx,idxRadar}.Detections(idxRadarDetection); detTime = double(detMsg.Header.Stamp.Sec) + ... double(detMsg.Header.Stamp.Nsec)*1e-9 - startTime; measureMsg = detMsg.Pose.Pose.Position; measurement = [measureMsg.X ; measureMsg.Y ; measureMsg.Z]; % Measurement noise is stored in the bounding box field due to % this message type containing Pose instead of PoseCovariance. measureNoise = diag([detMsg.BoundingBoxLwh.X detMsg.BoundingBoxLwh.Y detMsg.BoundingBoxLwh.Z]); % Store signal-to-noise ratio in Score field. objectAttributes = struct("TargetIndex",detMsg.Id,"SNR",detMsg.Score); radarDetections{idxDetection} = objectDetection(detTime,measurement,... "MeasurementNoise",measureNoise,... "SensorIndex",idxRadar,... "ObjectClassID",0,... "ObjectAttributes",{objectAttributes},... "MeasurementParameters",{radarParameters(idxRadar)}); idxDetection = idxDetection + 1; end end % Generate sensor tracks and analysis information like the bounding box % detections and point cloud segmentation information. radarTracks = radarTrackingAlgorithm(egoPose, radarDetections, radarTime); [lidarTracks, lidarDetections, segmentationInfo] = ... lidarTrackingAlgorithm(egoPose, ptCloud, lidarTime); localTracks = [radarTracks ; lidarTracks]; % Update the fuser. The first call must contain one local track. if ~(isempty(localTracks) && ~isLocked(fuser)) fusedTracks = fuser(localTracks,lidarTime); else fusedTracks = objectTrack.empty(0,1); end % Update the display updateSensorData(displayHelper,ptCloud,radarDetections) plotTracks(displayHelper,radarTracks,lidarTracks,fusedTracks,egoPose) end

Utility Functions

The following function definitions are used in the above script.

radar2central

function centralTrack = radar2central(radarTrack) % Initialize a track of the correct state size centralTrack = objectTrack('State',zeros(10,1),... 'StateCovariance',eye(10)); % Sync properties of radarTrack except State and StateCovariance with % radarTrack See syncTrack defined below. centralTrack = syncTrack(centralTrack,radarTrack); xRadar = radarTrack.State; PRadar = radarTrack.StateCovariance; H = zeros(10,7); % Radar to central linear transformation matrix H(1,1) = 1; H(2,2) = 1; H(3,3) = 1; H(4,4) = 1; H(5,5) = 1; H(8,6) = 1; H(9,7) = 1; xCentral = H*xRadar; % Linear state transformation PCentral = H*PRadar*H'; % Linear covariance transformation PCentral([6 7 10],[6 7 10]) = eye(3); % Unobserved states % Set state and covariance of central track centralTrack.State = xCentral; centralTrack.StateCovariance = PCentral; end

central2radar

function radarTrack = central2radar(centralTrack) % Initialize a track of the correct state size radarTrack = objectTrack('State',zeros(7,1),... 'StateCovariance',eye(7)); % Sync properties of centralTrack except State and StateCovariance with % radarTrack See syncTrack defined below. radarTrack = syncTrack(radarTrack,centralTrack); xCentral = centralTrack.State; PCentral = centralTrack.StateCovariance; H = zeros(7,10); % Central to radar linear transformation matrix H(1,1) = 1; H(2,2) = 1; H(3,3) = 1; H(4,4) = 1; H(5,5) = 1; H(6,8) = 1; H(7,9) = 1; xRadar = H*xCentral; % Linear state transformation PRadar = H*PCentral*H'; % Linear covariance transformation % Set state and covariance of radar track radarTrack.State = xRadar; radarTrack.StateCovariance = PRadar; end

syncTrack

function tr1 = syncTrack(tr1,tr2) props = properties(tr1); notState = ~strcmpi(props,'State'); notCov = ~strcmpi(props,'StateCovariance'); props = props(notState & notCov); for i = 1:numel(props) tr1.(props{i}) = tr2.(props{i}); end end