acfObjectDetectorMonoCamera

Detect objects in monocular camera using aggregate channel features

Description

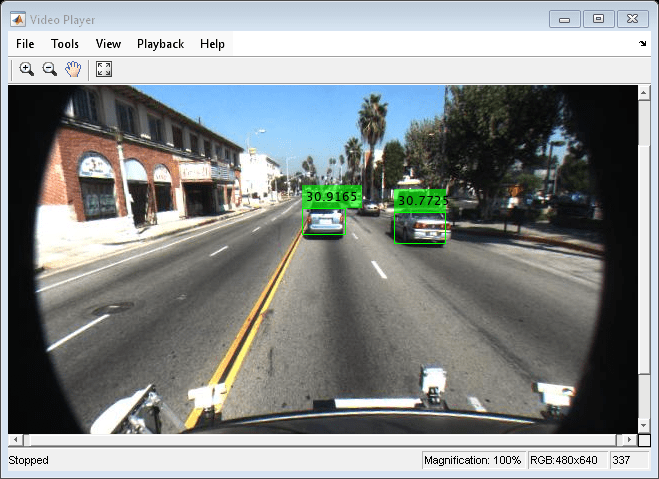

The acfObjectDetectorMonoCamera object contains information about an aggregate channel features (ACF) object detector that is configured for use with a monocular camera sensor. To detect objects in an image that was captured by the camera, pass the detector to the detect function.

Creation

1. Create an acfObjectDetector object by calling the trainACFObjectDetector function with training data.

   detector = trainACFObjectDetector(trainingData,...);

   Alternatively, create a pretrained detector using functions such as vehicleDetectorACF or peopleDetectorACF.

2. Create a monoCamera object to model the monocular camera sensor.

   sensor = monoCamera(...);

3. Create an acfObjectDetectorMonoCamera object by passing the detector and sensor as inputs to the configureDetectorMonoCamera function. The configured detector inherits property values from the original detector.

   configuredDetector = configureDetectorMonoCamera(detector,sensor,...);
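The creation steps above can be sketched end to end as follows. This is a minimal illustration, not a reference configuration: it uses the pretrained vehicleDetectorACF detector instead of a trained one, and the camera intrinsics, mounting height, pitch, and vehicle width range are all assumed placeholder values that you would replace with those of your own sensor and target objects. It requires Automated Driving Toolbox and Computer Vision Toolbox.

```matlab
% Step 1: obtain an acfObjectDetector (here, a pretrained vehicle detector).
detector = vehicleDetectorACF;

% Step 2: model the monocular camera sensor.
% All numeric values below are assumed placeholders, not calibrated data.
focalLength    = [309.4362 344.2161];   % [fx fy] in pixels
principalPoint = [318.9034 257.5352];   % [cx cy] in pixels
imageSize      = [480 640];             % [rows cols]
height         = 2.1798;                % camera height above ground, in meters
pitch          = 14;                    % pitch toward the ground, in degrees

intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);
sensor     = monoCamera(intrinsics,height,'Pitch',pitch);

% Step 3: configure the detector for the sensor.
% vehicleWidth is an assumed [min max] object width range in meters.
vehicleWidth = [1.5 2.5];
configuredDetector = configureDetectorMonoCamera(detector,sensor,vehicleWidth);

% The result is an acfObjectDetectorMonoCamera object.
disp(class(configuredDetector))
```

Supplying a width range lets the configured detector restrict its search to boxes whose real-world size is plausible for the object class, given the camera geometry.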

Properties

Object Functions

| detect | Detect objects using ACF object detector configured for monocular camera |
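As a rough sketch of how detect is typically called on a configured detector, the snippet below builds a detector with assumed placeholder camera parameters and runs it on one image. The image file name carsinfront.png is an assumption (substitute any image captured by the modeled camera), and the two-output form of detect returning bounding boxes and scores is the only syntax shown here.

```matlab
% Build a configured detector. Camera values are assumed placeholders.
intrinsics = cameraIntrinsics([309.4362 344.2161],[318.9034 257.5352],[480 640]);
sensor     = monoCamera(intrinsics,2.1798,'Pitch',14);
configuredDetector = configureDetectorMonoCamera(vehicleDetectorACF,sensor,[1.5 2.5]);

% Read an image from the camera (file name is an assumption).
I = imread('carsinfront.png');

% Detect objects. bboxes is M-by-4 ([x y width height]); scores is M-by-1.
[bboxes,scores] = detect(configuredDetector,I);

% Annotate the image only if something was found.
annotated = I;
if ~isempty(bboxes)
    annotated = insertObjectAnnotation(I,'rectangle',bboxes,scores,'Color','g');
end
```

To inspect the result, display the annotated image with imshow(annotated).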

Examples

Extended Capabilities

Version History

Introduced in R2017a