
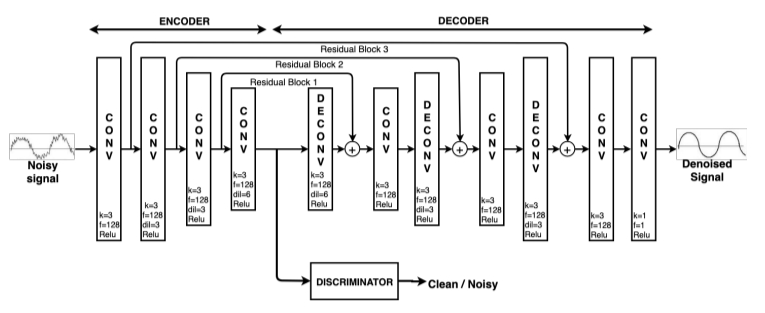

I am a beginner in deep learning and have run into some problems while working through the MATLAB example "Denoise Signals with Adversarial Learning Denoiser Model". I would really appreciate any help!

1. Visualization of the features

My understanding is that the encoded representation of the autoencoder contains the features of the original signal. However, in this example the output dimension of the encoder is 64-by-SignalLength. Does that mean every sample point of the signal has 64 features?

2. Usage of the residual blocks

The encoder-decoder model uses residual blocks, which help reconstruct the denoised signal from the latent space. However, only the encoder output is connected to the discriminator. Doesn't that cause a problem: most features would be learned by the residual blocks, and only the few features that can confuse the discriminator would be learned by the encoder and passed to the discriminator?
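To make question 1 concrete: assuming the encoder is built from 1-D convolutions with stride 1 and 'same' padding (consistent with the 64-by-SignalLength size, though this sketch does not reproduce the example's actual layers), each of the 64 channels is the output of one learned filter slid along the whole signal. So every sample does get a 64-element vector, but each element describes the signal's neighborhood around that sample rather than the sample in isolation. The sketch below uses random, untrained filters purely to show the shapes involved and one simple way to visualize them; `T`, `filterLen`, and the input are hypothetical.

```matlab
% Shape illustration only: random, untrained filters, NOT the example's encoder.
T = 500;                            % hypothetical signal length
numChannels = 64;                   % matches the 64 channels in the encoder output
filterLen = 9;                      % hypothetical filter length
x = randn(1, T);                    % stand-in for a noisy input signal
W = randn(numChannels, filterLen);  % one filter per output channel

features = zeros(numChannels, T);
for c = 1:numChannels
    % Each filter slides over the whole signal: one output value per sample
    features(c, :) = conv(x, W(c, :), 'same');
end

% Column t is the 64-element feature vector for the neighborhood of sample t
imagesc(features);
xlabel('Sample index');
ylabel('Feature channel');
colorbar;
```

For the real model, the same `imagesc` view can be applied to the encoder activations of a trained network to see which channels respond to which parts of the signal.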

Is there a reason for TMW not to invest in 3D polyshapes? Is the mathematical complexity of having all the same operations in 3D (union, intersection, subtract,...) prohibitive?

I have been developing a neural net to extract a set of generative parameters from an image of a 2-D NMR spectrum. I use two convolution layers, each followed by a fully connected layer; the two branches are joined by an addition layer, which feeds a regression layer. This trains fine, but the results are suboptimal. I would like to add a fully connected layer between the addition layer and the regression layer, but training with the default training scripts simply won't converge. Any suggestions? Maybe I could start from the pre-trained weights for the convolution layers, but I don't know how to do this.
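A minimal sketch of the architecture described above with the extra fully connected layer, assuming Deep Learning Toolbox and a `layerGraph`-based network. The input size, filter sizes, layer widths, and the name `oldNet` are hypothetical placeholders. Two details that often matter: a fully connected layer placed right after the addition of two linear layers adds no expressive power unless a nonlinearity (here a ReLU) sits between them, and setting a layer's learn-rate factors to 0 freezes weights copied from an earlier, trained network.

```matlab
% Hypothetical sizes: replace with your own
inputSize   = [128 128 1];   % image size of the 2-D NMR spectrum
numParams   = 6;             % number of generative parameters to regress
hiddenUnits = 64;            % width of the per-branch fully connected layers

% Branch A, the addition layer, and the new head
layersA = [
    imageInputLayer(inputSize, 'Name', 'input')
    convolution2dLayer(5, 16, 'Padding', 'same', 'Name', 'convA')
    reluLayer('Name', 'reluA')
    fullyConnectedLayer(hiddenUnits, 'Name', 'fcA')
    additionLayer(2, 'Name', 'add')
    reluLayer('Name', 'reluAdd')                     % nonlinearity before the new layer
    fullyConnectedLayer(numParams, 'Name', 'fcOut')  % the added fully connected layer
    regressionLayer('Name', 'output')];

% Branch B, feeding the second input of the addition layer
layersB = [
    convolution2dLayer(3, 16, 'Padding', 'same', 'Name', 'convB')
    reluLayer('Name', 'reluB')
    fullyConnectedLayer(hiddenUnits, 'Name', 'fcB')];

lgraph = layerGraph(layersA);            % fcA -> add/in1 is connected automatically
lgraph = addLayers(lgraph, layersB);
lgraph = connectLayers(lgraph, 'input', 'convB');
lgraph = connectLayers(lgraph, 'fcB', 'add/in2');

% To reuse trained convolution weights from a previous network "oldNet"
% (hypothetical name) and freeze them:
%   oldConv = oldNet.Layers(strcmp({oldNet.Layers.Name}, 'convA'));
%   newConv = convolution2dLayer(5, 16, 'Padding', 'same', 'Name', 'convA', ...
%       'Weights', oldConv.Weights, 'Bias', oldConv.Bias, ...
%       'WeightLearnRateFactor', 0, 'BiasLearnRateFactor', 0);
%   lgraph = replaceLayer(lgraph, 'convA', newConv);
```

If training still fails to converge, lowering 'InitialLearnRate' in trainingOptions or normalizing the regression targets are common first things to try.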

JHP

I noticed a couple of new replies appear on the recent poll a day or so ago, but since then the page won't load in any browser I've tried.

Is MathWorks going to spend 5 years starting in 2024 making Python the #1 supported language?

I'm not sure whether this information is authentic, but I am looking forward to a high level of integration with Python.

Reference:

This is not a question; it is my attempt at complying with the request for thumbs-up/down voting. I vote thumbs up for having AI.

I am not sure whether specific AI errors should be reported. Other messages I just read here, and the AI Chat itself, clearly state that errors abound.

My AI request was: "Plot 300 points of field 2"

AI Chat gave me, in part:

```matlab
data = thingSpeakRead(channelID, 'Fields', 2, 'NumPoints', 300, 'ReadKey', readAPIKey);

% Extract the field values
field1Values = data.Field1;

% Plot the data
plot(field1Values);
```

The AI code failed with the error "Dot indexing is not supported for variables of this type." (By default, thingSpeakRead returns a numeric matrix rather than a table, so data.Field1 does not exist.)

So I corrected the code as follows to get the correct plot:

```matlab
data = thingSpeakRead(channelID, 'Fields', 2, 'NumPoints', 300, 'ReadKey', readAPIKey);

% Extract the field values
%field1Values = data.Field1;

% Plot the data
plot(data);
```

I see great promise in AI Chat.

Opie

Quick answer: add set(hS,'Color',[0 0.4470 0.7410]) at line 329 of corrplot (R2023b).

Explanation: corrplot uses the functions plotmatrix and lsline. Inside lsline, get(hh(k),'Color') is called in a for loop for each line and scatter object in the axes, and corrplot calls lsline for every axes, which is slow. However, if you first set the color to any given value, an internal optimization makes these calls much faster. I chose [0 0.4470 0.7410] because it is the default color for plotmatrix and corrplot, so this setting doesn't change the behavior of corrplot.

Suggestion for a better solution: add the line set(hS,'Color',[0 0.4470 0.7410]) to the function plotmatrix. This would speed up not only corrplot but also any other code that combines plotmatrix with get calls, like this:

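```matlab
A = randn(100, 3);   % example data; any numeric matrix works
h = plotmatrix(A);

% set(h,'Color',[0 0.4470 0.7410])   % uncomment to apply the speed-up

for k = 1:numel(h)
    get(h(k),'Color');
end
```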

Write a MATLAB script that prints the odd numbers from 1 through 20 in reverse order.

I cannot figure out how to do this correctly. Please help.
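A colon range with a negative step does this directly: start at the largest odd number not exceeding 20 (that is, 19), step by -2, and stop at 1. A minimal script, one of several possible approaches:

```matlab
% Odd numbers from 1 through 20, largest first
oddDesc = 19:-2:1;

% Print one number per line
fprintf('%d\n', oddDesc);
```

An equivalent alternative is fliplr(1:2:20), which builds the ascending odd numbers first and then reverses them.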

Hello, all!

This is my first post after just joining this discussion, so please forgive me and provide kind assistance if I have posted to the wrong subsection!

I am very interested in learning SQL Server, and right now I am getting help from various platforms such as Coursera (https://www.coursera.org/) and Udemy (https://www.udemy.com/).

I am also unsure whether it is a good option to learn from platforms like these or whether I should take a SQL Server online training course. I have searched for answers to my questions on the platforms above, which helped only to some extent because the answers did not come directly from an expert or trainer.

Hoping to get a quick response.

Thank you in advance.

Hello, I am a student working on a neural network for a line-follower car. Could you recommend a tutorial for implementing it in Simulink?

I think it would be a really great feature to be able to add an Alpha property to the basic "Line" class in MATLAB plots. I know that I have previously had to resort to using Patch to be able to plot semitransparent lines, but there are also so many other functions that rely on the "Line" class.
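For reference, the patch workaround mentioned above is commonly written like this: appending a NaN vertex keeps the patch from closing back to its first point, so it renders as an open polyline, and patch objects (unlike Line objects) have a documented EdgeAlpha property. The data and styling values are placeholders.

```matlab
x = linspace(0, 4*pi, 200);   % placeholder data
y = sin(x);

figure;
% The trailing NaN stops the patch from closing into a polygon;
% FaceColor 'none' leaves only the edge, whose transparency is EdgeAlpha.
hp = patch('XData', [x(:); NaN], 'YData', [y(:); NaN], ...
    'FaceColor', 'none', ...
    'EdgeColor', [0 0.4470 0.7410], ...
    'EdgeAlpha', 0.3, ...
    'LineWidth', 3);
```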

For example, to make a grouped scatter plot from a table, you can use ScatterHistogram or gscatter, but because gscatter uses the Line class, you can't adjust the marker transparency. So if you don't want the histograms, you are stuck separating the groups manually and calling scatter with hold on.
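A minimal sketch of that manual workaround, assuming a table with numeric X and Y columns and a categorical Group column (all names and data hypothetical): loop over the groups and give each scatter object its own MarkerFaceAlpha and MarkerEdgeAlpha.

```matlab
% Hypothetical example data: two numeric columns and a grouping column
tbl = table(randn(300, 1), randn(300, 1), categorical(randi(3, 300, 1)), ...
    'VariableNames', {'X', 'Y', 'Group'});

groups = categories(tbl.Group);
figure; hold on
hs = gobjects(numel(groups), 1);
for g = 1:numel(groups)
    idx = tbl.Group == groups{g};          % rows belonging to this group
    hs(g) = scatter(tbl.X(idx), tbl.Y(idx), 20, 'filled', ...
        'MarkerFaceAlpha', 0.3, 'MarkerEdgeAlpha', 0.3, ...
        'DisplayName', groups{g});
end
hold off
legend show
```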

I saw this post on Answers.

I was impressed at the capability of the AI, as I have been at other times when I posed a question to it, at least some of the time. So much so that I wondered...

What if the AI were automatically applied to EVERY question on Answers? Would that be a good or a bad thing? For example, suppose the AI automatically offered an answer to every question as soon as it was posted. Of course, users would still be allowed to post their own, possibly better answers. But would it tend to discourage individuals from answering questions?

Perhaps just as bad, would it turn Answers into a homework-solving forum? If every homework question got a possibly pretty good, automatically generated AI solution, then every student would just post all of their homework questions, and the forum would quickly become overwhelmed.

I suppose one idea could be to have the AI post an answer to every unanswered question that is at least one month old. Then students would gain nothing by posting their homework.

Hi Guys

Posting this based on a thought I had, so I don't really have any code; however, I would like to know whether the thought process is correct and/or reasonably accurate.

Consider a simple spring-mass system in which the spring can only be compressed: when the spring would be in tension, the mass should move without the spring affecting it, so the mass is simply thrown vertically upward.

The idea I came up with for such a system is to have two sets of differential equations: one representing the spring-mass system and another representing the mass moving without the effects of the spring.

Please refer to the basic outline of the code I am proposing below. I believe it may produce reasonably decent results. The code checks whether the system is in tension; if it is, it takes the last values from the spring-mass differential equation and uses them as initial conditions for the differential equation of the mass moving without the spring. The same process also works in reverse. The error that would exist is that the initial conditions applied to the system would include effects of the spring. Would there be a better way to code such behavior?

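```matlab
function xp = statespace(t, x, f, c, k, m)
% State: x(1) = displacement (positive = spring in tension), x(2) = velocity.
% f is the applied force: a constant or a function handle f(t) (assumption).
% One ODE function with two regimes: the solver carries the state across
% the switch, so no manual re-initialization is needed.
if isa(f, 'function_handle')
    F = f(t);
else
    F = f;
end

if k*x(1) > 0
    % Tension: the spring cannot pull, so the mass moves under F only
    xp = [x(2); F/m];
else
    % Compression: spring-mass system (damper assumed to act with the spring)
    xp = [x(2); (F - c*x(2) - k*x(1))/m];
end
end
```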
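One alternative that addresses the initial-condition concern is to let the ODE solver locate the exact instant where x(1) crosses zero with an event function, stop there, and restart the other model from that state, so each model is only ever integrated inside its own regime. This sketch assumes a vertical mass resting on top of the spring with gravity, x(1) measured upward from the spring's free length (x(1) > 0 means the spring would be in tension), hypothetical parameter values, and no applied force f.

```matlab
% Hypothetical parameters (SI units)
m = 1; k = 100; c = 0.5; g = 9.81;
tEnd = 3;
x0 = [-0.5; 0];   % start compressed, at rest; x(1) < 0 means compression

springODE = @(t, x) [x(2); (-k*x(1) - c*x(2))/m - g];  % mass on the spring
freeODE   = @(t, x) [x(2); -g];                         % mass in free flight

baseOpts = odeset('RelTol', 1e-8, 'AbsTol', 1e-10);
T = []; X = [];
t0 = 0; xNow = x0;
inContact = x0(1) <= 0;

for phase = 1:100                 % guard against an endless loop
    if inContact
        rhs = springODE;
        dirn = 1;                 % leave the spring when x(1) rises through 0
    else
        rhs = freeODE;
        dirn = -1;                % land on the spring when x(1) falls through 0
    end
    % Terminal event at x(1) = 0, triggered only in the chosen direction
    opts = odeset(baseOpts, 'Events', @(t, x) deal(x(1), 1, dirn));
    [t, xs, te] = ode45(rhs, [t0 tEnd], xNow, opts);
    T = [T; t];   %#ok<AGROW>
    X = [X; xs];  %#ok<AGROW>
    if isempty(te) || t(end) >= tEnd   % no further switch before tEnd
        break
    end
    % The final state of this phase is the initial condition of the next
    t0 = t(end);
    xNow = xs(end, :).';
    inContact = ~inContact;
end

plot(T, X(:, 1));
xlabel('Time (s)');
ylabel('Displacement x(1) (m)');
```

The direction filter matters: each phase restarts exactly at x(1) = 0, and restricting the event direction prevents the solver from immediately re-triggering on the crossing it just stopped at.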

Seeing a colleague make this mistake (one I've had to fix multiple times in others' work too) makes me want to ask the community: would you like the awgn() function/blocks to offer an option to set the SNR over the bandwidth of the signal? Your typical flow is something like this:

- Create a signal, usually at some nominal upsampling factor (e.g., 4) so that it's nicely oversampled, especially if you're using an RRC or similar pulse-shaping filter.

- Potentially add a frequency offset (which might require an even higher sample rate).

- Add an AWGN channel model for a desired SNR.

- Put this into your detector/receiver model

The problem is that when someone says, "I'm detecting XYZ at foo SNR," that figure should not magically improve as the oversampling factor increases. The problem isn't that awgn() generates white noise; that's what it's supposed to do, and a typical receiver does have noise across the entire band. The problem is that SNR is most properly defined as the signal power divided by the product of the noise power spectral density and the signal's noise-equivalent bandwidth (NEBW), whereas awgn() sets the SNR against the noise power over the full sampled bandwidth. I looked, and there's no handy function for computing the NEBW of an input signal (there's only one for assessing analysis windows). In practice it can get a bit tricky, but the occupied bandwidth or the half-power bandwidth (HPBW) is often close enough to the NEBW; we're usually not haggling over hundredths of a dB. So, in my not-so-humble opinion, the "measured" option of awgn() should offer bandwidth matching, or the help page should at least document this behavior better. All too often I see errors of 3 to 6 dB (or worse) because people aren't taking the signal's bandwidth into account.
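A sketch of the bandwidth correction under stated assumptions: complex baseband, white noise spread over the full sample rate fs, and a signal whose noise-equivalent bandwidth is B. Noise added for a given full-band SNR puts only a fraction B/fs of its power inside the signal band, so the in-band SNR comes out 10*log10(fs/B) dB higher; to hit a target in-band SNR, subtract that amount first. For an RRC-shaped signal at sps samples per symbol, taking B as the symbol rate gives a correction of 10*log10(sps). The noise is generated by hand here so the check runs without Communications Toolbox; with the toolbox, awgn(x, snrFullBand_dB, 'measured') plays the same role. The brick-wall test signal and all parameter values are hypothetical.

```matlab
% Assumptions: complex baseband, symbol rate normalized to 1, NEBW B = 1
sps = 4;                  % oversampling factor (samples per symbol)
fs  = sps;                % sample rate in symbol-rate units
B   = 1;                  % assumed noise-equivalent bandwidth of the signal
snrInBand_dB = 10;        % the SNR we actually want to quote

% Full-band SNR to request so that the in-band SNR equals the target
snrFullBand_dB = snrInBand_dB - 10*log10(fs/B);

% Band-limited test signal: random spectrum confined to |f| < B/2
N = 2^16;
f = (0:N-1).' * fs / N;
f(f >= fs/2) = f(f >= fs/2) - fs;          % map bins to [-fs/2, fs/2)
inBand = abs(f) < B/2;
S = (randn(N, 1) + 1i*randn(N, 1)) .* inBand;
x = ifft(S);

% White complex Gaussian noise at the full-band SNR
% (with Communications Toolbox: y = awgn(x, snrFullBand_dB, 'measured'))
Ps = mean(abs(x).^2);
Pn = Ps / 10^(snrFullBand_dB/10);
noise = sqrt(Pn/2) * (randn(N, 1) + 1i*randn(N, 1));
y = x + noise;

% Check: measure only the noise power that falls inside the signal band
NoiseSpec = fft(noise);
PnInBand = sum(abs(NoiseSpec(inBand)).^2) / N^2;   % Parseval's theorem
snrMeasured_dB = 10*log10(Ps / PnInBand);
fprintf('Full-band SNR %.2f dB -> in-band SNR %.2f dB\n', ...
    snrFullBand_dB, snrMeasured_dB);
```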

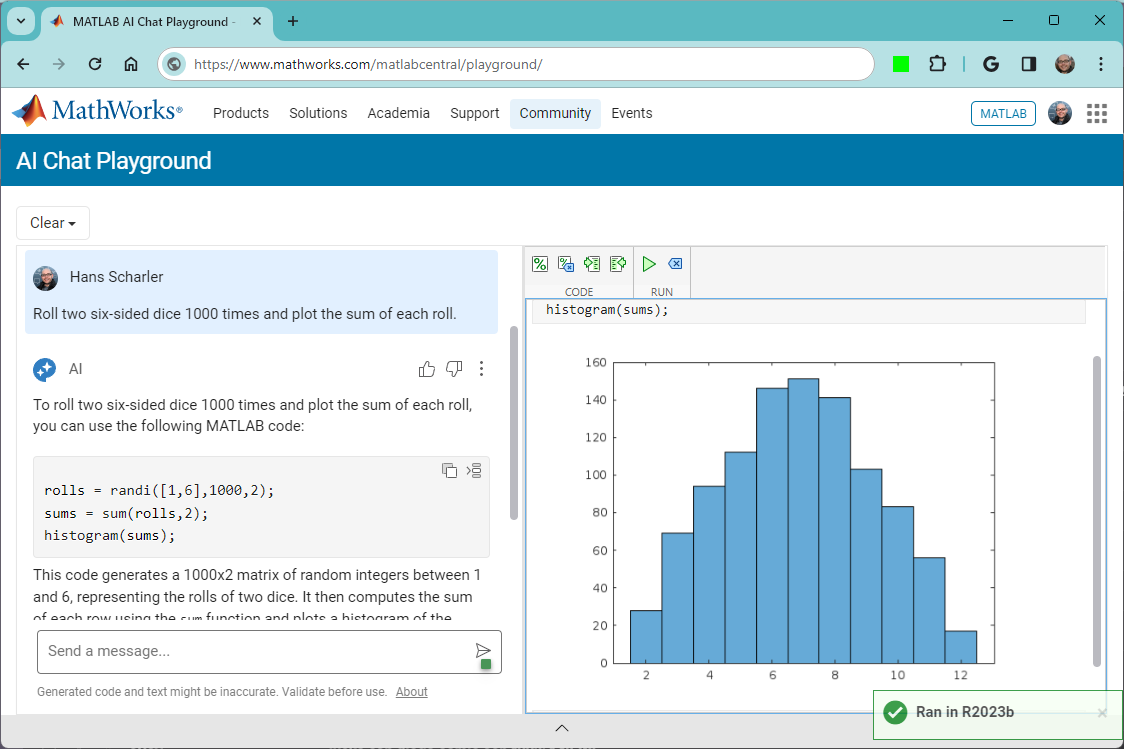

The MATLAB AI Chat Playground is open to everyone!

Check it out here on the community: https://www.mathworks.com/matlabcentral/playground

Good afternoon, everyone. My name is Dundu Lawan Haruna. I'm a final-year student in the Department of Computer Engineering at ABU Zaria, Nigeria, and I want to base my final-year project on computer vision. Project topic: designing eyeglasses that help people with visual impairment navigate their environment efficiently. That's why I need your support; all advice is very welcome. Thank you for your support.

Share your fun photos in the comments!

I know the latest version of MATLAB, R2023b, already has this feature, but it should be added to R2023a as well because of its simplicity and convenience.

Basically, I want to be able to name each bar in a basic bar graph:

```matlab
y = [100 99 100 200 200 300 500 800 1000];
x = ["0-4" "5-17" "18-29" "30-39" "40-49" "50-64" "65-74" "75-84" "85+"];
bar(x, y)
```

However, in R2023a, this isn't a feature. I think it should be added because it helps to present data and ideas more clearly and professionally, which is the purpose of a graph to begin with.
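Until then, a workaround that I believe works in releases before R2023b is to convert the labels to a categorical array and fix the category order with reordercats. Without reordercats, the categories would be sorted alphabetically, which would put "18-29" before "5-17".

```matlab
y = [100 99 100 200 200 300 500 800 1000];
x = ["0-4" "5-17" "18-29" "30-39" "40-49" "50-64" "65-74" "75-84" "85+"];

% categorical sorts its categories alphabetically; reordercats restores
% the listed order so the age groups appear left to right as written
xc = categorical(cellstr(x));
xc = reordercats(xc, cellstr(x));
bar(xc, y)

% Alternative: plot against indices and relabel the ticks
% bar(y); xticks(1:numel(y)); xticklabels(cellstr(x))
```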