nssTrainingRMSProp

Description

RMSProp options set object to train an idNeuralStateSpace network using nlssest.

Creation

Create an nssTrainingRMSProp object by calling nssTrainingOptions with "rmsprop" as the input argument.

Properties

UpdateMethod: Solver used to update network parameters, returned as a string. This property is read-only. For an nssTrainingRMSProp object, the value is "RMSProp".

Use nssTrainingOptions("adam"), nssTrainingOptions("sgdm"), or nssTrainingOptions("lbfgs") to return an options set object for the Adam, SGDM, or L-BFGS solvers, respectively. For more information on these algorithms, see the Algorithms section of trainingOptions (Deep Learning Toolbox).

SquaredGradientDecayFactor: Decay rate of the squared gradient moving average for the RMSProp solver, specified as a nonnegative scalar less than 1.

Typical values of the decay rate are 0.9, 0.99, and 0.999, corresponding to averaging lengths of 10, 100, and 1000 parameter updates, respectively.

For more information, see Root Mean Square Propagation (Deep Learning Toolbox).
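The relation between decay rate and averaging length, and the effect of the decay rate on a single update, can be sketched in plain MATLAB. This is a minimal illustration of the standard RMSProp update, not the internal implementation used by nlssest; the variable names, the gradient values, and the offset epsilon are assumptions made for the example.

```matlab
% Averaging length implied by each decay rate: roughly 1/(1 - decay).
decay = [0.9 0.99 0.999];
avgLength = 1 ./ (1 - decay);    % approximately [10 100 1000]

% One RMSProp step on a hypothetical parameter vector (illustrative values).
theta     = [1; -2];       % parameters
g         = [0.5; -0.1];   % gradient of the loss with respect to theta
v         = zeros(2,1);    % moving average of the squared gradient
learnRate = 1e-3;          % same value as the LearnRate default shown in Examples
rho       = 0.9;           % plays the role of SquaredGradientDecayFactor
epsilon   = 1e-8;          % small offset to avoid division by zero (assumed)

v     = rho*v + (1 - rho)*g.^2;                       % update the moving average
theta = theta - learnRate * g ./ (sqrt(v) + epsilon); % scaled gradient step
```

A decay rate closer to 1 makes the moving average respond more slowly to new gradients, which smooths the per-parameter step size.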

LossFcn: Type of function used to calculate loss, specified as one of the following:

- "MeanAbsoluteError": uses the mean value of the absolute error.
- "MeanSquaredError": uses the mean value of the squared error.

PlotLossFcn: Option to plot the value of the loss function during training, specified as one of the following:

- true: plots the value of the loss function during training.
- false: does not plot the value of the loss function during training.

Lambda: Constant coefficient applied to the regularization term added to the loss function, specified as a positive scalar.

The loss function with the regularization term is given by:

where t is the time variable, N is the size of the batch, ε is the sum of the reconstruction loss and autoencoder loss, θ is a concatenated vector of weights and biases of the neural network, and λ is the regularization constant that you can tune.

For more information, see Regularized Estimates of Model Parameters.

LearnRate: Learning rate used for training, specified as a positive scalar. If the learning rate is too small, then training can take a long time. If the learning rate is too large, then training might reach a suboptimal result or diverge.

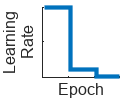

LearnRateSchedule: Learning rate schedule, specified as "none" or "piecewise".

| Learning Rate Schedule | Description | Plot |
|---|---|---|
| "none" | No learning rate schedule. This schedule keeps the learning rate constant. | |
| "piecewise" | Piecewise learning rate schedule. With the default LearnRateDropPeriod (10) and LearnRateDropFactor (0.1), this schedule reduces the learning rate by a factor of 10 every 10 epochs. | |

LearnRateDropPeriod: Number of epochs for dropping the learning rate, specified as a positive integer. This option is valid only when the LearnRateSchedule training option is "piecewise".

The software multiplies the global learning rate by the drop factor every time the specified number of epochs passes. Specify the drop factor using the LearnRateDropFactor training option.

LearnRateDropFactor: Factor for dropping the learning rate, specified as a scalar from 0 to 1. This option is valid only when the LearnRateSchedule training option is "piecewise".

LearnRateDropFactor is a multiplicative factor applied to the learning rate every time a certain number of epochs passes. Specify the number of epochs using the LearnRateDropPeriod training option.
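The piecewise schedule described above can be sketched in a few lines of plain MATLAB. The sketch assumes that the first drop takes effect at the start of the epoch following each completed period (epoch 11 for a period of 10); the source does not state the exact boundary, and the variable names are illustrative.

```matlab
% Piecewise learning rate schedule (illustrative sketch, not the nlssest internals).
initialLearnRate = 1e-3;   % plays the role of LearnRate
dropFactor       = 0.1;    % plays the role of LearnRateDropFactor
dropPeriod       = 10;     % plays the role of LearnRateDropPeriod

epochs = 1:30;
% Number of completed drop periods before each epoch (assumed boundary convention).
numDrops  = floor((epochs - 1) / dropPeriod);
learnRate = initialLearnRate * dropFactor .^ numDrops;

% Learning rate at the first epoch of each period.
disp(learnRate([1 11 21]))
```

Under these assumptions, the learning rate is 1e-3 for epochs 1 to 10, 1e-4 for epochs 11 to 20, and 1e-5 for epochs 21 to 30.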

MaxEpochs: Maximum number of epochs to use for training, specified as a positive integer. An epoch is one full pass of the training algorithm over the entire training set.

MiniBatchSize: Size of the mini-batch to use for each training iteration, specified as a positive integer. A mini-batch is a subset of the training set that is used to evaluate the gradient of the loss function and update the weights.

If the mini-batch size does not evenly divide the number of training samples, then nlssest discards the training data that does not fit into the final complete mini-batch of each epoch.
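The discarding rule is simple arithmetic, shown here as a short sketch. The sample count is a made-up value, and what counts as one training sample (for instance, one data frame) is an assumption not settled by this page.

```matlab
% Iterations per epoch and discarded samples for a given mini-batch size.
numSamples    = 10500;   % hypothetical number of training samples
miniBatchSize = 1000;    % same value as the MiniBatchSize default shown in Examples

iterationsPerEpoch = floor(numSamples / miniBatchSize);   % complete mini-batches only
numDiscarded       = mod(numSamples, miniBatchSize);      % samples left over each epoch

fprintf("%d iterations per epoch, %d samples discarded\n", ...
    iterationsPerEpoch, numDiscarded);
```

Choosing a mini-batch size that divides the number of training samples avoids discarding data.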

Beta: Coefficient applied to tune the reconstruction loss of an autoencoder, specified as a nonnegative scalar.

Reconstruction loss measures the difference between the original input (x) and its reconstruction (xr) after encoding and decoding. You calculate this loss as the L2 norm of (x - xr) divided by the batch size (N).
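As a rough numerical illustration of this definition, the following sketch computes the L2 norm of (x - xr) divided by N for a small made-up batch. Treating each row as one sample and stacking all entries into a single vector before taking the norm are assumptions of the sketch; the software might arrange the data differently.

```matlab
% Reconstruction loss sketch: L2 norm of (x - xr) divided by the batch size N.
x  = [1 2; 3 4; 5 6];    % hypothetical original inputs, one row per sample
xr = [1 2; 3 4; 5 8];    % hypothetical reconstructions after encoding and decoding

N = size(x, 1);                              % batch size
reconLoss = norm(x(:) - xr(:), 2) / N;       % only one entry differs, by 2

disp(reconLoss)
```

The training loss weights this quantity by Beta, so setting Beta to 0 removes the reconstruction term.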

WindowSize: Number of samples in each frame or batch when segmenting data for model training, specified as a positive integer.

Overlap: Number of samples in the overlap between successive frames when segmenting data for model training, specified as an integer or "auto". A negative integer indicates that certain data samples are skipped when creating the data frames.

The default value, "auto", implies that the size of the overlap is 0.
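One way to picture how a window size and an overlap segment a data record is the sketch below. It is only an illustration of the idea: the helper frameStarts is hypothetical, and dropping a trailing partial frame is an assumption, since this page does not say how nlssest treats leftover samples.

```matlab
% Start index of each frame for n samples, window size w, and overlap ov.
% Successive frames advance by (w - ov) samples; partial frames are dropped (assumed).
frameStarts = @(n, w, ov) 1:(w - ov):(n - w + 1);

numSamples = 20;
windowSize = 8;

startsOverlap = frameStarts(numSamples, windowSize, 2);    % frames share 2 samples
startsSkip    = frameStarts(numSamples, windowSize, -2);   % 2 samples skipped between frames

% List the sample indices covered by each frame in the overlapping case.
for s = startsOverlap
    fprintf("frame: samples %d to %d\n", s, s + windowSize - 1);
end
```

With an overlap of 2, the frames cover samples 1 to 8, 7 to 14, and 13 to 20. With an overlap of -2, the frames cover samples 1 to 8 and 11 to 18, so samples 9 and 10 are skipped.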

ODESolverOptions: ODE solver options to integrate continuous-time neural state-space systems, specified as an nssDLODE45 object.

Use dot notation to access properties such as the following:

- Solver: Solver type, set as "dlode45". This is a read-only property.
- InitialStepSize: Initial step size, specified as a positive scalar. If you do not specify an initial step size, then the solver bases the initial step size on the slope of the solution at the initial time point.
- MaxStepSize: Maximum step size, specified as a positive scalar. It is an upper bound on the size of any step taken by the solver. The default is one tenth of the difference between final and initial time.
- AbsoluteTolerance: Absolute tolerance, specified as a positive scalar. It is the largest allowable absolute error. Intuitively, when the solution approaches 0, AbsoluteTolerance is the threshold below which you do not worry about the accuracy of the solution since it is effectively 0.
- RelativeTolerance: Relative tolerance, specified as a positive scalar. This tolerance measures the error relative to the magnitude of each solution component. Intuitively, it controls the number of significant digits in a solution (except when it is smaller than the absolute tolerance).

For more information, see odeset.
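For instance, the nested solver options can be adjusted with dot notation as in this sketch. The property names come from the list above; the numeric values are arbitrary illustrations rather than recommendations, and the code requires System Identification Toolbox.

```matlab
% Create the RMSProp options set and adjust the nested ODE solver options.
opts = nssTrainingOptions("rmsprop");

opts.ODESolverOptions.RelativeTolerance = 1e-4;   % illustrative value
opts.ODESolverOptions.AbsoluteTolerance = 1e-6;   % illustrative value
opts.ODESolverOptions.MaxStepSize       = 0.1;    % illustrative value

% Solver is read-only; display it to confirm the solver type.
disp(opts.ODESolverOptions.Solver)
```

Tighter tolerances generally make integration more accurate but slower, so the values are worth tuning against training time.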

InputInterSample: Input interpolation method, specified as one of the following:

- 'zoh': uses zero-order hold interpolation method.
- 'foh': uses first-order hold interpolation method.
- 'cubic': uses cubic interpolation method.
- 'makima': uses modified Akima interpolation method.
- 'pchip': uses shape-preserving piecewise cubic interpolation method.
- 'spline': uses spline interpolation method.

This is the method used to interpolate the input when integrating continuous-time neural state-space systems. For more information, see interpolation methods in interp1.
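The difference between these methods can be seen by interpolating a short made-up input signal with interp1. Mapping 'zoh' to the interp1 method 'previous' and 'foh' to 'linear' is an assumption made for this illustration; the page does not state how the software implements the two hold methods.

```matlab
% Interpolate a sampled input at intermediate time points.
t  = 0:1:4;            % sample times
u  = [0 1 0 2 1];      % hypothetical input samples
tq = 0:0.5:4;          % query times between samples

uZoh   = interp1(t, u, tq, 'previous');   % zero-order hold: keep the last sample
uFoh   = interp1(t, u, tq, 'linear');     % first-order hold: connect samples linearly
uPchip = interp1(t, u, tq, 'pchip');      % shape-preserving piecewise cubic

% Compare the three results at t = 0.5 (second query point).
disp([uZoh(2) uFoh(2) uPchip(2)])
```

At t = 0.5, the zero-order hold returns the sample at t = 0, while the first-order hold returns the midpoint between the samples at t = 0 and t = 1.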

Object Functions

Examples

Use nssTrainingOptions to return an options set object to train an idNeuralStateSpace system.

rmspropOpts = nssTrainingOptions("rmsprop")

rmspropOpts =

nssTrainingRMSProp with properties:

UpdateMethod: "RMSProp"

LearnRate: 1.0000e-03

SquaredGradientDecayFactor: 0.9000

MaxEpochs: 100

MiniBatchSize: 1000

LearnRateSchedule: "none"

LearnRateDropFactor: 0.1000

LearnRateDropPeriod: 10

Lambda: 0

Beta: 0

LossFcn: "MeanAbsoluteError"

PlotLossFcn: 1

ODESolverOptions: [1×1 idoptions.nssDLODE45]

InputInterSample: 'foh'

WindowSize: 2.1475e+09

Overlap: "auto"

Use dot notation to access the object properties.

rmspropOpts.PlotLossFcn = false;

You can use rmspropOpts as an input argument to nlssest to specify the training options for the state or the non-trivial output network of an idNeuralStateSpace object.
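Several properties can be set the same way before training. The following sketch switches to a piecewise learning rate schedule and a mean squared error loss; the property names are those shown in the display above, the values are illustrative only, and the commented nlssest call uses hypothetical variables (data, nss) that are not defined on this page.

```matlab
% Configure an RMSProp options set with dot notation (illustrative values).
rmspropOpts = nssTrainingOptions("rmsprop");

rmspropOpts.LearnRate           = 0.005;
rmspropOpts.LearnRateSchedule   = "piecewise";
rmspropOpts.LearnRateDropPeriod = 20;      % drop the rate every 20 epochs
rmspropOpts.LearnRateDropFactor = 0.5;     % halve the rate at each drop
rmspropOpts.MaxEpochs           = 200;
rmspropOpts.LossFcn             = "MeanSquaredError";

% Assumed usage with hypothetical estimation data and model:
% nssEstimated = nlssest(data, nss, rmspropOpts);
```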

Version History

Introduced in R2024b

See Also

Objects

Functions

nssTrainingOptions | nlssest | odeset | generateMATLABFunction | idNeuralStateSpace/evaluate | idNeuralStateSpace/linearize | sim | createMLPNetwork | setNetwork

Blocks
