lassoblm

Bayesian linear regression model with lasso regularization

Description

The Bayesian linear regression model object lassoblm specifies the joint prior distribution of the regression coefficients and the disturbance variance (β, σ²) for implementing Bayesian lasso regression [1]. For j = 1,…,NumPredictors, the conditional prior distribution of βj|σ² is the Laplace (double exponential) distribution with a mean of 0 and scale σ²/λ, where λ is the lasso regularization, or shrinkage, parameter. The prior distribution of σ² is inverse gamma with shape A and scale B.
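As a sketch of what this prior looks like, the standard Laplace density with mean 0 and scale b is written out below, with b set to the scale stated above. The symbol b is introduced here only for illustration.

```latex
p(\beta_j \mid \sigma^2) = \frac{1}{2b}\exp\!\left(-\frac{|\beta_j|}{b}\right),
\qquad b = \frac{\sigma^2}{\lambda}, \quad j = 1,\ldots,\text{NumPredictors}.
```

Under this form, a larger λ gives a smaller scale b, which concentrates the prior mass of βj near 0 and therefore shrinks the coefficient more strongly.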

The data likelihood is $\prod_{t=1}^{T} \phi(y_t; x_t\beta, \sigma^2)$, where T is the number of observations and $\phi(y_t; x_t\beta, \sigma^2)$ is the Gaussian probability density evaluated at $y_t$ with mean $x_t\beta$ and variance $\sigma^2$. The resulting posterior distribution is not analytically tractable. For details on the posterior distribution, see Analytically Tractable Posteriors.

In general, when you create a Bayesian linear regression model object, it specifies the joint prior distribution and characteristics of the linear regression model only. That is, the model object is a template intended for further use. Specifically, to incorporate data into the model for posterior distribution analysis and feature selection, pass the model object and data to the appropriate object function.
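The template-then-data workflow might look like the following minimal sketch. The data are simulated purely for illustration; the sample size, coefficient values, and noise level are assumptions and do not come from this page.

```matlab
% Simulated data for illustration only (not from the documentation).
rng(1);                                   % for reproducibility
X = randn(100,3);                         % 100 observations, 3 predictors
y = 1 + X*[2; 0; -1] + 0.5*randn(100,1);  % second predictor is irrelevant

% The prior model is only a template: it holds no data.
PriorMdl = lassoblm(3);

% Passing the template and the data to an object function, here estimate,
% incorporates the data and returns a posterior model.
PosteriorMdl = estimate(PriorMdl,X,y);
```

PriorMdl itself is not modified by the call; the posterior information is carried by the returned object.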

Creation

Description

PriorMdl = lassoblm(NumPredictors) creates a Bayesian linear regression model object (PriorMdl) composed of NumPredictors predictors and an intercept, and sets the NumPredictors property. The joint prior distribution of (β, σ²) is appropriate for implementing Bayesian lasso regression [1]. PriorMdl is a template that defines the prior distributions and specifies the values of the lasso regularization parameter λ and the dimensionality of β.

PriorMdl = lassoblm(NumPredictors,Name,Value) sets properties (except NumPredictors) using name-value pair arguments. Enclose each property name in quotes. For example, lassoblm(3,'Lambda',0.5) specifies a shrinkage of 0.5 for the three coefficients (not the intercept).
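The two creation syntaxes can be sketched as follows. The hyperparameter values passed for A and B are arbitrary choices for illustration, not recommendations.

```matlab
% Default prior for a model with three predictors and an intercept.
Mdl1 = lassoblm(3);

% Same model with a shrinkage of 0.5 for the three coefficients.
Mdl2 = lassoblm(3,'Lambda',0.5);

% Also set the inverse gamma hyperparameters (arbitrary illustrative values).
Mdl3 = lassoblm(3,'Lambda',0.5,'A',3,'B',1);

% Inspect the stored shrinkage values.
disp(Mdl2.Lambda)
```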

Properties

Object Functions

estimate | Perform predictor variable selection for Bayesian linear regression models |

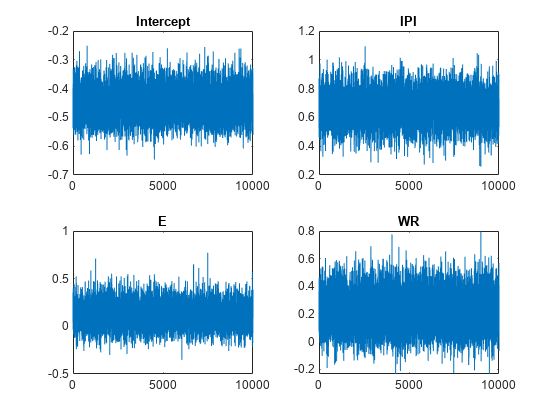

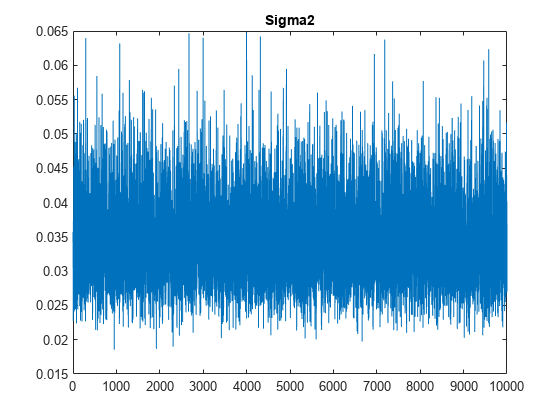

simulate | Simulate regression coefficients and disturbance variance of Bayesian linear regression model |

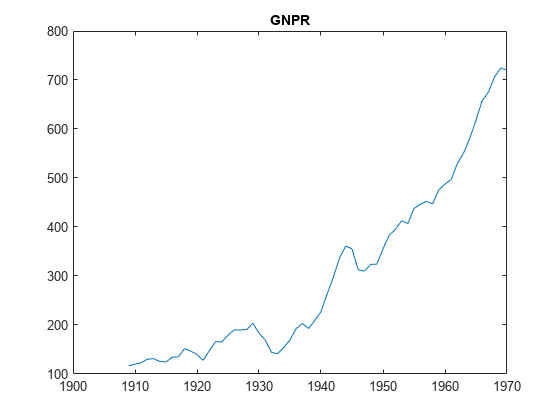

forecast | Forecast responses of Bayesian linear regression model |

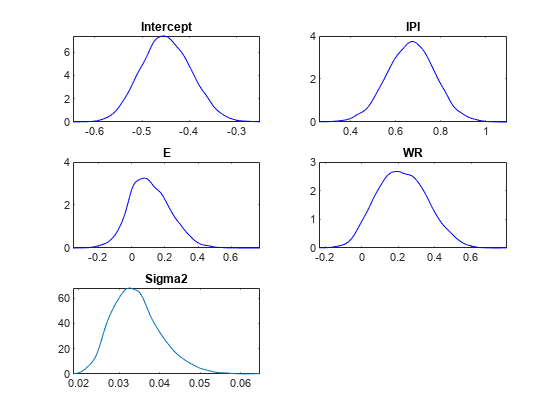

plot | Visualize prior and posterior densities of Bayesian linear regression model parameters |

summarize | Distribution summary statistics of Bayesian linear regression model for predictor variable selection |
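A short sketch of how these object functions might be combined is shown below. The data are simulated for illustration, and the train/new split, the shrinkage value, and the number of draws are assumptions.

```matlab
% Simulated data for illustration only.
rng(1);
X = randn(110,3);
y = 1 + X*[2; 0; -1] + 0.5*randn(110,1);
XTrain = X(1:100,:);   yTrain = y(1:100);
XNew   = X(101:110,:);                    % predictor data to forecast from

PriorMdl = lassoblm(3,'Lambda',0.5);

% estimate: incorporate the data and return a posterior model.
PosteriorMdl = estimate(PriorMdl,XTrain,yTrain,'Display',false);

% summarize: display summary statistics of the posterior.
summarize(PosteriorMdl);

% simulate: draw coefficients and disturbance variance given the data.
[BetaSim,sigma2Sim] = simulate(PriorMdl,XTrain,yTrain,'NumDraws',500);

% forecast: predict responses for the new predictor data.
yF = forecast(PosteriorMdl,XNew);

% plot: visualize the posterior densities of the parameters.
plot(PosteriorMdl);
```

Each row of BetaSim corresponds to one coefficient (the intercept plus the three predictors), and each column to one draw.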

Examples

More About

Tips

Lambda is a tuning parameter. Therefore, perform Bayesian lasso regression using a grid of shrinkage values, and choose the model that best balances a fit criterion and model complexity.

For estimation, simulation, and forecasting, MATLAB® does not standardize predictor data. If the variables in the predictor data have different scales, then specify a shrinkage parameter for each predictor by supplying a numeric vector for Lambda.
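One way to search a grid of shrinkage values is sketched below. It uses the forecast mean squared error on held-out data as the fit criterion, which is an assumption made for this example; the grid values and the simulated data are also illustrative only.

```matlab
% Simulated data for illustration only.
rng(1);
n = 200;  p = 3;
X = randn(n,p);
y = 1 + X*[2; 0; -1] + 0.5*randn(n,1);
idxTrain = 1:150;                          % estimation sample
idxTest  = 151:200;                        % holdout sample

lambda = [0.01 0.1 1 10 100];              % hypothetical grid of shrinkage values
fmse = zeros(numel(lambda),1);             % holdout forecast MSE per grid point

for j = 1:numel(lambda)
    PriorMdl = lassoblm(p,'Lambda',lambda(j));
    PosteriorMdl = estimate(PriorMdl,X(idxTrain,:),y(idxTrain),'Display',false);
    yF = forecast(PosteriorMdl,X(idxTest,:));
    fmse(j) = mean((y(idxTest) - yF).^2);
end

% Pick the shrinkage value with the smallest holdout forecast MSE.
[~,best] = min(fmse);
bestLambda = lambda(best);
```

The forecast error alone does not account for model complexity, so in practice it helps to also check which coefficients each candidate model retains, for example with summarize.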

Alternative Functionality

The bayeslm function can create any supported prior model object for Bayesian linear regression.
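For instance, a lasso prior model might be created through bayeslm as in this brief sketch; the shrinkage value is an arbitrary choice for illustration.

```matlab
% Create a Bayesian lasso prior model for three predictors using bayeslm.
PriorMdl = bayeslm(3,'ModelType','lasso','Lambda',0.5);

% Confirm the type of the returned object.
disp(class(PriorMdl))
```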

References

[1] Park, T., and G. Casella. "The Bayesian Lasso." Journal of the American Statistical Association. Vol. 103, No. 482, 2008, pp. 681–686.

Version History

Introduced in R2018b