Test Learner Solutions

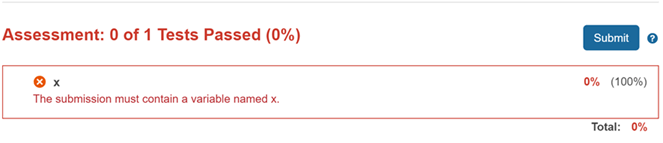

Use assessments to determine whether the learner’s solution meets criteria you have set. When you write tests, think about the errors that learners often make, and include assessments for these errors. The following examples illustrate some of the more common conditions to consider in creating assessments. The examples include suggestions for the messages to provide to learners as output after the tests run.

Assessment Methods

When you create an assessment:

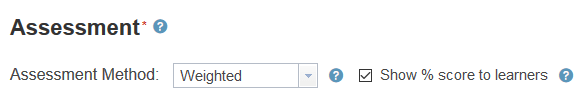

You can set the assessment method to Correct/Incorrect or Weighted. The assessment method determines how the points assigned to the problem are awarded.

You can indicate that an assessment is a pretest.

Choose the test type from these options:

Compare a variable in the learner solution to one in the reference solution.

Determine whether a function or keyword is present.

Determine whether a function or keyword is absent.

Create your own assessment using MATLAB® code.

You can convert any of these options (except for MATLAB code) to MATLAB code.

See Write Assessments for Function-Type Learner Solutions and Write Assessments for Script-Type Learner Solutions for details on these types.
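As a rough illustration of what these test types look like when written as MATLAB code for a script-type problem, consider the sketch below. It uses the MATLAB Grader assessment functions `assessVariableEqual`, `assessFunctionPresence`, and `assessFunctionAbsence`. The variable name `x`, the choice of `mean` and `for`, and the feedback messages are hypothetical. The code runs only inside MATLAB Grader, where `referenceVariables` is available.

```matlab
% Sketch only: x, mean, for, and the feedback text are hypothetical choices.

% Compare the learner's variable x with x from the reference solution.
assessVariableEqual('x', referenceVariables.x, ...
    'Feedback', 'Check how x is computed. Are the units what the problem asks for?');

% Require that the learner solution uses a particular function.
assessFunctionPresence('mean', ...
    'Feedback', 'Use the mean function to compute the average.');

% Require that the learner solution avoids a particular keyword.
assessFunctionAbsence('for', ...
    'Feedback', 'Solve this problem without a for loop.');
```

In practice you would likely place each check in its own assessment so that learners see separate results and feedback for each condition.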

Correct/Incorrect Assessments

If you select the Correct/Incorrect method, the problem is treated as pass/fail. Assessment conditions defined as Correct/Incorrect return 1 if all tests pass and 0 if any test fails. If the results are all correct, the maximum points possible are awarded. If any result is marked incorrect, no points are awarded.

MATLAB Grader™ indicates when solutions pass or fail by marking the solution in green or red, respectively.

Weighted Assessments

Select the Weighted method to award partial credit. You assign each assessment a percentage of the total points possible. You can modify the percentage by changing the point value (weight) assigned to each assessment. The points awarded are determined by summing the percentages of the assessment results marked correct and multiplying by the maximum points possible.

To indicate that assessments are weighted, select Weighted as the Assessment Method.

When you create multiple assessments, you use points to assign the weight you want each assessment to have.

When you select Weighted, you can also choose to show the percentages to your learners.
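The following sketch works through the scoring arithmetic for both methods. The point values and pass/fail results are hypothetical, and the sketch assumes that each assessment's percentage is its weight divided by the sum of all weights.

```matlab
% Hypothetical problem: 10 points maximum, three assessments.
maxPoints = 10;
weights   = [2 1 1];              % point values (weights) set by the instructor
passed    = [true false true];    % which assessments were marked correct

% Assumption: percentage of each assessment = weight / sum of weights.
percentages = weights / sum(weights);

% Weighted: sum the percentages of the passed assessments,
% then multiply by the maximum points possible.
weightedScore = sum(percentages(passed)) * maxPoints;

% Correct/Incorrect: all points if every assessment passes, otherwise none.
passFailScore = all(passed) * maxPoints;

fprintf('Weighted: %.1f of %d points\n', weightedScore, maxPoints);
fprintf('Correct/Incorrect: %d of %d points\n', passFailScore, maxPoints);
```

With these made-up numbers, the learner earns 7.5 points under Weighted and 0 points under Correct/Incorrect, because one assessment failed.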

Feedback on Incorrect Solutions

Show Additional Feedback

You can provide additional feedback to the learner on assessment failure. This feedback can be written in rich text format and include hypertext links, images, and math equations.

The following image shows additional feedback that contains bullets, mathematical equations, formatted code, and a picture.

This image is from the example Predator-Prey model: lynxes and snow hares, which you can find in the MATLAB Grader Problem Catalog.

Show Feedback for Initial Error Only (Script-Type Problems)

In a script-type problem, an initial error may cause subsequent errors. You may want to encourage the learner to focus first on the initial error.

When you select the option Only Show Feedback for Initial Error, detailed feedback is shown for the initial error, but is hidden by default for subsequent errors. The learner can display this additional feedback by clicking Show Feedback.

Pretests

Pretests are assessments that learners can run without submitting their solution for grading. Use pretests to give learners a way to determine if their solution is on the right path before they submit their solutions.

Note that when learners submit their solution, pretests are also run and are treated the same as regular assessments, and therefore contribute to the final grade.

Pretests differ from regular assessments in the following ways:

When a learner runs pretests, the pretest results are not recorded in the gradebook.

Running pretests does not count against a submission limit.

Learners can view the assessment code in a pretest, as well as the output generated by that code (for MATLAB code test types only).

Use pretests to guide learners on problems where multiple approaches are correct or when a submission limit applies. For example, consider "Calculating voltage using Kirchhoff loops" in the MATLAB Grader Problem Catalog under Getting Started with MATLAB Grader. To solve this problem, learners must write a system of equations. There are multiple correct ways to do this, but only solutions that match the reference solution are marked correct. The instructor has therefore added one pretest for learners to check that their equations are in the expected order before submitting.

Time Limits for Submissions

MATLAB Grader enforces an execution time limit of 60 seconds. The clock starts when the learner clicks Run or Submit, and stops when the output or assessment results are displayed to the learner. If the time limit is reached, an error message is displayed stating that the server timed out. The factors that contribute to total execution time depend on whether the learner is running or submitting their solution, and on whether they are solving a script-type or a function-type problem.

The information in this topic explains how timing works for both script-type problems and function-type problems. For guidance on advising your learners, see Execution Time Limit.

To get an estimate of the time required to execute the reference solution, run it using MATLAB Online™, because its computational environment is the most similar to the one used by MATLAB Grader.
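One simple way to take such a measurement is with `tic` and `toc`, as in the sketch below. The workload between the two calls is a hypothetical stand-in; replace it with your own reference solution.

```matlab
% Start a timer, run the code to be measured, then read the elapsed time.
tStart = tic;

% Hypothetical stand-in for the reference solution.
A = rand(500);
x = A \ ones(500, 1);

elapsedSeconds = toc(tStart);
fprintf('Reference solution took about %.2f seconds\n', elapsedSeconds);
```

Run the measurement a few times, since a single timing can vary from run to run.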

Note

Learners might find that they can see the output of their code when they click Run Script/Function, but when they submit, they get the error message "The server timed out while running and assessing your solution." This error is due to the additional execution time needed to run the reference solution and all assessments.

Timing for Script-Type Problem Solutions

Script-type problems run in the following order:

| Run Script | Submit |
|---|---|
| Run learner solution one time. | Run reference solution, run learner solution, then run all assessments in sequential order. |

Consider the following examples:

Example #1: Low probability of exceeding execution time limit

| Code to Execute | Execution Time |
|---|---|
| Reference solution | approx. 20 seconds |
| Typical learner solution | approx. 20 seconds |
| Assessments (assume there are three for this problem) | approx. 1 second each (approx. 3 seconds total) |

Typical total execution time: approx. 45 seconds (includes network overhead)

A timeout error in this scenario could be caused by an error in the learner’s solution, inefficient code, or excessive output printing to the screen.

Example #2: High probability of exceeding execution time limit

| Code to Execute | Execution Time |
|---|---|
| Reference solution | approx. 25 seconds |
| Typical learner solution | approx. 25 seconds |
| Assessments (assume there are three for this problem) | approx. 1 second each (approx. 3 seconds total) |

Typical total execution time: approx. 65 seconds (includes network overhead)

In this scenario, the learner is able to run their solution, but likely encounters the execution time limit when submitting. It might be necessary to redesign or remove this problem.

Timing for Function-Type Problem Solutions

Function-type problem solutions run in the following order:

| Run Function | Submit |
|---|---|
| Run code in Code to call your function. | Run all assessments in sequential order. |

To test a function, it must be called. When a learner submits the solution to a function-type problem, only the assessments are run. Each assessment typically checks for correctness by calling the reference function and learner function with the same inputs and comparing the resulting outputs. Consequently, a single function-type problem might run the reference and learner functions multiple times.
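A typical assessment of this kind might look like the sketch below, written as MATLAB code for a function-type problem. The function name `myFunction` and the input value are hypothetical. The code runs only inside MATLAB Grader, where the reference solution is reachable through the `reference` prefix.

```matlab
% Sketch only: myFunction and the input value are hypothetical.
testInput = 5;

% Run the learner solution.
learnerOutput = myFunction(testInput);

% Run the reference solution with the same input.
referenceOutput = reference.myFunction(testInput);

% Compare the two outputs.
assessVariableEqual('learnerOutput', referenceOutput);
```

Each assessment written this way calls both functions once, which is why three assessments mean three runs of the reference function and three runs of the learner function.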

Consider the following examples:

Example #1: Low probability of exceeding execution time limit

In this example, each assessment evaluates the learner's function with a different input. The reference function and a typical learner solution both take approx. 5 seconds to run.

| Code to Execute | Execution Time |
|---|---|
| Assessments (assume there are three for this problem) | approx. 10 seconds each (approx. 30 seconds total) |

Typical total execution time: approx. 32 seconds (includes network overhead)

A timeout error in this scenario could be caused by an error in the learner’s solution, inefficient code, or excessive output printing to the screen.

Example #2: High probability of exceeding execution time limit

In this example, each assessment evaluates the learner's function with a different input. The reference function and a typical learner solution both take approx. 10 seconds to run.

| Code to Execute | Execution Time |
|---|---|
| Assessments (assume there are three for this problem) | approx. 20 seconds each (approx. 60 seconds total) |

Typical total execution time: approx. 62 seconds (includes network overhead)

In this scenario, the learner is able to run their solution, but likely encounters the execution time limit when submitting. It might be necessary to redesign or remove this problem.
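The arithmetic behind the two function-type examples can be sketched as follows. The sketch assumes that each assessment calls the reference function and the learner function once, and that network overhead is about 2 seconds, which is inferred from the totals in the examples rather than a documented value.

```matlab
timeLimit       = 60;    % seconds, MATLAB Grader execution time limit
numAssessments  = 3;
networkOverhead = 2;     % seconds, assumed from the example totals

% Run time of one function call in Example #1 and Example #2.
runTimes = [5 10];

% Assumption: each assessment calls the reference and learner function once.
perAssessment = 2 * runTimes;
totalTime     = numAssessments * perAssessment + networkOverhead;
exceedsLimit  = totalTime > timeLimit;

for k = 1:numel(runTimes)
    fprintf('Example #%d: approx. %d seconds total, exceeds limit: %d\n', ...
        k, totalTime(k), exceedsLimit(k));
end
```

You can use the same calculation to check a planned problem before publishing it: substitute your measured run time and the number of assessments, and leave some margin below the limit for slower learner solutions.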